
Standard Dataset

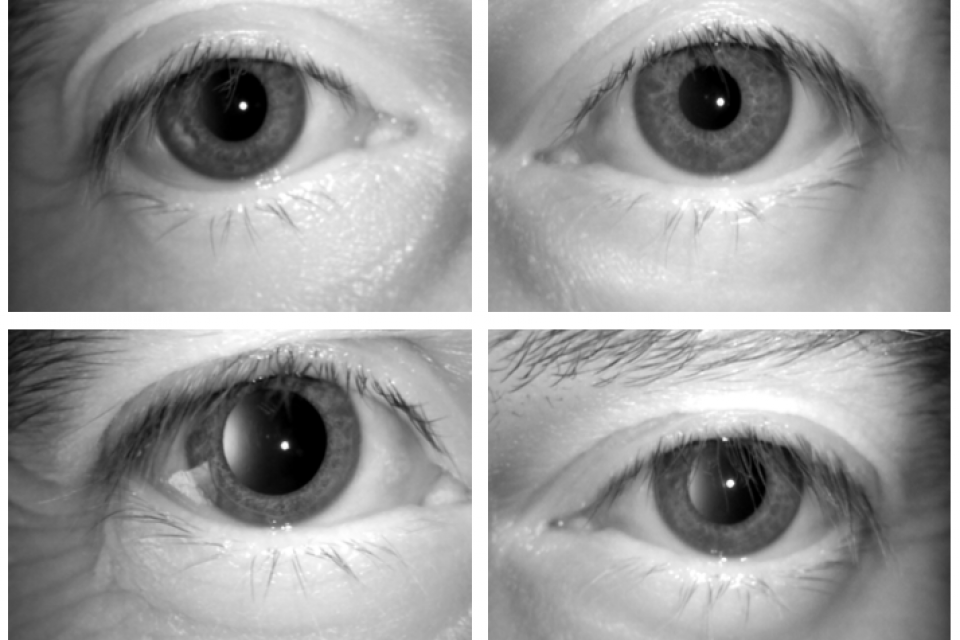

NIR Iris images Under Alcohol Effect

- Submitted by: JUAN TAPIA

- Last updated: Tue, 07/04/2023 - 06:50

- DOI: 10.21227/dzrd-p479


Abstract

This research proposes a method to detect alcohol consumption from Near-Infrared (NIR) periocular eye images. The study focuses on determining the effect of external factors such as alcohol on the Central Nervous System (CNS). The goal is to analyse how this affects iris and pupil movements and whether these changes can be captured with a standard NIR iris camera. This paper proposes a novel Fused Capsule Network (F-CapsNet) to classify NIR iris images of subjects who have consumed alcohol. The results show that the F-CapsNet algorithm can detect alcohol consumption in NIR iris images with an accuracy of 92.3% while using half as many parameters as the standard Capsule Network. This work is a step towards an automatic system to estimate "Fitness for Duty" and prevent accidents caused by alcohol consumption.

This dataset is only for research purposes. Commercial use is not allowed.

Five image-capture sessions were carried out for each person, at 00, 15, 30, 45 and 60 minutes after alcohol ingestion.

Each "Grupo_X" folder contains different people. Each of these has a folder named after the sensor used in the capture session (LG or Iritech), and each sensor folder has five subfolders, one for each capture interval (in minutes).
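The folder layout described above can be indexed with a short script. This is a minimal sketch, not a tool shipped with the dataset. It assumes that the interval folder names are the minute values (e.g. "00", "15"), that images sit directly inside those folders, and that the extensions in `IMAGE_EXTS` cover the actual files; all of these should be checked against the download. Because the description does not state whether there is a per-person folder between "Grupo_X" and the sensor folder, the sketch reads the group from the first path component and the sensor and interval from the two folders just above each image, so it works either way.

```python
from collections import defaultdict
from pathlib import Path

# Assumed image extensions; adjust to the files actually in the dataset.
IMAGE_EXTS = {".bmp", ".png", ".jpg", ".jpeg", ".tif", ".tiff"}


def index_dataset(root):
    """Map (group, sensor, interval_minutes) -> sorted list of image paths.

    Assumed layout: root/Grupo_X/[...]/<sensor>/<interval>/<image>.
    """
    index = defaultdict(list)
    for f in sorted(Path(root).rglob("*")):
        if not f.is_file() or f.suffix.lower() not in IMAGE_EXTS:
            continue  # skip folders, JSON annotations and other files
        parts = f.relative_to(root).parts
        if len(parts) < 4 or not parts[0].startswith("Grupo_"):
            continue
        try:
            minutes = int(parts[-2])  # interval folder name, e.g. "15"
        except ValueError:
            continue  # parent folder is not a minute value
        index[(parts[0], parts[-3], minutes)].append(f)
    return dict(index)
```

For example, `index_dataset("path/to/dataset")[("Grupo_1", "LG", 15)]` would list the LG images captured 15 minutes after ingestion for that group, if such folders exist.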

A total of 600 images were captured of volunteers not under the influence of alcohol, and 2,400 images were taken after each volunteer had ingested 200 ml of alcohol (at 15-minute intervals after consumption).
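The published totals are consistent with five equally sized sessions: 600 + 2,400 = 3,000 images, and 2,400 / 4 post-ingestion sessions = 600 images per session. The sketch below turns that reading into a binary label per capture interval. It assumes (this is not stated explicitly) that the 00-minute session is the sober baseline and that the 15, 30, 45 and 60-minute sessions are the "under the influence" class; `alcohol_label` is a hypothetical helper name.

```python
# Capture intervals in minutes, as listed in the dataset description.
INTERVALS = (0, 15, 30, 45, 60)


def alcohol_label(interval_minutes):
    """Binary label: 0 = not under the influence, 1 = after ingestion.

    Assumption: the 00-minute session is the sober baseline.
    """
    if interval_minutes not in INTERVALS:
        raise ValueError(f"unknown interval: {interval_minutes}")
    return 0 if interval_minutes == 0 else 1


# Consistency check on the published totals, assuming equal session sizes.
SOBER_IMAGES, ALCOHOL_IMAGES = 600, 2400
post_sessions = sum(alcohol_label(m) for m in INTERVALS)  # 4 sessions
per_session = ALCOHOL_IMAGES // post_sessions             # 600 images each
assert per_session == SOBER_IMAGES
total = SOBER_IMAGES + ALCOHOL_IMAGES                     # 3,000 images
```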

The database was split 70%/30% into training and testing sets. The partition is subject-disjoint: no subject appears in both sets.
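A subject-disjoint split can be reproduced by shuffling subject identifiers rather than images. The following is a minimal sketch, not the authors' exact partition: it takes `(subject_id, item)` pairs, where how a subject ID is derived from the folder structure is left as an assumption, and applies the 70/30 ratio to subjects. Image-level proportions therefore only approximate 70/30 when subjects contribute different numbers of images.

```python
import random


def subject_disjoint_split(samples, train_fraction=0.7, seed=0):
    """Split (subject_id, item) pairs so no subject is in both sets."""
    subjects = sorted({subject for subject, _ in samples})
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(subjects)
    n_train = round(len(subjects) * train_fraction)
    train_subjects = set(subjects[:n_train])
    train = [s for s in samples if s[0] in train_subjects]
    test = [s for s in samples if s[0] not in train_subjects]
    return train, test
```

Splitting at the subject level matters because images of the same eye are highly correlated; an image-level split would let the model see test subjects during training.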

Annotated Information

As additional information, the coordinates of the iris, pupil and sclera are included for use in segmentation.

Each session folder contains a JSON file with manual annotations of every region of interest (ROI) of the eye (pupil, iris, sclera).

The VGG Image Annotator (VIA) v2.0.5 was used to label each ROI of the eyes.

Each ROI in each image was labelled with one of the shapes available in VIA (polygon, ellipse, circle), as specified in the JSON files.

Polygons are stored as a set of (x, y) points.

Circles are stored as the centre (x, y) and the radius (r).

Ellipses are stored as the centre (x, y) and the minor and major radii.
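The three shape types can be read from each region's geometry. The sketch below computes the area in pixels of one shape. It assumes the standard VIA 2.x `shape_attributes` field names (`all_points_x`/`all_points_y` for polygons, `cx`, `cy`, `r` for circles, `cx`, `cy`, `rx`, `ry` for ellipses); these should be confirmed against the dataset's JSON files. VIA stores ellipse radii as `rx` and `ry` rather than explicitly as minor/major, but the area formula is the same either way.

```python
import math


def shape_area(shape):
    """Area (pixels^2) of one VIA 2.x `shape_attributes` dict."""
    kind = shape["name"]
    if kind == "polygon":
        xs, ys = shape["all_points_x"], shape["all_points_y"]
        n = len(xs)
        # Shoelace formula over the closed polygon (last point joins the first).
        s = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
        return abs(s) / 2.0
    if kind == "circle":
        return math.pi * shape["r"] ** 2
    if kind == "ellipse":
        # Area of an ellipse is pi * a * b; rotation does not change it.
        return math.pi * shape["rx"] * shape["ry"]
    raise ValueError(f"unsupported shape: {kind}")
```

For instance, `shape_area({"name": "circle", "cx": 5, "cy": 5, "r": 2})` returns 4π, and the ratio of pupil area to iris area could then be compared across capture intervals.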

The file "eye_attributes.json" contains the VIA region/file attribute definitions needed to identify each ROI.
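To associate each annotated shape with its ROI (pupil, iris or sclera), the region attribute defined in "eye_attributes.json" has to be looked up in each region's `region_attributes`. The following is a minimal sketch under several assumptions. It assumes the annotation files follow the VIA 2.x layout: either a plain export keyed by image, or a project file that nests those records under `_via_img_metadata`. It also assumes that the attributes file has the VIA attribute-export layout with `"region"` and `"file"` keys. The default attribute name `"roi"` is hypothetical; the real name should be taken from "eye_attributes.json".

```python
import json
from collections import defaultdict


def region_attribute_names(attributes_json_text):
    """Names of region attributes in an assumed VIA attribute export."""
    return sorted(json.loads(attributes_json_text).get("region", {}))


def regions_by_label(via_json_text, attribute="roi"):
    """Map filename -> {ROI label: [shape_attributes, ...]}.

    `attribute` is a hypothetical default; use the name defined in
    eye_attributes.json.
    """
    data = json.loads(via_json_text)
    # Project files nest per-image records under "_via_img_metadata";
    # plain annotation exports keep them at the top level.
    records = data.get("_via_img_metadata", data)
    out = {}
    for record in records.values():
        grouped = defaultdict(list)
        for region in record.get("regions", []):
            label = region.get("region_attributes", {}).get(attribute, "unlabelled")
            if isinstance(label, dict):  # checkbox-type attributes are dicts
                label = ",".join(k for k, v in label.items() if v) or "unlabelled"
            grouped[label].append(region["shape_attributes"])
        out[record["filename"]] = dict(grouped)
    return out
```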
