Dataset for IoT Assisted Human Posture Recognition

- Citation Author(s):

- Ritwik Duggal (National Institute of Technology, Hamirpur)

- Jahnvi Gupta (National Institute of Technology, Hamirpur)

- Mukesh Kumar (National Institute of Technology, Hamirpur)

- Joel J.P.C. Rodrigues (Fellow, IEEE; Post-Graduation Program in Electrical Engineering (PPGEE), Federal University of Piauí, Teresina 64049-550, Brazil, and Instituto de Telecomunicações, 1049-001 Lisbon, Portugal)

- Submitted by:

- Ritwik Duggal

- Last updated:

- DOI:

- 10.21227/gk5q-gw14

- Data Format:

4058 views

- Categories:

- Keywords:

Abstract

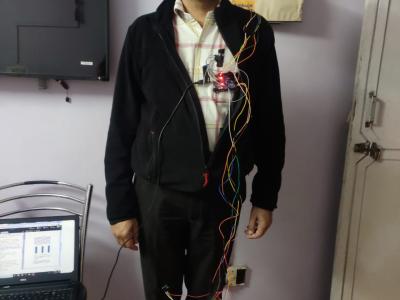

The ability to detect human postures is particularly important in fields such as ambient intelligence, surveillance, elderly care, and human-machine interaction. Most earlier work in this area is based on computer vision, but these approaches are mostly limited in their ability to provide real-time detection. We are therefore working toward an Internet of Things (IoT) based solution for human posture recognition. In the first phase, a dataset of 37419 samples has been prepared, consisting of healthy and unhealthy postures for sitting, standing, and sleeping activities. In the second phase, this dataset will be used to classify human postures as healthy or unhealthy using a machine learning algorithm.

Instructions:

Each sample consists of a total of 20 attributes: 9 accelerometer readings (3 each from the knee, chest, and back), 9 gyroscope readings (3 each from the knee, chest, and back), activity (sitting, standing, or sleeping), and type (healthy or unhealthy). Figure 3 shows a small sample of the dataset.

Consider the first row of the dataset. The accelerometer measures acceleration along the x, y, and z axes, giving 3 values (ax, ay, and az) at the body location where the sensor is placed. The gyroscope measures angular velocity about the x, y, and z axes, giving 3 further values (gx, gy, and gz) at the same location.

- Chest: chest ax = 8476, chest ay = 936, and chest az = 252 give the acceleration along the x, y, and z axes respectively when the sensor is placed on the chest. Chest gx = 757, chest gy = 708, and chest gz = 231 give the angular velocity about the x, y, and z axes respectively.

- Knee: knee ax = -124, knee ay = 6672, and knee az = -3560 give the acceleration along the x, y, and z axes respectively when the sensor is placed on the knee. Knee gx = -261 gives the angular velocity about the x-axis.

- Back: back ax = 324, back ay = 7932, and back az = 2664 give the acceleration along the x, y, and z axes respectively when the sensor is placed on the back. Back gx = -496, back gy = 222, and back gz = 46 give the angular velocity about the x, y, and z axes respectively.

Type indicates whether the data collected represents a healthy or an unhealthy posture; in this row it is healthy. Activity indicates whether the posture is sitting, lying, or standing; in this row it is sitting.
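The 20-attribute layout described above can be sketched in Python as follows. This is only an illustration under stated assumptions: the column names (`chest_ax`, `knee_gz`, and so on) and the helper `split_sample` are hypothetical and may not match the headers in the published file, and the readings are assumed to be integers, as in the values quoted above.

```python
from itertools import product

# Assumed naming scheme "<location>_<reading>"; the published file may differ.
LOCATIONS = ("chest", "knee", "back")
READINGS = ("ax", "ay", "az", "gx", "gy", "gz")

# 3 locations x 6 readings = 18 sensor attributes
SENSOR_COLUMNS = [f"{loc}_{reading}" for loc, reading in product(LOCATIONS, READINGS)]
# plus the 2 label attributes, for 20 attributes in total
LABEL_COLUMNS = ["activity", "type"]
ALL_COLUMNS = SENSOR_COLUMNS + LABEL_COLUMNS


def split_sample(row):
    """Split one sample (a dict keyed by column name) into features and labels.

    Returns a list of 18 integer sensor readings and an (activity, type) tuple.
    Raises ValueError if any of the 20 expected attributes is absent.
    """
    missing = [col for col in ALL_COLUMNS if col not in row]
    if missing:
        raise ValueError(f"missing attributes: {missing}")
    features = [int(row[col]) for col in SENSOR_COLUMNS]
    labels = (row["activity"], row["type"])
    return features, labels
```

Rows read with `csv.DictReader` from the downloaded file could be passed to `split_sample` once their headers are mapped to the assumed names.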
