IREYE4TASK

- Citation Author(s): Siyuan Chen, Julien Epps

- Submitted by: Siyuan Chen

- DOI: 10.21227/qe5t-d517

Abstract

IREye4Task is a dataset for wearable eye landmark detection and mental state analysis. Sensing the mental state induced by different task contexts, where cognition is the focus, is as important as sensing affective states, where emotion is in the foreground of consciousness, because completing tasks is part of every waking moment of life. However, few datasets are publicly available to advance mental state analysis, especially datasets that use the eye as the sensing modality and provide detailed ground truth for eye behaviors. Here, we share a high-quality, publicly accessible eye video dataset, IREye4Task, comprising more than a million frames. For each frame, the eyelid, pupil and iris boundaries are annotated, together with one of six eye states and the task condition (four mental states, each at two load levels, plus before and after the experiment), so that eye behaviors can be obtained as responses to different task contexts. This dataset provides an opportunity to recognize eye behaviors from close-up infrared eye images and to examine the relationship between eye behaviors, different mental states and task performance.

Instructions:

This dataset is for research use only.

Participants

Twenty participants over 18 years of age (10 male, 10 female; age M = 25.8 years, SD = 7.17) volunteered. All participants had normal vision or vision corrected to normal with contact lenses, and none had eye diseases causing obvious excessive blinking. They signed informed consent before the experiment and were unaware of the precise experimental hypotheses. All procedures in this study were approved by the University of New South Wales research ethics committee in Australia and were in accordance with the relevant ethical standards.

Environment

The experiment took place in a research lab where the ceiling lights were uniformly distributed and the ripple-free lighting in the room was constant. Three walls of the room were covered by dark drapes; the fourth was a white wall with a window to another room (no light came through the window). Two chairs and one table were set up on one side of the room, where the participant sat at the table using a laptop and the experimenter sat opposite, using another laptop and conducting conversations as needed. Another table and chair were placed on the other side of the room; participants were asked to walk from their table to this table and back. The detailed experimental setting is shown in Figure 1 in [1].

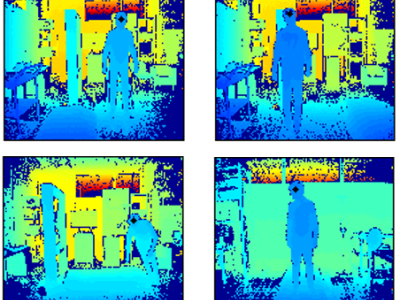

Apparatus

A wearable headset from Pupil Labs [2] was used to record left and right eye videos (640 × 480 pixels at 60 Hz) and a scene video (1280 × 720 pixels at 30 Hz). The headset was connected to a lightweight laptop via USB, and all three videos were recorded and stored on the laptop. The laptop was placed in a backpack carried by the participant during the experiment so that their movement was not restricted.

Experimental design

Task instructions were displayed on a 14-inch laptop placed about 20-30 cm from the participant, who was seated at a desk. Participants used a mouse or touchpad to click the on-screen buttons to choose an answer (for tasks requiring a response via the laptop) or to proceed to the next task. To reduce the effect of the pupillary light reflex on pupil size, they were instructed to keep their eyes fixed on the screen and not to look around during the experiment. During the physical load task, however, their gaze was naturally directed at the surroundings, but they followed the same walking path at the low and high load levels.

Four types of tasks were used to induce four different load types: each task was designed so that one load type dominated, and the level of this dominant load was manipulated while the other load types were kept the same. The tasks were modified from the experiment in [4]. The cognitive load task required summing two numbers displayed sequentially on the screen (rather than simultaneously, as in [4]) and giving the answer verbally when ready, after clicking a button on the screen. The perceptual load task was to search a list of full names for a given first name (rather than an object, as in [4]) that had previously been shown on the screen, and to click that name. The physical load task was to stand up, walk from the desk to another desk (about 5 metres away), walk back and sit down (rather than lifting, as in [4]). The communicative load task was to hold a conversation with the experimenter, either a simple conversation or an object-guessing game (with different questions and objects to guess from those in [4]).

Before the experiment, participants completed a training session with one example of each load level of each task type to become familiar with the tasks. They then put on the wearable devices and data collection started. Each task type had its own block: five addition tasks in a cognitive load block, two search tasks in a perceptual load block, one physical task in a physical load block, and 10 questions to answer or ask in a communicative load block. This was intended to make participants spend a similar amount of time on each block. Because each task type was run at two load levels, there were 8 blocks (4 task types × 2 levels), presented in an order that was generated randomly beforehand and was the same for every participant. After these 8 blocks, participants completed another set of 8 blocks in a different order and with different addends, target names and questions. At the end of each block, participants rated the effort they had spent on the completed task on a 9-point scale, followed by a pause option that allowed them to take a break if needed. The nine rating choices represent degrees of effort, from 'Nearly max', 'Very much' and 'Much', through 'Medium to much', 'Medium' and 'Medium to little', to 'A little', 'Little' and 'Very little', with the intervals intended to be as fine and as equal as possible. The session lasted around 13 to 20 min in total.

Detailed experimental design can be found in Figure 2 in [1].

Data Records

1. The right eye video (eye0_sub*.mp4) was selected for use because it showed better image quality for most participants. For three participants, the left eye video (eye1_sub*.mp4) was used instead, because their right eye video was corrupted (i.e., the file could not be opened) or their eye was out of the camera's field of view when wide open.

Note that sub15 and sub20 did not consent to sharing their eye videos, so eye0_sub15.mp4 and eye0_sub20.mp4 are not included in this public dataset. Eye0_sub9.mp4 is shorter than the other videos because the recording device stopped for an unknown reason.

2. Eye?_sub*.txt from 20 participants contains 58 columns. Column 1 is the timestamp of each video frame of Eye?_sub*.mp4, obtained from the Pupil Labs recording files. Column 2 is the eye state (0-5). Columns 3 to 30 are the x coordinates of the 28 eye landmarks, and columns 31 to 58 are their y coordinates. Example MATLAB code for displaying an eye image with its landmarks is provided in ‘process_eye_landmarks.m’.

Note that the timestamps come from the Pupil Labs recording files rather than from the videos themselves. The definitions of the eye states and the order of the eye landmarks are given in Table 4 in [3].

3. Tasks_sub*.csv from 20 participants contains two columns. Column 1 is the task block, denoted as:

'befExp': before the first task.

'aftExp': after all tasks finished.

'cogH': arithmetic task, high load level.

'cogL': arithmetic task, low load level.

'perH': search task, high load level.

'perL': search task, low load level.

'phyH': walking task, high load level (high speed).

'phyL': walking task, low load level (low speed).

'comH': conversation task, high load level (the participant asks a question, then listens to a yes/no answer from the experimenter).

'comL': conversation task, low load level (the participant listens to a question from the experimenter, then answers yes or no).

'pause': between two tasks or during a break; no task stimuli.

Column 2 gives what the participant is doing within the task block, denoted as:

'Task': the main task. In 'cog' blocks this is calculating, in 'per' blocks it is searching, and in 'phy' blocks it is the physical movement, i.e., standing up, walking to the other table, walking back and sitting down.

'lisnH': listening to a question from the experimenter.

'lisnL': listening to a yes/no answer from the experimenter.

'spkH': asking a question.

'spkL': answering yes or no.

'pause': no task stimuli, or between the end of one task and the beginning of the next.

'preTask': reading the instructions on the laptop screen before the main task.

'rating': rating mental effort after the main task.

Details of the task procedure can be found in Figure 2 in [1]. Each row in Tasks_sub*.csv corresponds to the same row in Eye?_sub*.txt.

4. process_eye_landmarks.m provides an example of overlaying eye landmarks on eye video frames using MATLAB.

5. EyeSize_Age_CogPerformance.csv provides the eye length, age and gender of the 20 participants, as well as their task performance (0 = wrong, 1 = correct) on the arithmetic (cogL and cogH) and search (perL and perH) tasks.
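The following Python sketch shows one way to load the per-frame annotations and task labels described above and to select the frames of one task block. It assumes that Eye?_sub*.txt is a plain numeric table without a header (whitespace-separated by default) and that Tasks_sub*.csv is comma-separated without a header row; these formats, and the function names `parse_eye_txt`, `parse_tasks_csv` and `frames_in_block`, are illustrative assumptions rather than part of the dataset. Column numbers in the text are 1-based, whereas the NumPy slices below are 0-based.

```python
import csv
from dataclasses import dataclass

import numpy as np

N_LANDMARKS = 28  # landmarks per frame, as described in item 2


@dataclass
class EyeFrames:
    timestamp: np.ndarray  # column 1 (Pupil Labs timestamp)
    eye_state: np.ndarray  # column 2, integer eye state 0-5
    x: np.ndarray          # columns 3-30, shape (n_frames, 28)
    y: np.ndarray          # columns 31-58, shape (n_frames, 28)


def parse_eye_txt(source, delimiter=None):
    """Split a 58-column Eye?_sub*.txt table into its fields.

    `source` may be a path, an open file or a list of lines. delimiter=None
    splits on whitespace; pass "," if the file is comma-separated (assumption).
    """
    data = np.loadtxt(source, delimiter=delimiter, ndmin=2)
    if data.shape[1] != 2 + 2 * N_LANDMARKS:
        raise ValueError(f"expected 58 columns, got {data.shape[1]}")
    return EyeFrames(
        timestamp=data[:, 0],
        eye_state=data[:, 1].astype(int),
        x=data[:, 2:2 + N_LANDMARKS],  # 0-based slice of columns 3-30
        y=data[:, 2 + N_LANDMARKS:],   # 0-based slice of columns 31-58
    )


def parse_tasks_csv(source, has_header=False):
    """Return (task_block, activity) label lists from Tasks_sub*.csv rows."""
    rows = [r for r in csv.reader(source) if r]
    if has_header:
        rows = rows[1:]
    # Strip stray spaces and quote characters around labels such as 'cogH'.
    clean = [[cell.strip().strip("'\"") for cell in r[:2]] for r in rows]
    return [r[0] for r in clean], [r[1] for r in clean]


def frames_in_block(frames, blocks, block_label):
    """Boolean mask of frames in one task block (e.g. 'cogH').

    Relies on row i of Tasks_sub*.csv matching row i of Eye?_sub*.txt.
    """
    if len(blocks) != len(frames.timestamp):
        raise ValueError("label and frame counts differ")
    return np.asarray(blocks) == block_label
```

For example, with hypothetical file names following the patterns above, `frames = parse_eye_txt("Eye0_sub1.txt")` and `blocks, _ = parse_tasks_csv(open("Tasks_sub1.csv", newline=""))` would let `frames_in_block(frames, blocks, "cogH")` select the high-load arithmetic frames, provided the files match the assumed layout.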

Task condition segments, eye landmark and eye state annotations, and mental state ground truth

The task labels were obtained automatically from the task stimulus presentation interface, which recorded when task stimuli were presented and when participants clicked a button to begin and end each task. The eye videos were synchronized with the presentation interface using a long blink at the beginning of the experiment.
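Because the synchronization relies on a long blink at the start of the recording, one way to inspect it is to look for the longest run of fully closed-eye frames (eye state 1, as described in the Usage Notes) early in each recording. The sketch below is a hypothetical check, not the authors' synchronization procedure; the function name `find_sync_blink`, the 60 s search window and the assumption that timestamps are in seconds are illustrative choices.

```python
import numpy as np


def find_sync_blink(timestamps, eye_state, search_seconds=60.0, closed_state=1):
    """Return (start_time, end_time) of the longest fully-closed-eye run
    within the first `search_seconds` of a recording, or None if there is none.

    Assumes timestamps are in seconds and eye state 1 means a fully closed eye.
    """
    t = np.asarray(timestamps, dtype=float)
    closed = (np.asarray(eye_state) == closed_state) & (t - t[0] <= search_seconds)
    # Rising (+1) and falling (-1) edges of the closed-eye mask mark run boundaries.
    edges = np.diff(np.concatenate([[0], closed.astype(int), [0]]))
    starts = np.flatnonzero(edges == 1)
    stops = np.flatnonzero(edges == -1)  # exclusive end index of each run
    if starts.size == 0:
        return None
    longest = np.argmax(stops - starts)
    return float(t[starts[longest]]), float(t[stops[longest] - 1])
```

The returned times are those of the first and last closed-eye frames of the run; comparing them with the start of the 'befExp' segment in Tasks_sub*.csv is one possible sanity check.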

Eye landmarks and eye states were first estimated using machine learning and then manually checked and corrected frame by frame to ensure high quality. Details can be found in Section 3.2 in [3].

For the mental state ground truth, the four load types and their load levels were labelled according to the task design and verified using the subjective ratings, the available performance scores and the task durations. Details can be found in Figure 3 in [1].

Usage Notes

- Users should fit an ellipse to the pupil landmarks to obtain pupil size in pixels.

- The participants' eye length, measured with a ruler in cm, can be combined with the eye length in the eye video, measured in pixels between the two eye-corner landmarks, to convert pupil size from pixels to cm.

- Because the pupil is not visible in eye states 1-4 and pupil size estimates are inaccurate in eye state 5, pupil size in these frames should be interpolated.

- Blinks can be obtained from the eye state, depending on your definition of a blink. For example, if a blink is a fully closed eye, then eye state 1 is a blink; if a blink is any frame in which the pupil is completely occluded, then eye states 1-4 are blinks.

- The intervals between timestamps are not uniform. Interpolation and resampling may be needed to obtain equally spaced samples at the desired frequency.
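The usage notes above can be sketched in Python as follows. This is a minimal illustration under several assumptions: the pupil boundary points and the two eye-corner points must be selected using the landmark order in Table 4 of [3] (they are passed in explicitly here rather than indexed); the ellipse fit is a plain least-squares algebraic conic fit, which may differ from the fit used in [3]; "pupil size" is taken to be the diameter of the circle with the same area as the fitted ellipse; eye state 0 is treated as the only state with a reliable pupil; and timestamps are assumed to be increasing and in seconds. All function names are hypothetical.

```python
import numpy as np


def fit_ellipse(x, y):
    """Least-squares algebraic conic fit to pupil boundary points.

    Returns (centre_xy, semi_axes) in the input units (pixels), with the
    semi-major axis first. Not necessarily the fitting method used in [3].
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    if x.size < 5:
        raise ValueError("an ellipse needs at least 5 points")
    # Normalise coordinates for numerical stability; undone at the end.
    mx, my = x.mean(), y.mean()
    s = np.sqrt(np.mean((x - mx) ** 2 + (y - my) ** 2))
    xn, yn = (x - mx) / s, (y - my) / s
    # Conic a*x^2 + b*x*y + c*y^2 + d*x + e*y + f = 0: the unit-norm coefficient
    # vector minimising the algebraic residual is the last right singular vector.
    design = np.column_stack([xn * xn, xn * yn, yn * yn, xn, yn, np.ones_like(xn)])
    a, b, c, d, e, f = np.linalg.svd(design)[2][-1]
    m = np.array([[a, b / 2], [b / 2, c]])
    v = np.array([d / 2, e / 2])
    if np.linalg.det(m) <= 0:
        raise ValueError("points do not describe an ellipse")
    if a < 0:  # flip signs so that m is positive definite
        m, v, f = -m, -v, -f
    centre = np.linalg.solve(m, -v)
    k = f + v @ centre  # conic value at the centre; negative for a real ellipse
    if k >= 0:
        raise ValueError("degenerate conic")
    # eigvalsh returns ascending eigenvalues, so the major semi-axis comes first.
    semi_axes = np.sqrt(-k / np.linalg.eigvalsh(m)) * s
    return centre * s + np.array([mx, my]), semi_axes


def equivalent_diameter(semi_axes):
    """Diameter of the circle with the same area as the ellipse (one choice of pupil size)."""
    return 2.0 * np.sqrt(semi_axes[0] * semi_axes[1])


def px_to_cm(length_px, eye_length_cm, corner_a, corner_b):
    """Scale a length in pixels by (ruler eye length in cm) / (corner-to-corner distance in px)."""
    eye_length_px = np.hypot(corner_b[0] - corner_a[0], corner_b[1] - corner_a[1])
    return length_px * eye_length_cm / eye_length_px


def interpolate_invalid(t, size, eye_state, valid_states=(0,)):
    """Linearly interpolate pupil size over frames whose eye state is not valid.

    Frames before the first / after the last valid sample take the nearest valid value.
    """
    t, size = np.asarray(t, float), np.asarray(size, float)
    ok = np.isin(eye_state, valid_states) & np.isfinite(size)
    if not ok.any():
        raise ValueError("no valid pupil samples")
    return np.interp(t, t[ok], size[ok])


def blink_events(t, eye_state, blink_states=(1,)):
    """(start_time, end_time) of each run of frames whose state is in blink_states.

    Use (1,) for fully closed eyes or (1, 2, 3, 4) for a completely occluded pupil.
    """
    t = np.asarray(t, float)
    hit = np.isin(eye_state, blink_states).astype(int)
    edges = np.diff(np.concatenate([[0], hit, [0]]))
    starts, stops = np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)
    return [(float(t[i]), float(t[j - 1])) for i, j in zip(starts, stops)]


def resample_uniform(t, values, rate_hz):
    """Linearly resample an irregularly timed signal onto an evenly spaced grid."""
    t = np.asarray(t, float)
    n = int(np.floor((t[-1] - t[0]) * rate_hz)) + 1
    grid = t[0] + np.arange(n) / rate_hz
    return grid, np.interp(grid, t, values)
```

An area-based measure (pi times the product of the semi-axes) could be used instead of the equivalent diameter; converting an area to cm² would then require the square of the pixel-to-cm factor.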

Acknowledgements

This data was produced by University of New South Wales-Sydney Australia under Army Research Office (ARO) Award Number W911NF1920330. ARO, as the Federal awarding agency, reserves a royalty-free, nonexclusive and irrevocable right to reproduce, publish, or otherwise use this data for Federal purposes, and to authorize others to do so in accordance with 2 CFR 200.315(b).

References

[1] S. Chen, J. Epps, and F. Paas, "Pupillometric and blink measures of diverse task loads: Implications for working memory models," British Journal of Educational Psychology, 2022.

[2] https://pupil-labs.com/

[3] S. Chen and J. Epps, "A High-Quality Landmarked Infrared Eye Video Dataset (IREye4Task): Eye Behaviors, Insights and Benchmarks for Wearable Mental State Analysis," IEEE Transactions on Affective Computing, 2023.

[4] S. Chen and J. Epps, "Task Load Estimation from Multimodal Head-Worn Sensors Using Event Sequence Features," IEEE Transactions on Affective Computing, vol. 12, no. 3, pp. 622-635, July-Sept. 2021.

If you use this dataset, please cite the following paper:

S. Chen and J. Epps, "A High-Quality Landmarked Infrared Eye Video Dataset (IREye4Task): Eye Behaviors, Insights and Benchmarks for Wearable Mental State Analysis," IEEE Transactions on Affective Computing, 2023.

Dataset Files

- IREye4Task_public9-1.zip (2.59 GB)

- IREye4Task_public9-2.zip (2.01 GB)

- IREye4Task_public9-3.zip (2.15 GB)

- IREye4Task_public9-4.zip (2.3 GB)

- IREye4Task_public9-5.zip (2.28 GB)

- IREye4Task_public9-6.zip (2.3 GB)

- IREye4Task_public9-7.zip (2.25 GB)

- IREye4Task_public9-8.zip (2.28 GB)

- IREye4Task_public9-9.zip (2.4 GB)