TST Intake Monitoring dataset v2

- Citation Author(s):

- Submitted by:

- Susanna Spinsante

- Last updated:

- DOI:

- 10.21227/H2BC7W

- Data Format:

- Links:

418 views

418 views

- Categories:

- Keywords:

Abstract

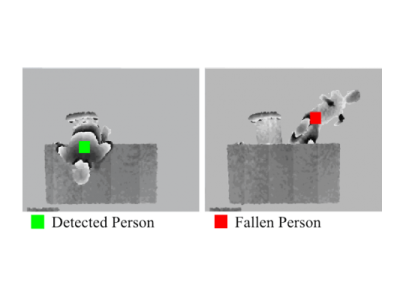

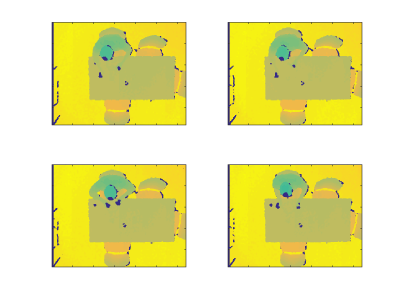

The dataset contains depth frames collected using Microsoft Kinect v1 during the execution of food and drink intake movements.

Instructions:

The dataset is composed by the executions of food and drink intake actions performed by 20 young actors.

Each actor repeated each action 3 times, generating a total number of 60 sequences. The repetitions involve different movements:

- repetition 1: eat a snack using the hand and drink water from a glass (Test 1,4,7,10,.. ,58);

- repetition 2: eat a soup with a spoon and pour/drink water (Test 2,5,8,11,.. ,59);

- repetition 3: use knife and fork for the main meal and finally wiping the mouth with a napkin (Test 3,6,9,12,..,60).

For each sequence, the following data are available:

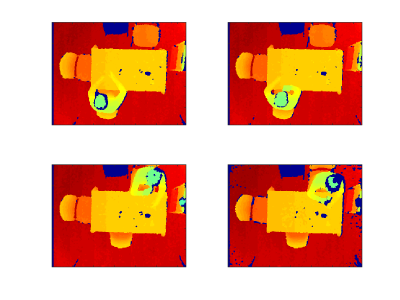

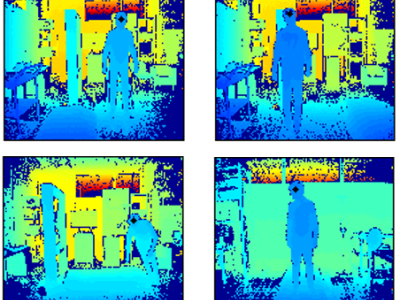

- depth frames, resolution of 320x240, captured by Kinect v1 in top-view configuration;

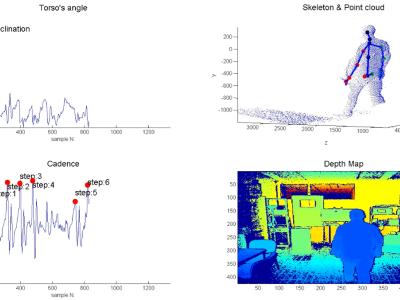

- coordinates of nodes computed by three unsupervised algorithms (SOM, SOM_Ex, GNG) and coordinates of ground-truth nodes (only for hands and head, manually identified): http://www.tlc.dii.univpm.it/dbkinect/FoodIntake_vr2/LoadNets_And_GT_vr2.zip

This code can be used to read data with MATLAB, and to convert the depth frame from pixel domain to the point cloud domain:

http://www.tlc.dii.univpm.it/dbkinect/FoodIntake_vr2/FromDepth2PC.zip

If you use the dataset, please cite the following paper:

S. Gasparrini, E. Cippitelli, E. Gambi, S. Spinsante and F. Florez-Revuelta, “Performance Analysis of Self-Organising Neural Networks Tracking Algorithms for Intake Monitoring Using Kinect,” 1st IET International Conference on Technologies for Active and Assisted Living (TechAAL), 6th November 2015, Kingston upon Thames (UK).