ExoNet: Egocentric Images of Walking Environments

- Citation Author(s):

- William McNally (University of Waterloo)

- Alexander Wong (University of Waterloo)

- Submitted by:

- Brokoslaw Laschowski

- Last updated:

- DOI:

- 10.21227/rz46-2n31

- Links:

6263 views

- Categories:

- Keywords:

Abstract

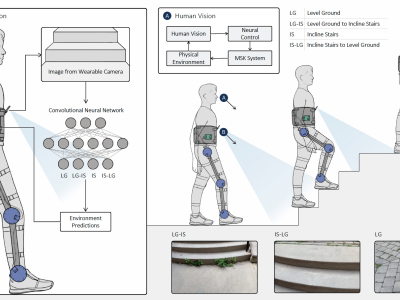

Egocentric vision is important for environment-adaptive control of humans and robots. Here we developed ExoNet, the largest open-source dataset of wearable camera images of real-world walking environments. The dataset contains over 5.6 million RGB images of indoor and outdoor environments, which were collected during summer, fall, and winter seasons. Over 923,000 images were human-annotated using a 12-class hierarchical labelling architecture. ExoNet serves as a communal platform to develop, optimize, and compare new deep learning models for egocentric visual perception, with applications in robotics and neuroscience.

Reference:

Laschowski B, McNally W, Wong A, and McPhee J. (2022). Environment classification for robotic leg prostheses and exoskeletons using deep convolutional neural networks. Frontiers in Neurorobotics. DOI: 10.3389/fnbot.2021.730965.

Instructions:

Details on the ExoNet database are provided in the reference above. Please email Dr. Laschowski (blaschow@uwaterloo.ca) with any additional questions or requests for technical assistance.