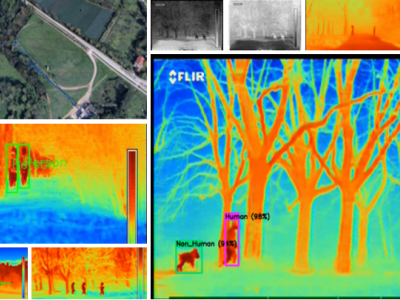

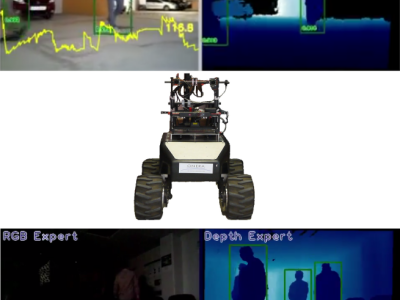

PDIWS is a synthetic thermal imaging dataset for Person Detection in Intrusion Warning Systems. It consists of a training set of 2,000 images and a test set of 500 images. Each image is synthesized by compositing a subject (the intruder) onto a background using a modified Poisson image editing method. There are 50 different backgrounds and nearly 1,000 subjects, divided into five classes according to human pose: creeping, crawling, stooping, climbing, and other. An intrusion is confirmed if any of the first four poses is detected.
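
The intrusion rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `is_intrusion` and the lowercase label strings are assumptions, and the labels stored in the actual annotation files may be spelled or encoded differently.

```python
# Pose classes named in the dataset description. The exact label strings
# used in the annotation files are an assumption made for this sketch.
INTRUSION_POSES = {"creeping", "crawling", "stooping", "climbing"}


def is_intrusion(detected_poses):
    """Return True if any detected pose is one of the four intrusion poses.

    `detected_poses` is an iterable of class-name strings, for example the
    labels predicted by a detector for a single image (hypothetical input).
    """
    return any(pose.lower() in INTRUSION_POSES for pose in detected_poses)


if __name__ == "__main__":
    # A frame containing only the "other" pose does not raise a warning.
    print(is_intrusion(["other"]))
    # A frame with at least one of the four intrusion poses does.
    print(is_intrusion(["other", "climbing"]))
```

Keeping the four poses in a set makes the check a simple membership test, so the rule is easy to adjust if the class list changes.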

- Categories: