Translucent Object Dataset

- Citation Author(s):

-

Sofia Nedorosleva (Santa Clara University)Maria Kyrarini (Santa Clara University)

- Submitted by:

- Maria Kyrarini

- Last updated:

- DOI:

- 10.21227/ya33-da48

193 views

193 views

- Categories:

- Keywords:

Abstract

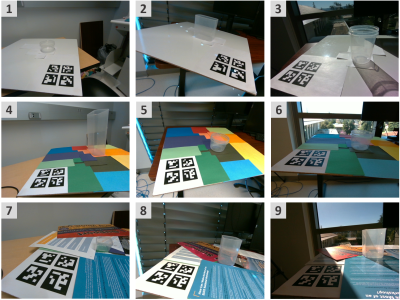

Humanoid robots are anticipated to play integral roles in domestic settings, aiding individuals with everyday tasks. Given the prevalence of translucent storage containers in households, characterized by their practicality and transparency, it becomes imperative to equip humanoid robots with the capability to localize and manipulate these objects accurately. Consequently, 6D pose estimation of objects is a crucial area of research to advance robotic manipulation. However, most existing 6D pose estimation methods and datasets predominantly focus on opaque objects, leaving a gap in research concerning translucent objects. We introduce a novel translucent object dataset with 6D pose and bounding box annotations tailored for robotic manipulation. The dataset includes six classes of translucent container objects in diverse environments and lighting conditions. Additionally, we introduce an automated ground truth annotation method that can be easily replicated and requires only RGB input.

Instructions:

The Dataset is designed to facilitate research in 6D pose estimation, particularly focusing on translucent containers suitable for storing cooking-related items. The dataset adheres to the widely used BOP (Benchmark for 6D Object Pose Estimation) format, ensuring compatibility and ease of integration with existing frameworks. Each scene within the dataset comprises RGB images, depth masks, corresponding 3D models, and annotations that include 6D pose and bounding box information. The dataset includes six classes, all of which are translucent containers designed for cooking-related and storage applications. These containers are categorized into two primary types based on their base shape; circular and rectangular. The RGB images used in the dataset are 640x480 pixels. A total of 54 scenes were captured both for training and testing purposes. Each scene comprises an average of 500 frames (images), capturing diverse perspectives and viewpoints of each object.