Standard Dataset

BC-AC Multimodal Emotional Speech Dataset (Partial Release)

- Submitted by: SHU ZHAO

- Last updated: Mon, 04/07/2025 - 08:42

- DOI: 10.21227/s7b2-c792

Abstract

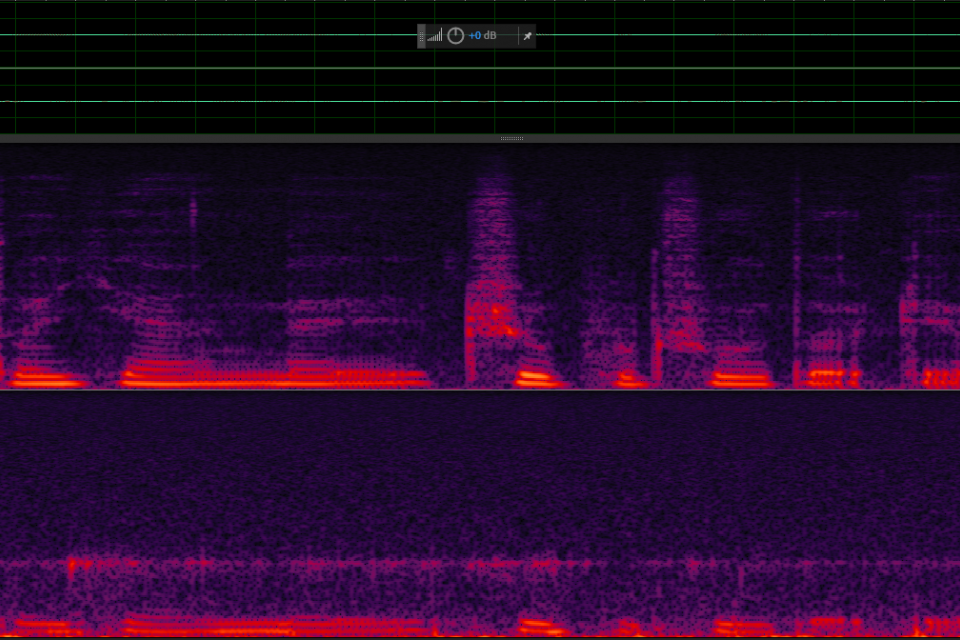

To support research on multimodal speech emotion recognition (SER), we developed a dual-channel emotional speech database of synchronized bone-conducted (BC) and air-conducted (AC) speech recordings. Recordings were made in a professionally treated anechoic chamber with 100 gender-balanced volunteers. AC speech was captured with a digital microphone on the left channel and BC speech with an in-ear BC microphone on the right channel, both sampled at 44.1 kHz to ensure high-fidelity audio.

Each participant completed a 60-minute session consisting of two phases: emotion induction and expression. Participants first watched emotion-inducing video clips and reported their emotional state using a discrete label and a 0–5 intensity scale. They then read predefined prompts while maintaining the induced emotion, generating labeled utterances. The speech material included 480 sentences (120 per emotion: angry, happy, neutral, sad) selected from a standardized iFLYTEK corpus. Emotional and neutral utterances were alternated to minimize cognitive fatigue, and the material was evenly divided into four subsets to maintain emotional balance. Manual perceptual evaluation yielded recognition accuracies of 93.56% for emotional and 82.38% for neutral utterances, confirming data quality. After segmentation, the final dataset contains 24,191 labeled utterances.
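The abstract states that AC speech was recorded on the left channel and BC speech on the right. If the released WAV files keep that two-channel layout (an assumption: the README does not state the channel order or sample format of the released files), the two modalities can be separated with Python's standard `wave` module. The function name `split_bc_ac` is hypothetical, and this sketch handles only 16-bit PCM.

```python
import array
import sys
import wave


def split_bc_ac(path):
    """Split a two-channel WAV file into (rate, ac, bc).

    Hypothetical helper. Assumes the released files keep the recording
    layout described in the abstract: AC on the left channel (index 0),
    BC on the right channel (index 1), stored as 16-bit PCM.
    """
    with wave.open(path, "rb") as wf:
        if wf.getnchannels() != 2:
            raise ValueError(f"expected 2 channels, got {wf.getnchannels()}")
        if wf.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        rate = wf.getframerate()
        raw = wf.readframes(wf.getnframes())

    samples = array.array("h")  # signed 16-bit integers
    samples.frombytes(raw)
    if sys.byteorder == "big":  # WAV sample data is little-endian
        samples.byteswap()

    # Frames are interleaved as L0, R0, L1, R1, ...
    ac = samples[0::2]  # left channel -> air-conducted (assumed)
    bc = samples[1::2]  # right channel -> bone-conducted (assumed)
    return rate, ac, bc
```

For example, `rate, ac, bc = split_bc_ac("s1/cut-00001_sad.wav")` should give `rate == 44100` if the files match the description. A `ValueError` means the file is mono or uses a different sample width, so the assumed layout does not hold.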

Overview

This is a partial release of a dual-channel emotional speech dataset designed to support research in multimodal speech emotion recognition (SER). The dataset includes synchronously recorded bone-conducted (BC) and air-conducted (AC) emotional speech signals. The current release contains data from two participants (labeled `s1` and `s2`) and includes only emotionally labeled utterances.

Contents

Each speaker's folder (`s1/`, `s2/`) contains:

- Paired BC and AC speech recordings

- WAV audio files, sampled at 44.1 kHz

- File names encode the emotion label

Speaker Details

| Speaker | Emotionally Labeled Utterances | Emotions Included |
|---------|--------------------------------|-------------------|
| `s1` | 265 | Angry, Happy, Neutral, Sad |
| `s2` | 229 | Angry, Happy, Neutral, Sad |

File Naming Convention

Each audio file name follows this pattern:

`cut-[utterance_id]_[emotion].wav`

- `utterance_id`: Unique numeric identifier per speaker, zero-padded in the example file names (e.g., `00001`)

- `emotion`: One of `angry`, `happy`, `neutral`, or `sad`
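The naming convention can be parsed with a regular expression. This is a sketch: the regex and the `parse_filename` helper are hypothetical, and the separator after `cut` accepts both `-` (as in the example file names) and `_`, so the parser works with either form.

```python
import re
from typing import NamedTuple

# Hypothetical pattern based on names such as "cut-00001_sad.wav".
# Assumes a purely numeric id and a lowercase emotion label.
FILENAME_RE = re.compile(
    r"^cut[-_](?P<utterance_id>\d+)_(?P<emotion>angry|happy|neutral|sad)\.wav$"
)


class ParsedName(NamedTuple):
    utterance_id: str  # kept as a string to preserve zero padding
    emotion: str


def parse_filename(name: str) -> ParsedName:
    """Split a file name into its utterance id and emotion label."""
    match = FILENAME_RE.match(name)
    if match is None:
        raise ValueError(f"unexpected file name: {name!r}")
    return ParsedName(match.group("utterance_id"), match.group("emotion"))
```

Keeping `utterance_id` as a string avoids losing the leading zeros when ids are used to reconstruct file names.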

Data Structure

```
root_directory/AirBone_audioCut_Partial Released/
├── s1/
│   ├── cut-00001_sad.wav
│   ├── cut-00002_sad.wav
│   └── ...
└── s2/
    ├── cut-00269_sad.wav
    ├── cut-00270_sad.wav
    └── ...
```

Each utterance index has `.wav` data for both the BC and AC modalities (where applicable).
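To index a speaker-per-folder layout like the one above and check per-speaker counts against the Speaker Details table, a sketch using `pathlib` could look like this. `index_dataset` and `counts_per_speaker` are hypothetical helpers. The code assumes speaker folders sit directly under the root and skips files whose names do not match the naming pattern.

```python
import re
from collections import Counter
from pathlib import Path

# Hypothetical pattern; accepts "cut-" or "cut_" before the numeric id.
NAME_RE = re.compile(r"^cut[-_](\d+)_(angry|happy|neutral|sad)\.wav$")


def index_dataset(root):
    """Return sorted (speaker, utterance_id, emotion, path) tuples.

    Assumes one sub-folder per speaker (s1, s2, ...) directly under root.
    Files that do not match NAME_RE are ignored.
    """
    entries = []
    speaker_dirs = sorted(p for p in Path(root).iterdir() if p.is_dir())
    for speaker_dir in speaker_dirs:
        for wav in sorted(speaker_dir.glob("*.wav")):
            match = NAME_RE.match(wav.name)
            if match:
                entries.append((speaker_dir.name, match.group(1), match.group(2), wav))
    return entries


def counts_per_speaker(entries):
    """Count indexed utterances per speaker folder."""
    return Counter(speaker for speaker, _, _, _ in entries)
```

If the partial release is complete, pointing `index_dataset` at the `AirBone_audioCut_Partial Released` folder should yield 265 entries for `s1` and 229 for `s2`, matching the table.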

Usage Notes

- Audio files can be directly loaded using any standard speech processing library (e.g., `librosa`, `scipy.io.wavfile`, `torchaudio`).

- Emotion labels are embedded in the file names and can be parsed for supervised learning tasks.

- This subset is provided for preliminary analysis or benchmarking and does not reflect the full dataset size.
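For supervised training, the emotion embedded in each file name can be mapped to an integer class index. The fixed class order (`angry`, `happy`, `neutral`, `sad`) and the helper names below are assumptions for illustration. Any consistent ordering works, as long as it is used for both training and evaluation.

```python
import re

# Hypothetical fixed class order; keep it identical across experiments.
EMOTIONS = ("angry", "happy", "neutral", "sad")
LABEL_TO_INDEX = {name: index for index, name in enumerate(EMOTIONS)}

# The label is assumed to be the last "_"-separated token before ".wav".
_LABEL_RE = re.compile(r"_(angry|happy|neutral|sad)\.wav$")


def label_from_filename(name):
    """Extract the emotion label embedded at the end of a file name or path."""
    match = _LABEL_RE.search(name)
    if match is None:
        raise ValueError(f"no emotion label in {name!r}")
    return match.group(1)


def encode_labels(names):
    """Map file names to integer class indices for supervised learning."""
    return [LABEL_TO_INDEX[label_from_filename(n)] for n in names]
```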

Citation

If you use this dataset in your work, please cite the following:

Q. Wang, M. Wang, Y. Yang, and X. Zhang, “Multi-modal emotion recognition using EEG and speech signals,” Comput. Biol. Med., vol. 149, Art. no. 105907, 2022.

Contact

The full dataset will be made publicly available to researchers worldwide at a later date.

Documentation

| Attachment | Size |
|---|---|
| | 78.86 KB |