Event-based vision

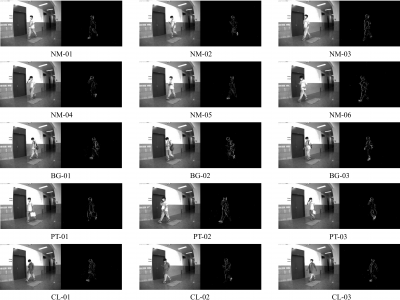

This is the first multi-view, semi-indoor gait dataset captured with the DAVIS346 event camera. The dataset comprises 6,150 sequences, capturing 41 subjects from five different view angles under two lighting conditions. Specifically, for each subject, lighting condition, and view angle, there are six sequences of normal walking (NM), three sequences of walking with a backpack (BG), three sequences of walking with a portable bag (PT), and three sequences of walking while wearing a coat (CL).
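The total can be checked against the per-condition counts. The short sketch below assumes, as the stated total implies, that every subject contributes the listed sequences for each lighting condition and view angle. The constant and function names are illustrative only.

```python
# Sequences per subject, per (lighting condition, view angle), as listed above.
SEQUENCES_PER_CONDITION = {"NM": 6, "BG": 3, "PT": 3, "CL": 3}

NUM_SUBJECTS = 41
NUM_VIEWS = 5
NUM_LIGHTING = 2


def total_sequences(subjects, views, lighting, per_condition):
    """Total = subjects x views x lighting conditions x sequences per condition."""
    return subjects * views * lighting * sum(per_condition.values())


total = total_sequences(NUM_SUBJECTS, NUM_VIEWS, NUM_LIGHTING, SEQUENCES_PER_CONDITION)

# Per-category breakdown (hypothetical helper output, for illustration).
per_category = {
    name: NUM_SUBJECTS * NUM_VIEWS * NUM_LIGHTING * count
    for name, count in SEQUENCES_PER_CONDITION.items()
}

print(total)         # 6150
print(per_category)  # {'NM': 2460, 'BG': 1230, 'PT': 1230, 'CL': 1230}
```

The 15 sequences per condition (6 + 3 + 3 + 3) multiplied by 41 × 5 × 2 = 410 subject-view-lighting combinations gives the 6,150 sequences quoted above.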

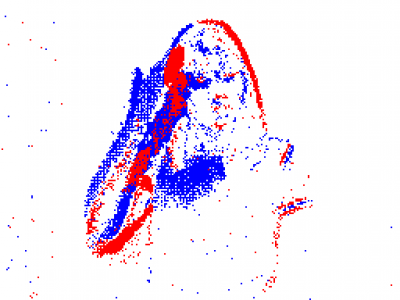

This dataset, referred to as LIED (Light Interference Event Dataset), is presented in the article 'Identifying Light Interference in Event-Based Vision'. We propose LIED, which covers three categories of light interference: strobe light sources, non-strobe light sources, and scattered or reflected light. To capture more realistic scenarios, the dataset also includes dynamic objects and recordings made with both a static and a moving camera. LIED was recorded with the DAVIS346 sensor and provides both frames and events at a resolution of 346 × 260.
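Because LIED provides raw events alongside frames, a common first step for visually inspecting the events is to accumulate them into an image the size of the sensor. The sketch below does this for the 346 × 260 DAVIS346 pixel array. The event tuple layout `(x, y, timestamp_us, polarity)` and the function name are hypothetical; the dataset's actual file format is not described here.

```python
from typing import List, Sequence, Tuple

# DAVIS346 resolution stated above: 346 pixels wide, 260 pixels high.
WIDTH, HEIGHT = 346, 260

# Hypothetical event layout: (x, y, timestamp_us, polarity), polarity 1 = ON, 0 = OFF.
Event = Tuple[int, int, int, int]


def accumulate_events(
    events: Sequence[Event], width: int = WIDTH, height: int = HEIGHT
) -> List[List[int]]:
    """Sum signed polarities per pixel: ON events add 1, OFF events subtract 1."""
    frame = [[0] * width for _ in range(height)]  # indexed as frame[y][x]
    for x, y, _t, p in events:
        if not (0 <= x < width and 0 <= y < height):
            raise ValueError(f"event at ({x}, {y}) lies outside the {width}x{height} sensor")
        frame[y][x] += 1 if p else -1
    return frame


events = [(10, 20, 100, 1), (10, 20, 150, 1), (10, 20, 200, 0), (345, 259, 250, 0)]
frame = accumulate_events(events)
print(frame[20][10], frame[259][345])  # 1 -1
```

Signed accumulation keeps the ON/OFF balance at each pixel; counting ON and OFF events in separate channels is an equally common alternative when both need to be preserved.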

N-WLASL is a synthetic event-based dataset comprising 21,093 samples across 2,000 glosses. It was collected by pointing an event camera at an LCD monitor that plays video frames from WLASL, the largest public word-level American Sign Language dataset. We use a DAVIS346 event camera, with a resolution of 346 × 260, to record the display. The WLASL videos have a resolution of 256 × 256 and a frame rate of 25 Hz. To ensure accurate recording of the display, we implemented three video pre-processing procedures using the python-opencv and dv packages in Python.
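Since the WLASL videos play at 25 Hz, each displayed frame stays on screen for 40 ms. A minimal sketch, assuming event timestamps in microseconds and a known time `t0_us` at which the first video frame appears on the monitor, groups the recorded events by the video frame that was shown when they fired. This is an illustration only, not the dataset's actual pre-processing; the event layout and all names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Event = Tuple[int, int, int, int]  # hypothetical layout: (x, y, timestamp_us, polarity)

WLASL_FPS = 25  # WLASL frame rate stated above
FRAME_PERIOD_US = 1_000_000 // WLASL_FPS  # 40,000 us = 40 ms per displayed frame


def bin_by_video_frame(
    events: Sequence[Event], t0_us: int, period_us: int = FRAME_PERIOD_US
) -> Dict[int, List[Event]]:
    """Group events by the index of the video frame on screen when they fired.

    Assumes t0_us is the timestamp at which the first video frame appeared.
    """
    bins: Dict[int, List[Event]] = {}
    for ev in events:
        t = ev[2]
        if t < t0_us:
            continue  # events recorded before playback started are ignored
        idx = (t - t0_us) // period_us
        bins.setdefault(idx, []).append(ev)
    return bins


events = [(5, 5, 1_000, 1), (6, 5, 39_999, 0), (7, 5, 40_000, 1), (8, 5, 125_000, 1)]
bins = bin_by_video_frame(events, t0_us=0)
print(sorted(bins), [len(bins[k]) for k in sorted(bins)])  # [0, 1, 3] [2, 1, 1]
```

Frame indices with no events (index 2 here) are simply absent from the result, which keeps the mapping sparse for quiet stretches of a video.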

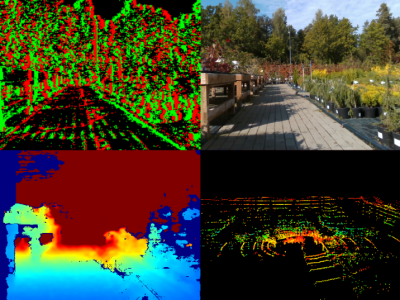

A new generation of computer vision, namely event-based or neuromorphic vision, provides a new paradigm both for capturing visual data and for processing it. Event-based vision is a state-of-the-art technology for robot vision and is particularly promising for visual navigation in mobile robots and drones. Because event-based vision relies on a highly novel type of visual sensor, only a few datasets aimed at visual navigation tasks are publicly available.
