N-WLASL

- Submitted by: Qi Shi

- Last updated: Wed, 06/21/2023 - 20:47

- DOI: 10.21227/x23b-d084

Abstract

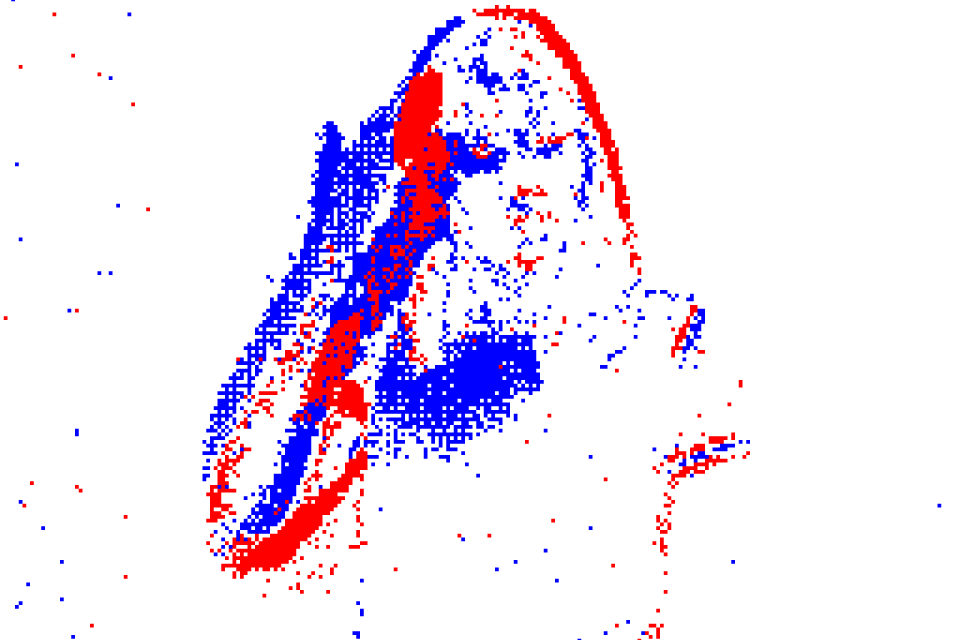

N-WLASL is a synthetic event-based dataset comprising 21,093 samples across 2,000 glosses. It was collected by pointing an event camera at an LCD monitor that plays video frames from WLASL, the largest public word-level American Sign Language dataset. The display was recorded with a DAVIS346 event camera at a resolution of 346x260. The WLASL videos have a resolution of 256x256 and a frame rate of 25 Hz. To ensure accurate recording of the display, we implemented three video pre-processing procedures using the opencv-python and dv packages in Python. These procedures are as follows:

- Add black padding and a red border around each video frame to increase its size to 346x260.

- Scale the frames to 1428x1080 in the original aspect ratio and center them on the monitor display.

- Display all videos sequentially at the original frame rate of 25 Hz, holding the first frame of each video for 500 ms to prevent the event bursts caused by switching videos.
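The padding and centering steps can be sketched in a few lines of NumPy. This is only an illustration, not the authors' pre-processing script: the border thickness, the placement of the red border on the outer edge of the canvas, the BGR channel order (the OpenCV convention), and the 1920x1080 monitor used in the usage line are all assumptions, and the names `pad_frame` and `centered_offset` are hypothetical.

```python
import numpy as np

SENSOR_W, SENSOR_H = 346, 260      # DAVIS346 resolution (width, height)
FRAME_PERIOD_MS = 1000 / 25        # 25 Hz playback -> 40 ms per frame
FIRST_FRAME_HOLD_MS = 500          # hold time for the first frame of each video


def pad_frame(frame, border=2):
    """Place a frame on a black 346x260 canvas with a red outer border.

    `border` (thickness in pixels) is an assumed value.
    """
    h, w = frame.shape[:2]
    if h > SENSOR_H - 2 * border or w > SENSOR_W - 2 * border:
        raise ValueError("frame does not fit inside the bordered canvas")

    canvas = np.zeros((SENSOR_H, SENSOR_W, 3), dtype=frame.dtype)

    # Draw the red border (BGR order assumed).
    red = (0, 0, 255)
    canvas[:border, :] = red
    canvas[-border:, :] = red
    canvas[:, :border] = red
    canvas[:, -border:] = red

    # Paste the frame in the centre; the remaining area stays black.
    top = (SENSOR_H - h) // 2
    left = (SENSOR_W - w) // 2
    canvas[top:top + h, left:left + w] = frame
    return canvas


def centered_offset(screen_w, screen_h, img_w=1428, img_h=1080):
    """Top-left corner that centres the scaled image on the screen."""
    return (screen_w - img_w) // 2, (screen_h - img_h) // 2


if __name__ == "__main__":
    video_frame = np.full((256, 256, 3), 128, dtype=np.uint8)
    print(pad_frame(video_frame).shape)
    print(centered_offset(1920, 1080))  # assumed monitor resolution
```

With a 256x256 input and a 2-pixel border, the frame exactly fills the canvas height between the top and bottom borders, and the black padding appears only on the left and right.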

Requirements:

- pandas

- dv

- h5py

- opencv-python

- json (part of the Python standard library; no installation needed)

- torch
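As a convenience, the snippet below checks which of the listed requirements can be imported in the current environment. It is a small sketch rather than part of the dataset scripts; the helper name `missing_requirements` is hypothetical. Note that the opencv-python package is imported under the module name `cv2`.

```python
from importlib.util import find_spec

# Package name as listed above -> module name used in `import`.
REQUIRED_MODULES = {
    "pandas": "pandas",
    "dv": "dv",
    "h5py": "h5py",
    "opencv-python": "cv2",
    "json": "json",      # standard library
    "torch": "torch",
}


def missing_requirements(modules=REQUIRED_MODULES):
    """Return the listed packages whose module cannot be found."""
    return [pkg for pkg, mod in modules.items() if find_spec(mod) is None]


if __name__ == "__main__":
    missing = missing_requirements()
    print("Missing packages:", missing if missing else "none")
```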

Here is the structure of *.aedat4:

- *.aedat4
  - events
    - x
    - y
    - timestamp
    - polarity
  - frames
    - timestamp
    - image
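The event stream can be read with the `AedatFile` reader from the dv package, which yields NumPy structured arrays with the fields listed above. The following is a minimal sketch, assuming the files follow that layout; the helper names `load_events` and `events_to_columns` are hypothetical, and the dv import is deferred so that the column helper can be used without dv installed.

```python
import numpy as np


def load_events(path):
    """Read all events of one *.aedat4 recording into a structured array."""
    from dv import AedatFile  # deferred import: only needed for file access

    with AedatFile(path) as f:
        # f["events"].numpy() yields packets of events as structured arrays.
        return np.hstack([packet for packet in f["events"].numpy()])


def events_to_columns(events):
    """Split a structured event array into plain per-field arrays."""
    return {
        "timestamp": events["timestamp"].astype(np.int64),
        "x": events["x"].astype(np.int64),
        "y": events["y"].astype(np.int64),
        "polarity": events["polarity"].astype(bool),
    }
```

A typical call would be `cols = events_to_columns(load_events("sample.aedat4"))`, where the file name is a placeholder.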

Only the event data is used for experiments. Use the following scripts to convert the event data (*.aedat4) into voxel grids (*.h5) over a fixed-length time window.

"nslt_2000.json" is the class file used to split the whole dataset into training, validation, and test sets.
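A split file of this kind can be grouped by subset as sketched below. The layout is an assumption based on the format used by the original WLASL release, where each video ID maps to a `subset` name and an `action` list whose first element is the class index; check it against the actual file before relying on it. The helper names are hypothetical.

```python
import json
from collections import defaultdict


def group_by_subset(entries):
    """Map each subset name to a list of (video_id, class_index) pairs.

    Assumed entry layout: {"subset": "train", "action": [class_index, ...]}.
    """
    splits = defaultdict(list)
    for video_id, info in entries.items():
        splits[info["subset"]].append((video_id, info["action"][0]))
    return dict(splits)


def load_splits(path="nslt_2000.json"):
    """Read the class file from disk and group its entries by subset."""
    with open(path, "r", encoding="utf-8") as f:
        return group_by_subset(json.load(f))
```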

"processing.py" crops the events in time and converts them into voxel grids.
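For readers unfamiliar with the representation, the sketch below shows one common way to crop events to a time window and accumulate them into a voxel grid. It is not the code in "processing.py": the number of time bins, the signed-polarity accumulation, the nearest-bin assignment, and the HDF5 dataset name `voxel` are all assumptions chosen for illustration.

```python
import numpy as np


def crop_events(t, x, y, p, t_start, window_us):
    """Keep only events with t_start <= timestamp < t_start + window_us."""
    keep = (t >= t_start) & (t < t_start + window_us)
    return t[keep], x[keep], y[keep], p[keep]


def events_to_voxel_grid(t, x, y, p, num_bins=5, width=346, height=260):
    """Accumulate signed polarities into a (num_bins, height, width) grid."""
    grid = np.zeros((num_bins, height, width), dtype=np.float32)
    if t.size == 0:
        return grid

    # Normalise timestamps to [0, num_bins) and clamp the last event.
    t = t.astype(np.float64)
    span = max(t.max() - t.min(), 1.0)
    bins = ((t - t.min()) / span * num_bins).astype(np.int64)
    bins = np.minimum(bins, num_bins - 1)

    # ON events count +1, OFF events count -1.
    signed = np.where(p > 0, 1.0, -1.0).astype(np.float32)

    # np.add.at handles repeated indices correctly (unbuffered add).
    np.add.at(grid, (bins, y.astype(np.int64), x.astype(np.int64)), signed)
    return grid


def save_voxel_grid(path, grid):
    """Write the grid to an HDF5 file under the assumed dataset name 'voxel'."""
    import h5py  # deferred import: only needed when saving

    with h5py.File(path, "w") as f:
        f.create_dataset("voxel", data=grid, compression="gzip")
```

Nearest-bin accumulation keeps the example short; a bilinear split of each event between its two neighbouring time bins is a frequently used alternative.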