A Large Scale Nepali Text Corpus

- Citation Author(s): Rabindra Lamsal (Artificial Intelligence & Data Science Lab, SC&SS, JNU)

- Submitted by: Rabindra Lamsal

- DOI: 10.21227/jxrd-d245

Abstract

Considering ongoing work in Natural Language Processing (NLP) for the Nepali language, it is evident that the application of Artificial Intelligence and NLP to Nepali, which is written in the Devanagari script, still has a long way to go. The Nepali language is complex in itself and requires multi-dimensional approaches both for pre-processing unstructured text and for training machines to comprehend the language competently. There was a need for a comprehensive Nepali text corpus containing texts from domains such as News, Finance, Sports, Entertainment, Health, Literature and Technology. To address this need, a Nepali text corpus of over 90 million running words (6.5+ million sentences) was compiled.

In addition, 300-dimensional word vectors were computed for more than 0.5 million Nepali words/phrases. The word vectors can be downloaded from http://dx.doi.org/10.21227/dz6s-my90.

The Work

The texts for the corpus were collected by scraping news portals that are freely available in the public domain. Arbitrary websites or blogs could not be scraped, because they vary widely in how the same word is written, and some consistency within the collected texts was necessary. Authoritative Nepali news portals such as Kantipur, Nagariknews and Setopati were observed to be among those that reduced this problem by a significant margin: they used the most common spellings when writing articles and had relatively fewer grammatical errors and less ambiguity in their language structure.

The news portals scraped to build the text corpus were: Ekantipur, Nagariknews, Setopati, Onlinekhabar, Karobardaily, Ratopati, News24nepal, Reportersnepal, Baahrakhari, Hamrokhelkud and Aakarpost. The articles posted on these portals are copyrighted by their respective publishers. The compiled text corpus should only be used for non-commercial research projects.

Designing the corpus:

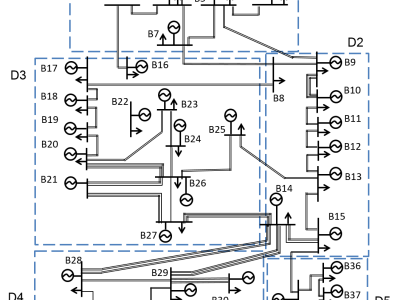

A web crawler was written in Python. It downloaded the source files and saved them on the local machine, in a separate folder for each website.
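The crawler's storage layout (one folder per website) can be sketched as follows. This is a minimal illustration, not the author's crawler: it assumes the article URLs for each site have already been collected (link discovery is not shown), assumes pages decode as UTF-8, and uses a hypothetical numbered-file naming scheme. The `fetch` parameter lets a stub replace the real download for testing.

```python
import os
import urllib.request
from typing import Callable, Iterable


def fetch_html(url: str, timeout: float = 30.0) -> str:
    """Download one page and decode it as UTF-8 (an assumption about the portals)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl_site(
    site: str,
    urls: Iterable[str],
    root: str,
    fetch: Callable[[str], str] = fetch_html,
) -> list[str]:
    """Save each downloaded page under <root>/<site>/ and return the saved paths."""
    site_dir = os.path.join(root, site)  # separate folder per website
    os.makedirs(site_dir, exist_ok=True)
    saved = []
    for i, url in enumerate(urls):
        html = fetch(url)
        # Hypothetical naming scheme: one zero-padded, numbered .html file per article.
        path = os.path.join(site_dir, f"{i:06d}.html")
        with open(path, "w", encoding="utf-8") as f:
            f.write(html)
        saved.append(path)
    return saved
```

Calling `crawl_site("setopati", article_urls, "raw_pages")` would, under these assumptions, fill `raw_pages/setopati/` with one file per article, ready to be merged into blocks.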

The source files in each folder were merged into blocks for further processing; each block file contained 1000 article pages. Once a block was created, the following were removed from it: HTML elements, English letters and digits (a-zA-Z0-9), symbols and unnecessary whitespace.
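The blocking and cleaning steps above might look like the sketch below. The exact regular expressions used for the corpus are not given, so these are assumptions: everything outside the Devanagari Unicode block (U+0900 to U+097F), the zero-width joiners, whitespace, `?` and `!` is treated as removable, which covers a-zA-Z0-9 and symbols while keeping the sentence delimiters needed later for tokenization (the purnabiram lies inside the Devanagari block). `make_blocks` and `clean_block` are hypothetical helper names.

```python
import re
from typing import Iterable, Iterator

BLOCK_SIZE = 1000  # article pages per block file, as described above

TAG_RE = re.compile(r"<[^>]+>")  # HTML elements
# Assumed "keep" set: Devanagari, ZWNJ/ZWJ, whitespace, and the delimiters ? and !.
# Anything else (a-zA-Z0-9, punctuation, symbols) is removed.
DISALLOWED_RE = re.compile(r"[^\u0900-\u097F\u200c\u200d\s?!]")
SPACE_RE = re.compile(r"\s+")


def make_blocks(pages: Iterable[str], size: int = BLOCK_SIZE) -> Iterator[list[str]]:
    """Group page sources into blocks of `size` pages (the last block may be smaller)."""
    block: list[str] = []
    for page in pages:
        block.append(page)
        if len(block) == size:
            yield block
            block = []
    if block:
        yield block


def clean_block(pages: list[str]) -> str:
    """Merge a block and strip HTML, Latin letters, ASCII digits, symbols and extra spaces."""
    text = "\n".join(pages)
    text = TAG_RE.sub(" ", text)
    text = DISALLOWED_RE.sub(" ", text)
    return SPACE_RE.sub(" ", text).strip()
```

For example, `clean_block(["<p>नेपाल abc 123 !</p>", "<div>काठमाडौं, २०८०।</div>"])` returns `"नेपाल ! काठमाडौं २०८०।"`. Note that this sketch keeps Devanagari digits, since only ASCII digits are mentioned in the text.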

Stemming

Finding root words in Nepali is a complex task. However, a satisfactory result can be achieved by slicing off trailing Devanagari characters (suffixes). For this purpose, a dictionary of trailing characters should be maintained, and each tokenized word in the corpus obtained from the pre-processing phase above should be checked for the presence of those trailing characters.
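A suffix-stripping stemmer of this kind can be sketched as below. The suffix list is illustrative only (a few common Nepali case markers and the plural marker, with both common spellings of the plural); it is not the dictionary used for this corpus. Stripping repeatedly, longest suffix first, and the minimum stem length of 2 characters are also assumptions, added so that short words such as "आमा" are not reduced to a single character.

```python
# Illustrative suffix dictionary (NOT the author's list).
SUFFIXES = sorted(
    ["हरू", "हरु", "को", "का", "की", "ले", "लाई", "मा", "बाट", "सँग"],
    key=len,
    reverse=True,  # try longer suffixes first
)
MIN_STEM = 2  # assumed minimum stem length, in code points


def stem(word: str) -> str:
    """Repeatedly slice off known trailing characters until none match."""
    changed = True
    while changed:
        changed = False
        for suffix in SUFFIXES:
            if word.endswith(suffix) and len(word) - len(suffix) >= MIN_STEM:
                word = word[: -len(suffix)]
                changed = True
                break  # restart with the longest suffixes on the shorter word
    return word
```

With this list, `stem("नेपालको")` gives `"नेपाल"` and `stem("केटाहरूले")` gives `"केटा"` (first `ले`, then `हरू`). Like any rule-based stripper, it can over-stem words that happen to end in one of these character sequences.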

The NLTK library does not support the Nepali language out of the box. However, a small workaround makes it possible to use NLTK's tokenize functions on the Nepali text corpus. In Nepali, sentences are separated by any of these characters: the purnabiram (।), the question mark (?) and the exclamation mark (!). Replacing these characters with a dot (.) lets NLTK tokenize the corpus at both the sentence and the word level.
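The delimiter workaround is sketched below in plain Python, so that it runs without NLTK or its Punkt tokenizer data. It assumes the purnabiram is the Devanagari danda, U+0964. `split_sentences` and `split_words` are hypothetical, simplified stand-ins for `nltk.sent_tokenize` and `nltk.word_tokenize`; with NLTK and its tokenizer data installed, those functions could be applied to the output of `normalize_delimiters` instead.

```python
# Nepali sentence delimiters: purnabiram (U+0964), question mark, exclamation mark.
DELIMITERS = ("\u0964", "?", "!")


def normalize_delimiters(text: str) -> str:
    """Replace every Nepali sentence delimiter with a dot, as described above."""
    for delimiter in DELIMITERS:
        text = text.replace(delimiter, ".")
    return text


def split_sentences(text: str) -> list[str]:
    """Simplified sentence splitter on the normalized text (drops empty pieces)."""
    return [s.strip() for s in normalize_delimiters(text).split(".") if s.strip()]


def split_words(sentence: str) -> list[str]:
    """Simplified whitespace word tokenizer."""
    return sentence.split()
```

For example, `split_sentences("म घर जान्छु। तिमी कहाँ जान्छौ? राम्रो!")` returns `["म घर जान्छु", "तिमी कहाँ जान्छौ", "राम्रो"]`.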

Word Vectors

The text corpus was used to find the most frequently used words (stop words) in Nepali: the 1500 most frequent words were extracted, and tokenized words in the corpus that appeared in this stop-word list were removed. After stemming and stop-word removal, the pre-processed corpus was ready for computing word vectors.
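The frequency-based stop-word step can be sketched with `collections.Counter`. This assumes the corpus is already tokenized into a list of sentences, each a list of words; the function names are hypothetical, and `n=1500` matches the cutoff stated above. Ties at the cutoff are broken by `Counter.most_common`'s first-seen order, which the text does not specify.

```python
from collections import Counter


def top_frequent_words(sentences: list[list[str]], n: int = 1500) -> set[str]:
    """Return the n most frequent tokens in the corpus, used here as stop words."""
    counts = Counter(word for sentence in sentences for word in sentence)
    return {word for word, _ in counts.most_common(n)}


def remove_stop_words(sentences: list[list[str]], stop_words: set[str]) -> list[list[str]]:
    """Drop every token that appears in the stop-word set."""
    return [[word for word in sentence if word not in stop_words] for sentence in sentences]
```

On a toy corpus `[["म", "घर", "जान्छु"], ["म", "बजार", "जान्छु"], ["म", "पढ्छु"]]` with `n=2`, the stop words are `{"म", "जान्छु"}` and the filtered corpus is `[["घर"], ["बजार"], ["पढ्छु"]]`.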

After pre-processing, the text corpus contained 6.5+ million sentences and 90+ million words. The 300-dimensional word vectors (Word2Vec) were computed using Gensim with the following settings: architecture: Continuous Bag-of-Words (CBOW); training algorithm: negative sampling with 15 negative samples; context (window) size: 10; minimum token count: 2. The resulting Word2Vec model for Nepali is a 1.8 GB text (.txt) file encoded in UTF-8, so it should be loaded with the binary option set to false.
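The settings above map onto Gensim's `Word2Vec` keyword arguments roughly as follows. This sketch assumes Gensim 4.x (where the dimension argument is `vector_size`; Gensim 3.x called it `size`) and leaves every other parameter at its default, which may differ from the original run. Gensim is imported inside the functions, so the settings can be inspected without it installed.

```python
# Training settings from the text, as Gensim 4.x Word2Vec keyword arguments.
WORD2VEC_PARAMS = {
    "vector_size": 300,  # 300-dimensional vectors
    "sg": 0,             # 0 selects Continuous Bag-of-Words (CBOW)
    "hs": 0,             # no hierarchical softmax, so negative sampling is used
    "negative": 15,      # 15 negative (noise) words per positive example
    "window": 10,        # context window size
    "min_count": 2,      # ignore tokens seen fewer than 2 times
}


def train_word2vec(sentences):
    """Train on an iterable of token lists (requires gensim)."""
    from gensim.models import Word2Vec
    return Word2Vec(sentences=sentences, **WORD2VEC_PARAMS)


def load_vectors(path):
    """Load vectors saved in the plain-text word2vec format (binary=False)."""
    from gensim.models import KeyedVectors
    return KeyedVectors.load_word2vec_format(path, binary=False, encoding="utf-8")
```

After training, `model.wv.save_word2vec_format("nepali_vectors.txt", binary=False)` would write a text model of the same kind; the file name here is hypothetical.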

Instructions:

Here is a quick way to load the corpus (.txt file) in Python:

```python
# Read the whole corpus into memory as a single string
filename = 'compiled.txt'

with open(filename, encoding="utf-8") as file:
    text = file.read()
```
