DARai: Daily Activity Recordings for AI and ML applications

- Citation Author(s):

- Ghazal Kaviani (Georgia Institute of Technology)

- Yavuz Yarici (Georgia Institute of Technology)

- Mohit Prabhushankar (Georgia Institute of Technology)

- Ghassan AlRegib (Georgia Institute of Technology)

- Mashhour Solh (Amazon)

- Ameya Patil (Amazon)

- Submitted by:

- Ghassan AlRegib

- Last updated:

- DOI:

- 10.21227/ecnr-hy49

888 views

- Categories:

- Keywords:

Abstract

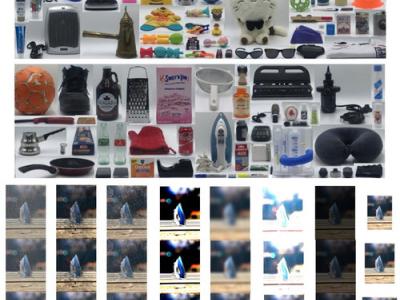

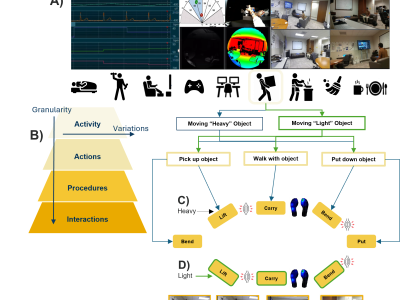

The DARai dataset is a comprehensive multimodal multi-view collection designed to capture daily activities in diverse indoor environments. This dataset incorporates 20 heterogeneous modalities, including environmental sensors, biomechanical measures, and physiological signals, providing a detailed view of human interactions with their surroundings. The recorded activities cover a wide range, such as office work, household chores, personal care, and leisure activities, all set within realistic contexts. The structure of DARai features a hierarchical annotation scheme that classifies human activities into multiple levels: activities, actions, procedures, and interactions. This taxonomy aims to facilitate a thorough understanding of human behavior by capturing the variations and patterns inherent in everyday activities at different levels of granularity. DARai is intended to support research in fields such as activity recognition, contextual computing, health monitoring, and human-machine collaboration. By providing detailed, contextual, and diverse data, this dataset aims to enhance the understanding of human behavior in naturalistic settings and contribute to the development of smart technology applications.

More information: https://alregib.ece.gatech.edu/software-and-datasets/darai-daily-activity-recordings-for-artificial-intelligence-and-machine-learning/

Code repository: https://github.com/olivesgatech/DARai

Instructions:

The dataset is organized by data modality: each modality is a top-level folder, and within each modality folder the data are grouped by Activity label (level 1).

Some modalities are bundled into super groups, each containing at least two data modality folders. For some groups, such as the bio monitors, all four data modalities are provided under the group folder.

    Local Dataset Path/
    └── Modality/
        └── Activity Label/
            └── View/
                └── data samples

You can download each group of modalities separately and use it in your machine learning or deep learning pipeline.
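As a minimal sketch of how the folder layout above could feed a pipeline, the following Python function walks one modality folder and collects an (activity label, view, file path) record for every sample. The function name `index_samples` is hypothetical and not part of the DARai code. It assumes the Modality / Activity Label / View / data samples nesting described here and that samples are plain files; for a super group, point it at an individual modality folder inside the group.

```python
from pathlib import Path


def index_samples(dataset_root, modality):
    """Collect (activity_label, view, sample_path) records for one modality.

    Assumes the layout <dataset_root>/<modality>/<activity>/<view>/<sample>.
    Entries that are not directories at the activity or view level, and
    entries that are not files at the sample level, are skipped.
    """
    modality_dir = Path(dataset_root) / modality
    records = []
    for activity_dir in sorted(p for p in modality_dir.iterdir() if p.is_dir()):
        for view_dir in sorted(p for p in activity_dir.iterdir() if p.is_dir()):
            for sample in sorted(p for p in view_dir.iterdir() if p.is_file()):
                records.append((activity_dir.name, view_dir.name, sample))
    return records
```

For example, `index_samples("/path/to/DARai", "IMU")` would return one record per file under the extracted `IMU` folder, where both the root path and the extracted folder name are placeholders for your local copy. The activity label in each record can then serve as the level 1 class label.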

On a Linux-based system, reassemble the split zip file for the Depth and IR data by concatenating its parts in order with the following command:

    cat part_1 part_2 part_3 > yourfile_reassembled.zip
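On systems without `cat`, the same byte-level concatenation can be sketched in Python. The function name `reassemble_parts` is hypothetical, and the part file names are placeholders, as in the command above. The sketch assumes the parts are raw byte splits of a single zip archive and must be listed in their original order.

```python
import shutil
from pathlib import Path


def reassemble_parts(part_paths, output_path):
    """Concatenate split archive parts, in the order given, into one file."""
    output_path = Path(output_path)
    with open(output_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                # Stream in chunks so very large parts never sit fully in memory.
                shutil.copyfileobj(src, out)
    return output_path
```

After reassembly, `zipfile.is_zipfile(output_path)` from the standard library offers a quick sanity check that the result is recognized as a zip archive before extraction.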

Dataset Files

- IMU.zip (Size: 35.41 MB)

- Gaze.zip (Size: 77.22 MB)

- BioMonitor.zip (Size: 1.21 GB)

- Insole Pressure.zip (Size: 5.33 GB)

- Environment.zip (Size: 304.32 KB)

- Audio.zip (Size: 18.14 MB)

- Forearm EMG.zip (Size: 513.78 MB)

- Stationary_IMU.zip (Size: 19.21 MB)

- radar.zip (Size: 4.08 GB)

- Depth_Confidence.zip (Size: 53.13 GB)

- RGB_pt1_compressed.zip (Size: 269.58 GB)

- RGB_pt2_compressed.zip (Size: 109.44 GB)

- Depth_pt_1.zip (Size: 199 GB)

- Depth_pt_2.zip (Size: 199 GB)

- Depth_pt_3.zip (Size: 157.42 GB)

- hierarchy_labels.zip (Size: 289.93 KB)