Data for a high-throughput method to deliver targeted optogenetic stimulation to moving C. elegans populations

- Citation Author(s): Mochi Liu (Princeton University)

- Submitted by: Andrew Leifer

- DOI: 10.21227/t6b0-bc36

Abstract

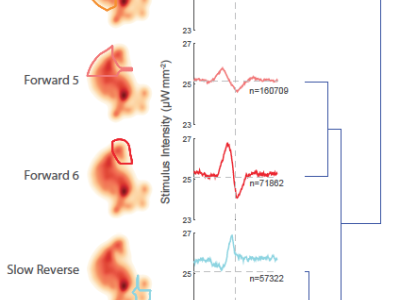

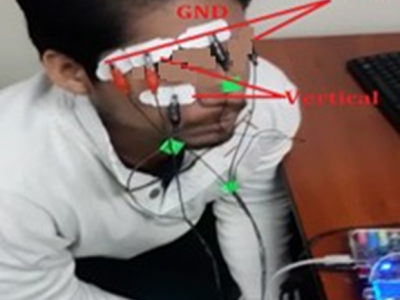

Here we present recordings from a new high-throughput instrument for optogenetically manipulating neural activity in moving C. elegans. The recordings accompany the manuscript Liu, Kumar, Sharma and Leifer, “A high-throughput method to deliver targeted optogenetic stimulation to moving C. elegans populations,” forthcoming in PLOS Biology. Specifically, the new instrument enables simultaneous closed-loop light delivery to specified targets in populations of moving Caenorhabditis elegans. The instrument addresses three technical challenges: it delivers targeted illumination to specified regions of the animal's body, such as its head or tail; it automatically delivers stimuli triggered by the animal's behavior; and it achieves high throughput by targeting many animals simultaneously. The instrument was used to optogenetically probe the animal's behavioral response to competing mechanosensory stimuli in the anterior and posterior soft touch receptor neurons. Details of the instrument can be found in the dissertation Mochi Liu, “C. elegans Behaviors and Their Mechanosensory Drivers,” Princeton University, 2020. Associated analysis code is located at https://github.com/leiferlab/liu-closed-loop-code

Instructions:

This is the raw data corresponding to Liu, Kumar, Sharma and Leifer, "A high-throughput method to deliver targeted optogenetic stimulation to moving C. elegans populations," available at https://arxiv.org/abs/2109.05303 and forthcoming in PLOS Biology.

The code used to analyze this data is available on GitHub at https://github.com/leiferlab/liu-closed-loop-code.git

Accessing

This dataset is publicly hosted on IEEE DataPort. It is more than 300 GB of data and contains a very large number of individual image frames. We have bundled the data into one large .tar bundle. Download the .tar bundle and extract it before use. Consider using an AWS client instead of your web browser to download the bundle, as we have heard reports that downloading such large files through the browser can be problematic.
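The extraction step can be scripted. The following is a minimal Python sketch, not part of the authors' tooling; the function name `extract_bundle` and the paths in the usage note are hypothetical. It uses only the standard-library `tarfile` module and, where the running Python version supports extraction filters, asks for the `"data"` filter so that unsafe member paths are rejected.

```python
import tarfile
from pathlib import Path


def extract_bundle(tar_path, dest_dir):
    """Extract a .tar bundle into dest_dir and return the top-level entry names.

    Both arguments are paths chosen by the user; nothing here is specific
    to the dataset beyond it being a .tar archive.
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(tar_path, "r:*") as bundle:
        if hasattr(tarfile, "data_filter"):
            # Newer Python versions can reject unsafe member paths on extraction.
            bundle.extractall(dest, filter="data")
        else:
            # Older Python versions have no filter argument.
            bundle.extractall(dest)
    # Report whatever now sits directly under the destination directory.
    return sorted(entry.name for entry in dest.iterdir())
```

A call such as `extract_bundle("bundle.tar", "extracted_data")` (both names are placeholders) would unpack the archive and list what sits at the top level. Because the bundle is more than 300 GB, the destination disk needs room for the extracted files in addition to the archive itself.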

Post-processing

As provided, this dataset includes only the raw camera images and other raw output of the real-time instrument used to optogenetically activate the animal and record its motion. To extract final tracks, final centerlines, final velocity, etc., these raw outputs must be processed.

Post-processing can be done by running the /ProcessDateDirectory.m MATLAB script from https://github.com/leiferlab/liu-closed-loop-code.git. Note that post-processing was optimized to run in parallel on a high-performance computing cluster. It is computationally intensive and also requires a very large amount of RAM.

Repository Directory Structure

Recordings from the instrument are organized into directories by date, which we call "Date directories."

Each experiment is its own timestamped folder within a date directory, and it contains the following files:

- `camera_distortion.png` contains camera spatial calibration information in the image metadata
- `CameraFrames.mkv` is the raw camera images compressed with H.265
- `labview_parameters.csv` is the settings used by the instrument in the real-time experiment
- `labview_tracks.mat` contains the real-time tracking data in a MATLAB-readable HDF5 format
- `projector_to_camera_distortion.png` contains the spatial calibration information that maps projector pixel space into camera pixel space
- `tags.txt` contains tagged information for the experiment and is used to organize and select experiments for analysis
- `timestamps.mat` contains timing information saved during the real-time experiments, including closed-loop lag
- `ConvertedProjectorFrames` folder contains png compressed stimulus images converted to the camera's frame of reference
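The file list above can be turned into a quick completeness check, which is useful after extracting a bundle of this size. This is a minimal sketch in Python rather than part of the analysis repository. The file and folder names come from the list above; the function name `missing_experiment_entries` is hypothetical.

```python
from pathlib import Path

# Names taken from the file list in this section.
EXPECTED_FILES = (
    "camera_distortion.png",
    "CameraFrames.mkv",
    "labview_parameters.csv",
    "labview_tracks.mat",
    "projector_to_camera_distortion.png",
    "tags.txt",
    "timestamps.mat",
)
EXPECTED_FOLDERS = ("ConvertedProjectorFrames",)


def missing_experiment_entries(experiment_dir):
    """Return the expected files and folders that are absent from experiment_dir."""
    root = Path(experiment_dir)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    missing += [name for name in EXPECTED_FOLDERS if not (root / name).is_dir()]
    return missing
```

An empty list means every listed entry is present. The check only tests for existence and does not validate file contents.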

Naming convention for individual recordings

A typical folder is 20210624_RunRailsTriggeredByTurning_Sandeep_AML67_10ulRet_red

- `20210624` - Date the dataset was collected, in format YYYYMMDD.
- `RunRailsTriggeredByTurning` - Experiment type describes the type of experiment. For example, this experiment was performed in closed loop triggered on turning. Open loop experiments are called "RunFullWormRails" experiments for historical reasons.
- `Sandeep` - Name of the experimenter.
- `AML67` - C. elegans strain name. Note strain AML470 corresponds to internal strain name "AKS_483.7.e".
- `10ulRet` - Concentration of all-trans-retinal used.
- `red` - LED color used to stimulate. Always red for this manuscript.
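The naming convention above can be parsed programmatically when selecting recordings. The following Python sketch assumes that every folder name consists of exactly the six underscore-separated fields described above and that no field contains an underscore itself. Both are assumptions drawn from the single example, not guarantees about every folder in the dataset. The names `RecordingName` and `parse_recording_name` are hypothetical.

```python
from typing import NamedTuple


class RecordingName(NamedTuple):
    """Fields of a recording folder name, in the order they appear."""
    date: str             # kept as a string, e.g. "20210624"
    experiment_type: str  # e.g. "RunRailsTriggeredByTurning"
    experimenter: str
    strain: str
    retinal: str          # e.g. "10ulRet"
    led_color: str


def parse_recording_name(folder_name):
    """Split a folder name into its six fields.

    Assumes exactly six underscore-separated fields; raises ValueError otherwise.
    """
    parts = folder_name.split("_")
    if len(parts) != 6:
        raise ValueError(f"expected 6 fields, got {len(parts)}: {folder_name!r}")
    return RecordingName(*parts)
```

For example, `parse_recording_name("20210624_RunRailsTriggeredByTurning_Sandeep_AML67_10ulRet_red").strain` evaluates to `"AML67"`. The date is left unparsed so that the sketch makes no assumption about the number of digits used in a given folder.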

Regenerating figures

Once post-processing has been run, figures from the manuscript can be generated using scripts in https://github.com/leiferlab/liu-closed-loop-code.git

Please refer to instructions_to_generate_figures.csv for instructions on which MATLAB script to run to generate each specific figure.