Tracking Neurons in a Moving and Deforming Brain Dataset

- Submitted by: Andrew Leifer

- DOI: 10.21227/H2901H

Abstract

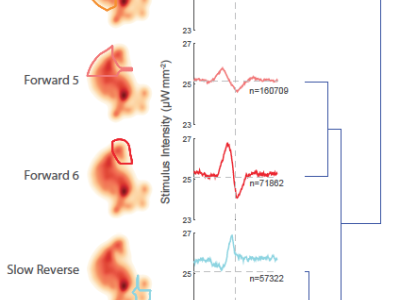

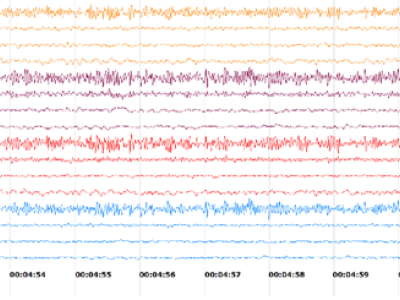

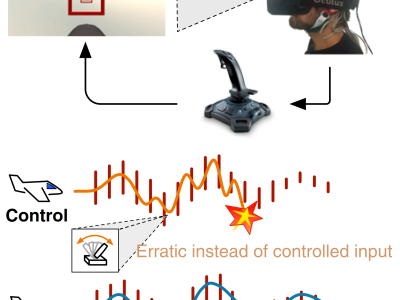

Advances in optical neuroimaging techniques now allow neural activity to be recorded with cellular resolution in awake and behaving animals. Brain motion in these recordings poses a unique challenge: the location of individual neurons must be tracked in 3D over time to accurately extract single-neuron activity traces. Recordings from small invertebrates like C. elegans are especially challenging because they undergo very large brain motion and deformation during animal movement. Here we present a dataset to accompany an automated computer vision pipeline we've developed to reliably track populations of neurons with single-neuron resolution in the brain of a freely moving C. elegans undergoing large motion and deformation.

Two calcium imaging recordings from freely moving C. elegans are provided, Worm 1 and Worm 2, corresponding to the recordings discussed in the accompanying publication: Nguyen JP, Linder AN, Plummer GS, Shaevitz JW, Leifer AM (2017) Automatically tracking neurons in a moving and deforming brain. PLoS Comput Biol 13(5): e1005517. https://doi.org/10.1371/journal.pcbi.1005517

Worm 1 contains hand-tracked annotations of individual neuron identities for comparison to the automated algorithm. When applied to Worm 1, our algorithm consistently finds more neurons more quickly than manual or semi-automated approaches. A recording of Worm 2 is also provided that had never been analyzed by hand. When our analysis pipeline is applied to that 8-minute calcium imaging recording, we identify and track 156 neurons during animal behavior.

Instructions:

This folder contains datasets that accompany the publication:

Nguyen JP, Linder AN, Plummer GS, Shaevitz JW, Leifer AM (2017) Automatically tracking neurons in a moving and deforming brain. PLoS Comput Biol 13(5): e1005517. https://doi.org/10.1371/journal.pcbi.1005517

and correspond to the analysis software located at: https://github.com/leiferlab/NeRVEclustering.git

The data for each worm consists of 3 video streams, each recorded open loop on a different clock. The videos can be synced using the timing of a camera flash. Both datasets shown in the paper are present. Worm 1 is a shorter recording and includes manually annotated neuron locations as well as automated points. Worm 2 is a longer recording.

How to Download

The dataset is available in two ways: as a large all-in-one 0.3 TB tarball available for download via the web interface, and as a set of individual files that are available for browsing or downloading via Amazon S3.

Quick Summary

The Demo Folder has 5 demo scripts that give a flavor of each step of the analysis. The analysis was originally designed to run on a computing cluster and may not run well on local machines. The demo code, in contrast, is designed to run locally. Each demo takes some files from the ouputFiles folder and moves them into the main data directory to do a small part of the analysis. The Python code in the repo is not needed for these demos. The demos skip part 0, which is used for timing alignment of videos.

Details

Raw Videos

These are the raw video inputs to the analysis pipeline.

sCMOS_Frames_U16_1200x600.dat - Binary image file for HiMag images. Stream of 1200 x 600 uint16 images. Each image is created by two image channels projected onto an sCMOS camera. The first half of the image (1-600) is the RFP image, the second half (601-1200) is the GCaMP6s image. Both fluorophores are expressed pan-neuronally in the nucleus. Images are taken at 200 Hz, and the worm is scanned with a 3 Hz triangle wave.
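As a rough sketch of how one frame could be pulled out of the .dat stream without MATLAB, the snippet below seeks to a frame and splits it into the two channels. It rests on assumptions that are not stated in this description: that the file is little-endian with no header, and that each contiguous run of 1200 values spans both channels (the layout a MATLAB column-major reshape to 1200 x 600 would imply). The function names `read_frame` and `split_channels` are hypothetical and are not part of the NeRVE code.

```python
import sys
from array import array

FRAME_LONG = 1200    # axis that holds both channels (from the file name)
FRAME_SHORT = 600    # the other image axis
PIXELS_PER_FRAME = FRAME_LONG * FRAME_SHORT
BYTES_PER_FRAME = PIXELS_PER_FRAME * 2  # uint16 = 2 bytes per pixel


def read_frame(path, frame_index):
    """Return one frame as a flat array of uint16 values (0-based index)."""
    pixels = array("H")
    with open(path, "rb") as f:
        f.seek(frame_index * BYTES_PER_FRAME)
        pixels.fromfile(f, PIXELS_PER_FRAME)  # raises EOFError past the end
    if sys.byteorder != "little":  # assumption: the file is little-endian
        pixels.byteswap()
    return pixels


def split_channels(pixels):
    """Split a flat frame into (rfp, gcamp) flat arrays.

    Assumption: the buffer is 600 runs of 1200 values, and within each
    run the first 600 values are RFP and the last 600 are GCaMP6s.
    """
    half = FRAME_LONG // 2
    rfp, gcamp = array("H"), array("H")
    for start in range(0, PIXELS_PER_FRAME, FRAME_LONG):
        rfp.extend(pixels[start:start + half])
        gcamp.extend(pixels[start + half:start + FRAME_LONG])
    return rfp, gcamp
```

If the split images look interleaved or scrambled, the layout assumption is the first thing to revisit.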

AVI files with behavior - Low magnification dark field video.

AVI files with fluorescence - Low magnification fluorescent images.

*HUDS versions of the avi have additional data on the video frame, such as the frame number and program status.

Raw Text files

These are the additional inputs to the analysis pipeline. These files contain timing information for every frame of each of the video feeds. They also contain information about the positions of the stage and the objective. This information, along with the videos themselves, is used to align the timing of all of the videos. Several camera flashes are used throughout the recording.
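To illustrate the idea of syncing two clocks from shared flashes, here is a minimal sketch that fits a linear map between the flash times seen on two streams. This is one possible approach under the assumption that the same flashes have already been matched one-to-one across streams; it is not necessarily how the pipeline's timing alignment code works, and `fit_clock_map` is a hypothetical name.

```python
def fit_clock_map(flash_times_a, flash_times_b):
    """Least-squares fit of t_b = scale * t_a + offset from matched flashes.

    Both inputs are sequences of flash times (seconds) on clocks A and B,
    in the same order. With a single flash only an offset can be estimated.
    """
    n = len(flash_times_a)
    if n == 0 or n != len(flash_times_b):
        raise ValueError("need the same non-zero number of flashes per clock")
    if n == 1:
        return 1.0, flash_times_b[0] - flash_times_a[0]
    mean_a = sum(flash_times_a) / n
    mean_b = sum(flash_times_b) / n
    var_a = sum((a - mean_a) ** 2 for a in flash_times_a)
    cov = sum((a - mean_a) * (b - mean_b)
              for a, b in zip(flash_times_a, flash_times_b))
    scale = cov / var_a
    offset = mean_b - scale * mean_a
    return scale, offset


def to_clock_b(t_a, scale, offset):
    """Convert a time on clock A to the corresponding time on clock B."""
    return scale * t_a + offset
```

A scale close to 1 means the two clocks run at nearly the same rate and mostly differ by a constant offset.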

labJackData.txt - Raw outputs from LabVIEW for the stage, the piezo that drives the objective, the sCMOS camera, and the function generator (FG), taken at 1kHz. The objective is mounted on a piezo stage that is driven by the output voltage of the function generator. The 1kHz clock acts as the timing for each event.

Columns:

FuncGen - Programmed output from FG, a triangle wave at 6 Hz.

Voltage - Actual FG output

Z Sensor - voltage from piezo, which controls Z position of objective.

FxnGen Sync - Trigger output from FG, triggers at the center of the triangle.

Camera Trigger - Voltage from HiMag camera; down sweeps indicate that a frame has been grabbed from the HiMag camera.

Frame Count - Number of frames that have been grabbed from the HiMag camera. Not all grabbed frames are saved; the saved frames are indicated in the Saved Frames field of the next text file, CameraFrameData.txt.

Stage X - X position from stage

Stage Y - Y position from stage
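As an illustration of how the Camera Trigger column might be turned into frame-grab times, the sketch below finds falling edges in a sampled voltage trace and converts sample indices to seconds on the 1 kHz clock. It assumes that a "down sweep" is a crossing from above to below some threshold. The threshold value and the function names are hypothetical, and how the text file is parsed into a list of numbers is left out because its exact layout is not described here.

```python
def falling_edge_indices(voltage, threshold):
    """Indices i where the trace goes from >= threshold to < threshold."""
    return [
        i for i in range(1, len(voltage))
        if voltage[i - 1] >= threshold and voltage[i] < threshold
    ]


def edge_times_seconds(indices, sample_rate_hz=1000.0):
    """Convert sample indices to seconds, assuming a fixed sample rate."""
    return [i / sample_rate_hz for i in indices]
```

For example, `edge_times_seconds(falling_edge_indices(trace, 2.5))` would give one timestamp per detected down sweep, where 2.5 is only a placeholder threshold.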

CameraFrameData.txt - Metadata from each grabbed frame for HiMag images, saved in LabVIEW. The timing for each of these frames can be pulled from the labJackData.txt.

Columns:

Total Frames - Total number of grabbed frames

Saved Frames - The current save index, not all grabbed frames are saved. If this increments, the frame has been saved.

DC offset - This is the signal sent to the FG to translate center of the triangle wave to keep the center of the worm in the middle of the wave.

Image STdev - Standard deviation of all pixel intensities in each image (used for tracking in the axial dimension).
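The Saved Frames rule above (a frame is saved when the counter increments) can be sketched as follows. This is a minimal illustration that assumes the column has already been read into a list of integers; `saved_row_indices` is a hypothetical helper, and the first row is left unclassified because it has no predecessor to compare against.

```python
def saved_row_indices(saved_counter):
    """Rows (0-based, starting at 1) where the Saved Frames counter rose."""
    return [
        i for i in range(1, len(saved_counter))
        if saved_counter[i] > saved_counter[i - 1]
    ]
```

The returned row indices could then be used to look up Total Frames, DC offset, or Image STdev for only those frames that ended up in the .dat file.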

.yaml files - Data from each grabbed frame for both low mag .avi files

Many of the data fields here are anachronistic holdovers, but the fields of main interest are FrameNumber, Selapsed (seconds elapsed), and msRemElapsed (ms elapsed). These are parsed to determine the timing of each frame.
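A small sketch of how a per-frame time could be computed from these two fields is shown below. It assumes that msRemElapsed is the millisecond remainder beyond the whole seconds in Selapsed, which the field name suggests but this description does not confirm. The dictionary layout of each record and the function names are also hypothetical.

```python
def frame_time_seconds(s_elapsed, ms_rem_elapsed):
    """Combine whole seconds and a millisecond remainder into seconds."""
    return s_elapsed + ms_rem_elapsed / 1000.0


def frame_times(records):
    """Map FrameNumber to elapsed seconds for a list of per-frame dicts."""
    return {
        r["FrameNumber"]: frame_time_seconds(r["Selapsed"], r["msRemElapsed"])
        for r in records
    }
```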

Processed mat files

These files contain processed output from the analysis pipeline at different steps.

STEP 0: Output of Timing alignment Code.

hiResData.mat - data for each frame of HiMag video. This contains the information about the imaging plane, position of the stage, timing, and which volume each frame belongs to.

Fields:

Z - z voltage from piezo for each frame, indicating the imaging plane.

frameTime - time of each frame after flash alignment in seconds.

stackIdx - number of the stack each frame belongs to. Each volume recorded is given an increasing number starting at 1 for the first volume. For example, the first 40 images will belong to stackIdx=1, then the next 40 will have stackIdx=2, and so on.

imSTD - standard dev of each frame

xpos and ypos - stage position for each frame

flashLoc - index of the frame of each flash

*Note: some of these fields have an extra point at the end; remove it so that all fields are the same length.
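Once the fields are available as plain sequences (for example after exporting them from MATLAB), trimming the extra trailing point and grouping frames into volumes by stackIdx could look like the sketch below. The helper names are hypothetical, and loading the .mat file itself is not shown.

```python
from collections import defaultdict


def trim_to_common_length(fields):
    """Drop trailing points so every per-frame field has the same length."""
    n = min(len(values) for values in fields.values())
    return {name: list(values[:n]) for name, values in fields.items()}


def frames_by_stack(stack_idx):
    """Map each stackIdx value to the 0-based frame indices it contains."""
    groups = defaultdict(list)
    for frame, stack in enumerate(stack_idx):
        groups[stack].append(frame)
    return dict(groups)
```

With the frames grouped this way, all images that make up one recorded volume can be read together from the .dat file.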

*flashTrack.mat - 1xN vector where N is the number of frames in the corresponding video. The values of flashTrack are the mean of each image. It will show a clear peak when a flash is triggered. This can be used to align the videos.
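One way to locate the flash peaks in such a trace is a robust threshold on the per-frame mean, sketched below. This uses the median and the median absolute deviation as a stand-in technique and is not taken from the pipeline; the multiplier `k` and the function name are hypothetical choices.

```python
from statistics import median


def find_flash_frames(flash_track, k=10.0):
    """Return 0-based frame indices whose mean brightness is an outlier.

    A frame is flagged when it exceeds median + k * MAD, where MAD is the
    median absolute deviation of the trace.
    """
    baseline = median(flash_track)
    spread = median(abs(v - baseline) for v in flash_track)
    threshold = baseline + k * spread
    return [i for i, v in enumerate(flash_track) if v > threshold]
```

A single flash may span more than one frame, so consecutive flagged indices may need to be merged before the flashes are matched across videos.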

*YAML.mat - 1xN vector where N is the number of frames in the corresponding video. Each element of the mcdf has all of the metadata for each frame of the video. Using this requires code from https://github.com/leiferlab/MindControlAccessUtils.git github repo.

alignments.mat - Set of affine transformations between video feeds. Each has a "tconcord" field that works with MATLAB's imwarp function.

Fields:

lowresFluor2BF - Alignment from low mag fluorescent video to low mag behavior video

S2AHiRes - Alignment from HiMag Red channel to HiMag green channel. This alignment is prior to cropping of the HiMag Red channel.

Hi2LowResF - Alignment from HiMag Red to low mag fluorescent video
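For readers who want to map point coordinates rather than warp whole images, the sketch below applies a 3x3 affine matrix to (x, y) points. It assumes the transformation can be exported as a matrix T in the row-vector convention that MATLAB's affine2d objects use, [u v 1] = [x y 1] * T. Whether the "tconcord" field stores its transformation in exactly this form is an assumption, and `apply_affine` is a hypothetical helper.

```python
def apply_affine(points, T):
    """Map (x, y) points with a 3x3 matrix T, using [u v 1] = [x y 1] * T.

    T is a nested list in row-major order; its last row holds the
    translation in this convention.
    """
    mapped = []
    for x, y in points:
        u = x * T[0][0] + y * T[1][0] + T[2][0]
        v = x * T[0][1] + y * T[1][1] + T[2][1]
        mapped.append((u, v))
    return mapped
```

Note that MATLAB pixel coordinates are 1-based, so coordinates taken from 0-based arrays would need to be shifted by one before and after the mapping.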

STEP 1: WORM CENTERLINE DETECTION

initializeCLWorkspace.m (done locally for manual centerline initialization)

Python submission code:

submitWormAnalysisCenterline.py

Matlab analysis code:

clusterWormCenterline.m

File Outputs: CLstartworkspace.mat, initialized points and background images for darkfield images

CL_files folder, containing partial CL.mat files

BehaviorAnalysis folder, containing the centerline.mat file with XY coordinates for each image.

* due to poor image quality of dark field images, it may be necessary to use some of the code developed by AL to manually adjust centerlines

** The Worm 1 centerline was found using a different method, so STEP 1 can be skipped for Worm 1.

STEP 2: STRAIGHTEN AND SEGMENTATION

Python submission code:

submitWormStraightening.py

Matlab analysis code:

clusterStraightenStart.m

clusterWormStraightening.m

File Outputs: startWorkspace.mat, initial workspace used during straightening for all volumes

CLStraight* folder, folder containing all saved straightened tif files and results of segmentation.

STEP 3: NEURON REGISTRATION VECTOR ENCODING AND CLUSTERING

Python submission code:

submitWormAnalysisPipelineFull.py

Matlab analysis code:

clusterWormTracker.m

clusterWormTrackCompiler.m

File Outputs: TrackMatrixFolder, containing all registrations of sample volumes with reference volumes.

pointStats.mat, structure containing all coordinates from all straightened volumes along with a trackIdx, the result of initial tracking of points.

STEP 4: ERROR CORRECTION

Python submission code:

submitWormAnalysisPipelineFull.py

Matlab analysis code:

clusterBotChecker.m

clusterBotCheckCompiler.

File Outputs: botCheckFolder, folder containing all coordinate guesses for all times, one mat file for each neuron.

pointStatsNew.mat, matfile containing the refined trackIdx after error correction.

STEP 5: SIGNAL EXTRACTION

Python submission code:

submitWormAnalysisPipelineFull.py

Matlab analysis code:

fiducialCropper3.m

File Output: heatData.mat, all signal results from extracting signal from the coordinates.

Simple dat file Viewer

HiMag fluorescent data files are stored as a stream of binary uint16. The gui "ScanBinaryImageStack" is a simple MATLAB program to view these images. The GUI can be found in the NeRVE git repo at https://github.com/leiferlab/NeRVEclustering.git. Feel free to add features. To view images, click the button "Select Folder", and select the .dat file. You can now use the slider in the gui or the arrow keys on your keyboard to view different images. You can change the step size to change how much each arrow push increments. You can also change the bounds of the slider away from the default of first frame/last frame. The save snapshot button saves a tiff of the current frame into the directory of the dat file. The size of the image must be specified in the image size fields. All .dat files included are 1200x600 pixels. The program also works if .avi files are selected. In this case, the size of the images is determined automatically.