Open Access

Electroencephalogram (EEG) recordings obtained when simultaneously presenting audio stimulations

- Submitted by: Eric Plourde

- Last updated: Mon, 08/12/2019 - 11:24

- DOI: 10.21227/e90n-sa08

- License: Creative Commons Attribution
1498 Views

Abstract

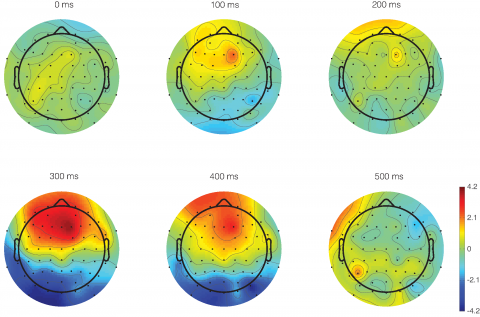

The dataset consists of EEG recordings obtained while subjects listened to different utterances: a, i, u, bed, please, sad. A limited number of EEG recordings were also obtained when the three vowels were corrupted by white and babble noise at an SNR of 0 dB. Recordings were performed on 8 healthy subjects.
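To illustrate what an SNR of 0 dB means for the corrupted vowels, here is a minimal Python sketch that scales a noise signal so that its average power equals that of the clean signal before adding the two. It is not the procedure used to build the dataset, which the description does not detail; the function names `mean_power` and `mix_at_snr`, the synthetic tone, and the 16 kHz sampling rate are all assumptions made for illustration.

```python
import math
import random


def mean_power(x):
    """Average power (mean of squared samples) of a sequence."""
    return sum(v * v for v in x) / len(x)


def mix_at_snr(signal, noise, snr_db):
    """Scale `noise` so that signal power / noise power equals the
    requested SNR in dB, then add it to `signal`.

    Returns (mixed, scaled_noise). Hypothetical helper, not part of
    the dataset's sample code.
    """
    if len(signal) != len(noise):
        raise ValueError("signal and noise must have the same length")
    p_noise = mean_power(noise)
    if p_noise == 0:
        raise ValueError("noise has zero power")
    # Target noise power is P_signal / 10^(SNR_dB / 10).
    target = mean_power(signal) / (10 ** (snr_db / 10))
    gain = math.sqrt(target / p_noise)
    scaled = [gain * n for n in noise]
    mixed = [s + n for s, n in zip(signal, scaled)]
    return mixed, scaled


# Synthetic stand-ins: a 220 Hz tone for the vowel, Gaussian white noise.
fs = 16000  # assumed audio sampling rate, not stated in the description
rng = random.Random(0)
vowel = [math.sin(2 * math.pi * 220 * t / fs) for t in range(fs)]
white = [rng.gauss(0.0, 1.0) for _ in range(fs)]

noisy, scaled_noise = mix_at_snr(vowel, white, snr_db=0.0)
snr_check = 10 * math.log10(mean_power(vowel) / mean_power(scaled_noise))
print(f"achieved SNR: {snr_check:.3f} dB")
```

At 0 dB the gain simply equalizes the two powers, so the noise is as strong as the speech. Babble noise would be mixed the same way, with a recording of overlapping talkers in place of the Gaussian samples.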

Recordings were performed at the Centre de recherche du Centre hospitalier universitaire de Sherbrooke (CRCHUS), Sherbrooke (Quebec), Canada. The EEG recordings were performed using an actiCAP active electrode system Version I and II (Brain Products GmbH, Germany) that includes 64 Ag/AgCl electrodes. The signal was amplified with BrainAmp MR amplifiers and recorded using the Vision Recorder software. The electrodes were positioned using a standard 10-20 layout.

Experiments were performed on 8 healthy subjects without any declared hearing impairment. Each session lasted approximately 90 minutes and was divided into 2 parts. The first part, lasting 30 minutes, consisted of fitting the cap on the subject and placing an electroconductive gel under each electrode to ensure proper contact between the electrode and the scalp. The second part, the listening and EEG acquisition, lasted approximately 60 minutes. During this part, the subjects had to stay still with eyes closed while avoiding any facial movement or swallowing. They had to remain concentrated on the audio signals for the full length of the experiment.

Audio signals were presented to the subjects through earphones while EEGs were recorded. During the experiment, each trial was repeated at least 80 times, in random order. Each trial lasted approximately 9 seconds, and the stimulus was presented at a random time within the trial. A 2-minute pause, during which the subjects could relax and stretch, was given after 5 minutes of trials.

Once the EEG signals were acquired, they were resampled at 500 Hz and band-pass filtered between 0.1 Hz and 45 Hz in order to extract the frequency bands of interest for this study. EEG signals were then segmented into 2-second intervals, with the stimulus occurring 0.5 seconds into each interval. If the signal amplitude exceeded a pre-defined 75 µV limit, the trial was marked for rejection.

Sample code is provided to read the dataset and generate ERPs. First run epoch_data.m for the specific subject, then run the mean_data.m file in the ERP folder. EEGLAB for MATLAB is required.
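The epoching, rejection, and averaging steps described above can be sketched in a few lines of Python. This is an independent illustration on synthetic data, not a port of the provided epoch_data.m and mean_data.m scripts, whose contents are not shown here. It assumes a single channel that has already been resampled to 500 Hz and band-pass filtered, assumes the rejection limit is expressed in microvolts, and uses hypothetical function names (`extract_epochs`, `reject_epochs`, `average_erp`) and made-up stimulus onsets.

```python
import random

FS = 500            # Hz, sampling rate after resampling (from the description)
EPOCH_S = 2.0       # epoch length in seconds
PRE_STIM_S = 0.5    # the stimulus occurs 0.5 s into each epoch
REJECT_UV = 75.0    # rejection limit, assumed to be in microvolts


def extract_epochs(signal_uv, onsets, fs=FS):
    """Cut one channel into 2 s epochs, each starting 0.5 s before a
    stimulus onset (onsets are sample indices)."""
    n_pre = int(round(PRE_STIM_S * fs))
    n_len = int(round(EPOCH_S * fs))
    epochs = []
    for onset in onsets:
        start = onset - n_pre
        stop = start + n_len
        if start < 0 or stop > len(signal_uv):
            continue  # skip epochs that would run off the recording
        epochs.append(signal_uv[start:stop])
    return epochs


def reject_epochs(epochs, limit_uv=REJECT_UV):
    """Keep only epochs whose absolute amplitude never exceeds the limit."""
    return [ep for ep in epochs if max(abs(v) for v in ep) <= limit_uv]


def average_erp(epochs):
    """Sample-by-sample mean across epochs, i.e. the ERP estimate."""
    if not epochs:
        raise ValueError("no epochs left to average")
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


# Synthetic single-channel recording: 30 s of low-amplitude noise (in µV).
rng = random.Random(1)
recording = [rng.gauss(0.0, 2.0) for _ in range(FS * 30)]
onsets = [1000, 4000, 7000, 10000]   # made-up stimulus onsets (samples)
recording[4100] = 200.0              # simulated artifact inside the 2nd epoch

epochs = extract_epochs(recording, onsets)
clean = reject_epochs(epochs)
erp = average_erp(clean)
print(f"{len(epochs)} epochs extracted, {len(clean)} kept, ERP length {len(erp)}")
```

With real data, the same three steps would be applied per channel and per stimulus type, and the filtering would be done beforehand with a dedicated signal-processing library.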

Dataset Files

EEG.zip (3.00 GB)

Open Access dataset files are accessible to all logged-in users. A free IEEE account is sufficient; IEEE Membership is not required.