Mixed Signals V2X Dataset

- Citation Author(s):

Katie Luo, Minh-Quan Dao, Zhenzhen Liu, Mark Campbell, Wei-Lun Chao, Kilian Weinberger, Ezio Malis, Vincent Fremont, Bharath Hariharan, Mao Shan, Stewart Worrall, Julie Stephany Berrio Perez

- Submitted by:

- Katie Luo

- DOI:

- 10.21227/d5bg-cb07

Abstract

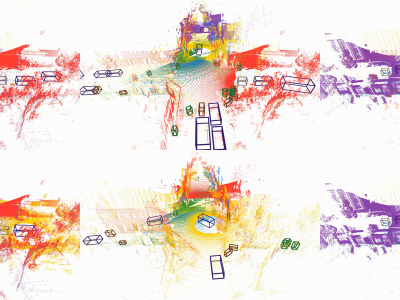

Vehicle-to-everything (V2X) collaborative perception has emerged as a promising way to overcome the limitations of single-vehicle perception systems. However, existing V2X datasets are limited in scope, diversity, and quality. To address these gaps, we present Mixed Signals, a comprehensive V2X dataset featuring 45.1k point clouds and 240.6k bounding boxes collected from three connected autonomous vehicles (CAVs) equipped with two different types of LiDAR sensors, plus a roadside unit with dual LiDARs. Our dataset provides precisely aligned point clouds and bounding box annotations across 10 classes, ensuring reliable data for training perception models. We provide a detailed statistical analysis of the dataset's quality and extensively benchmark existing V2X methods on it. The Mixed Signals V2X Dataset is one of the highest-quality large-scale datasets publicly available for V2X perception research, and it can be accessed at https://mixedsignalsdataset.cs.cornell.edu/.
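What "aligned point clouds" from several agents means in practice can be illustrated with a minimal early-fusion sketch. It assumes that each agent's scan comes with a 4x4 homogeneous agent-to-world pose matrix. The agent names, pose values, and the functions `transform_points` and `fuse_agents` are hypothetical illustrations. They are not part of the Mixed Signals devkit or its file format.

```python
# Minimal sketch (hypothetical, not the Mixed Signals devkit API): express each
# agent's LiDAR points in a shared world frame, then concatenate them.
# Assumption: every agent provides a 4x4 homogeneous agent-to-world pose.
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]


def transform_points(points: List[Point], pose: Matrix4) -> List[Point]:
    """Apply a 4x4 homogeneous transform (rotation + translation) to 3D points."""
    out: List[Point] = []
    for x, y, z in points:
        # Multiply [x, y, z, 1] by the top three rows of the pose matrix.
        out.append(tuple(
            pose[r][0] * x + pose[r][1] * y + pose[r][2] * z + pose[r][3]
            for r in range(3)
        ))
    return out


def fuse_agents(clouds: Dict[str, List[Point]],
                poses: Dict[str, Matrix4]) -> List[Point]:
    """Early fusion: transform every agent's points to the world frame and concatenate."""
    fused: List[Point] = []
    for agent, points in clouds.items():
        fused.extend(transform_points(points, poses[agent]))
    return fused


# Usage with made-up values: a CAV whose frame coincides with the world frame,
# and a roadside unit 10 m along world x, rotated 90 degrees about z.
identity = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
rsu_pose = [[0, -1, 0, 10], [1, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
clouds = {"cav_1": [(1.0, 2.0, 0.5)], "rsu": [(1.0, 0.0, 0.0)]}
poses = {"cav_1": identity, "rsu": rsu_pose}
print(fuse_agents(clouds, poses))  # -> [(1.0, 2.0, 0.5), (10.0, 1.0, 0.0)]
```

Concatenating transformed raw points is the simplest collaborative-perception scheme (early fusion). Other V2X methods instead exchange intermediate features or final detections between agents.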

Instructions:

Please visit the Mixed Signals dataset webpage (https://mixedsignalsdataset.cs.cornell.edu/) for detailed instructions on how to download and use the dataset. A devkit will also be provided to make the dataset easier to use.
