Context-Aware Human Activity Recognition (CAHAR) Dataset

- Citation Author(s):

- John Mitchell (University of Leeds)

- Abbas Dehghani-Sanij (University of Leeds)

- Sheng Xie (University of Leeds)

- Rory O'Connor (University of Leeds)

- Submitted by:

- John Mitchell

- Last updated:

- DOI:

- 10.21227/bwee-bv18

- Data Format:

498 views

- Categories:

- Keywords:

Abstract

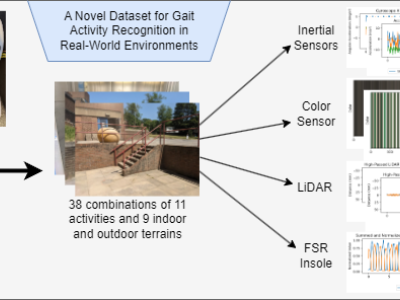

This dataset consists of inertial, force, color, and LiDAR data collected from a novel sensor system. The system comprises three Inertial Measurement Units (IMUs) positioned on the waist and atop each foot, a color sensor on each outer foot, a LiDAR on the back of each shank, and a custom Force-Sensing Resistor (FSR) insole featuring 13 FSRs in each shoe. Twenty participants wore this sensor system whilst performing 38 combinations of 11 activities on 9 different terrains, totaling over 7.8 hours of data. In addition, participants 1 and 3 performed further activity-terrain combinations, which can be used as a test set or as additional training data. The dataset is intended to enable researchers to develop classification techniques capable of identifying both the performed activity and the terrain on which it was performed. These classification techniques will be instrumental in the adoption of remote, real-environment gait analysis with increased accuracy, reproducibility, and scope for diagnosis.

Instructions:

From each of the 20 participants, 85-86 activity files were captured per leg, with a less consistent number from the waist, resulting in a dataset of 4865 activity files. The dataset contains 20 folders of text files named in the format "XXYY.txt". The first two digits refer to the activity index found in the included metadata file, whilst the last two digits refer to the trial number for that activity. Walking trials were repeated twice, with the trial digits "01" for counterclockwise and "02" for clockwise turning. The exceptions were the gravel and paving slab activities, which were shorter but repeated 3 times. Sit to stand, stand to sit, stair ascend, stair descend, ramp ascend, and ramp descend were each repeated 3 times, whilst standing, sitting, and the elevator activities contain only one trial each. The first 2 rows of data in each file contain readings left in the Arduino's buffer from before the new recording began and should be removed.
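The naming scheme and the stale-row rule above can be sketched in a few lines of Python. This is a minimal illustration, not the code from the included notebook: the helper names `parse_trial_filename` and `drop_buffered_rows` are hypothetical, and the sketch assumes that the first line of each file is the column-name header and that the "first 2 rows of data" are the two rows immediately after it.

```python
import re
from typing import List, Tuple

# Pattern for the "XXYY.txt" naming scheme described above.
_NAME_RE = re.compile(r"^(\d{2})(\d{2})\.txt$")


def parse_trial_filename(filename: str) -> Tuple[int, int]:
    """Split 'XXYY.txt' into (activity_index, trial_number)."""
    match = _NAME_RE.match(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename!r}")
    return int(match.group(1)), int(match.group(2))


def drop_buffered_rows(lines: List[str], n_stale: int = 2) -> List[str]:
    """Keep the header line and discard the first n_stale data rows.

    Assumption: lines[0] is the column-name header, so the stale
    Arduino buffer readings are lines[1] and lines[2].
    """
    if not lines:
        return []
    header, data = lines[0], lines[1:]
    return [header] + data[n_stale:]
```

For example, `parse_trial_filename("0502.txt")` yields activity index 5 and trial number 2; the activity index then needs to be looked up in the metadata file.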

For the leg subsystems, each file contains the following columns: time elapsed in milliseconds, LiDAR distance in millimeters, 9-axis Inertial Measurement Unit (IMU) channels, unprocessed light value 'C', red (R), green (G), and blue (B) color values from 0 to 255, color temperature, processed light value 'Lux', and the 13 Force-Sensing Resistor (FSR) values in the range 0 to 1023. The waist sensor files contain only the time elapsed and IMU channels in the same format. All text files include column names, and all data is stored in the Comma-Separated Value (CSV) format.
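As a quick sanity check when loading files, the column list above can be tallied to give an expected column count per subsystem. The sketch below assumes that each listed quantity occupies exactly one column (so the 9-axis IMU contributes nine columns); the group labels and the `guess_subsystem` helper are hypothetical and are not the actual header names used in the files.

```python
# Column groups for a leg file, in the order given in the description.
# Assumption: one column per listed quantity; labels are illustrative only.
LEG_COLUMN_GROUPS = {
    "time_ms": 1,
    "lidar_mm": 1,
    "imu": 9,
    "light_c": 1,
    "rgb": 3,
    "color_temperature": 1,
    "lux": 1,
    "fsr": 13,
}

# Waist files carry only the elapsed time and the IMU channels.
WAIST_COLUMN_GROUPS = {"time_ms": 1, "imu": 9}

EXPECTED_LEG_COLUMNS = sum(LEG_COLUMN_GROUPS.values())      # 30
EXPECTED_WAIST_COLUMNS = sum(WAIST_COLUMN_GROUPS.values())  # 10


def guess_subsystem(header_line: str) -> str:
    """Classify a file as 'leg' or 'waist' from its CSV header width."""
    n_columns = len(header_line.strip().split(","))
    if n_columns == EXPECTED_LEG_COLUMNS:
        return "leg"
    if n_columns == EXPECTED_WAIST_COLUMNS:
        return "waist"
    raise ValueError(f"unexpected column count: {n_columns}")
```

If a file's header width matches neither count, it is worth checking the deviations recorded in the metadata file before discarding it.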

Included in the dataset is a Jupyter notebook which performs the recommended preprocessing, naming, and cleaning to streamline the preprocessing phase of data analysis. This step can be bypassed if preferred, as the data in the uploaded dataset is unaltered. Also included are a diagram of the FSR layout and numbering scheme, a metadata file stating the demographic data of each participant along with any deviations from the data collection procedure, and a Jupyter notebook which can generate animated ".gif" heatmaps of the insole data for a given activity. This information can also be found in the metadata file.
