video super-resolution quality assessment database (VSR-QAD)-3

- Citation Author(s):

- Fei Zhou, Wei Sheng, Zitao Lu, Guoping Qiu

- Submitted by:

- Fei Zhou

- Last updated:

- DOI:

- 10.21227/v7qr-sm72

45 views

- Categories:

- Keywords:

Abstract

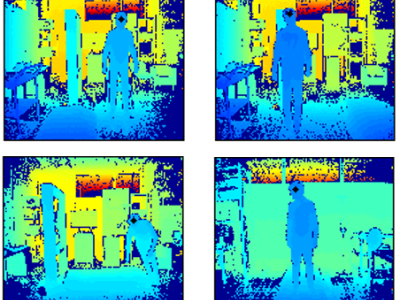

Video super-resolution (SR) has important real-world applications, such as enhancing the viewing experience of legacy low-resolution videos on high-resolution display devices. However, there are no visual quality assessment (VQA) models specifically designed for evaluating SR videos, even though such models are crucial both for advancing video SR algorithms and for viewing quality assurance. We therefore establish a super-resolution video quality assessment database (VSR-QAD) to support super-resolution video quality assessment. VSR-QAD consists of 120 high-quality, high-resolution reference videos. These videos were first spatially down-scaled by factors of x2, x4, and x8, and then super-resolved back to their original resolutions by 10 representative SR algorithms to obtain 2,400 SR videos. Psychovisual experiments were carried out to acquire subjective quality labels for these SR videos: 190 subjects spent a total of approximately 400 hours on both absolute rating and relative rating experiments. After aligning the relative and absolute scores and removing outliers, 2,260 SR videos are labeled with mean opinion scores (MOSs).
