Sequential Storytelling Image Dataset (SSID)

- Citation Author(s):

- Zainy M. Malakan (Department of Computer Science and Software Engineering, The University of Western Australia, 35 Stirling Hwy, Crawley WA 6009, Australia)

- Saeed Anwar (Information and Computer Science, King Fahad University of Petroleum and Minerals, Dhahran 31261, Saudi Arabia)

- Submitted by:

- Zainy Malakan

- Last updated:

- DOI:

- 10.21227/dbr9-dq51

- Data Format:

- Research Article Link:

2028 views


- Categories:

- Keywords:

Abstract

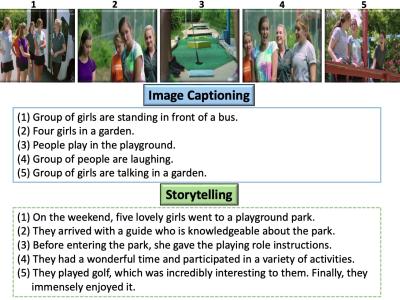

Visual storytelling, also known as multi-image captioning, is the task of describing a set of images rather than a single image. The Visual Storytelling Task (VST) takes a set of images as input and aims to generate a coherent story relevant to those images. To support expressive and coherent story creation, we present the Sequential Storytelling Image Dataset (SSID), which consists of open-source video frames accompanied by story-like annotations. We provide four annotations (i.e., stories) for each set of five images. The image sets were collected manually from publicly available videos in three domains (documentaries, lifestyle, and movies) and then annotated manually using Amazon Mechanical Turk. In summary, SSID comprises 17,365 images, forming 3,473 unique sets of five images. Each set is associated with four ground truths, for a total of 13,892 unique ground truths (i.e., written stories), and each ground truth consists of five connected sentences written in the form of a story.
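The counts quoted in the abstract follow from simple arithmetic, and a short Python sketch can confirm that they are mutually consistent. The function name `dataset_counts` and the dictionary keys are illustrative assumptions, not part of any official SSID tooling; the sentence total is derived from the stated figures and does not appear in the dataset description itself.

```python
# Figures taken from the abstract.
TOTAL_IMAGES = 17_365
IMAGES_PER_SET = 5
STORIES_PER_SET = 4
SENTENCES_PER_STORY = 5


def dataset_counts(total_images: int = TOTAL_IMAGES) -> dict:
    """Derive set, story, and sentence counts from the image total.

    Hypothetical helper for illustration only.
    """
    if total_images % IMAGES_PER_SET != 0:
        raise ValueError("image total is not a multiple of the set size")
    num_sets = total_images // IMAGES_PER_SET
    num_stories = num_sets * STORIES_PER_SET
    num_sentences = num_stories * SENTENCES_PER_STORY  # derived, not stated
    return {"sets": num_sets, "stories": num_stories, "sentences": num_sentences}


if __name__ == "__main__":
    print(dataset_counts())
```

With the stated image total, the derived set and story counts match the 3,473 sets and 13,892 stories reported above.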

Instructions:

The SSID dataset comprises 17,365 images, forming 3,473 unique sets of five images. Each set is associated with four ground truths, for a total of 13,892 unique ground truths (i.e., written stories), and each ground truth consists of five connected sentences written in the form of a story. Please see the attached PDF file for additional instructions.
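As a rough illustration of the structure described here, one image set with its stories might be modeled and checked as follows. This is a minimal sketch under stated assumptions: the class `StorySet`, its field names, and `validate_story_set` are hypothetical and do not reflect the actual file layout of the released dataset, which is documented in the attached PDF.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StorySet:
    """Hypothetical in-memory record for one SSID image set."""
    image_ids: List[str]       # expected: 5 image identifiers
    stories: List[List[str]]   # expected: 4 stories, each a list of 5 sentences


def validate_story_set(item: StorySet) -> List[str]:
    """Return a list of structural problems; an empty list means the set is well formed."""
    problems = []
    if len(item.image_ids) != 5:
        problems.append(f"expected 5 images, found {len(item.image_ids)}")
    if len(item.stories) != 4:
        problems.append(f"expected 4 stories, found {len(item.stories)}")
    for index, story in enumerate(item.stories):
        if len(story) != 5:
            problems.append(
                f"story {index} has {len(story)} sentences, expected 5"
            )
    return problems


if __name__ == "__main__":
    # Placeholder identifiers and sentences, not real dataset content.
    example = StorySet(
        image_ids=[f"img_{i}" for i in range(5)],
        stories=[[f"sentence {j}" for j in range(5)] for _ in range(4)],
    )
    print(validate_story_set(example))
```

A check of this kind is useful after loading the annotations, since a set with a missing image or an incomplete story would otherwise only surface later during training or evaluation.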

In reply to Hi, I need access to this by Chiranjib Bhat…

Subject: Request for DiDeMoSV Story Continuation Datasets

Dear author of StoryDALL-E,

I am a researcher in the field of story generation. I am writing to request access to the DiDeMoSV story continuation datasets. These datasets would be of great value to my current research project as I strive to develop new story generation algorithms. I assure you that the datasets will be used solely for research purposes. Thank you for considering my request. I look forward to your positive response.

Best regards, Ting Pan

In reply to Hi, I need access to this by Chiranjib Bhat…

https://github.com/zmmalakan/SSID-Dataset

In reply to I also need this data set for by Dilip kumar

https://github.com/zmmalakan/SSID-Dataset

In reply to how to match the set of by zhziqian Li

https://github.com/zmmalakan/SSID-Dataset

In reply to hey there i need this dataset by TEJA DALAYAI

https://github.com/zmmalakan/SSID-Dataset

Can you please grant access to the dataset for my NLP project?

In reply to Can you please grant access by Shantanu Pathak

https://github.com/zmmalakan/SSID-Dataset