A new dataset of elicited behavioral data on ambiguity in monetary decisions, including preferences that contradict CPT, CEU, SEU, and other models due to tail-separability paradoxes

- Submitted by: Camelia Al-Najjar

- DOI: 10.21227/a8qn-d389

202 views

Abstract

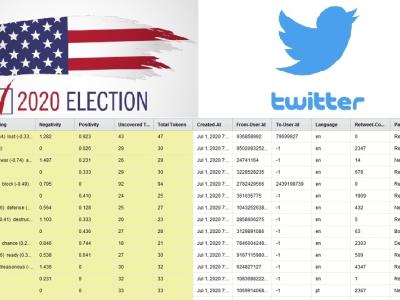

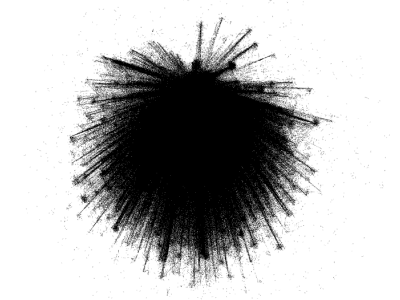

To test how well different ambiguity models represent real decision-making under ambiguity, we ran an incentivized experiment of choice under ambiguity. The study involved 310 participants recruited through Amazon Mechanical Turk (MTurk), an online international labor market, and was implemented on the survey platform Qualtrics. Each of the 310 subjects made 150 choices between two options, covering variations of the four ambiguity problems at varying levels of ambiguity and risk. All payoffs were gains, ranging from $0 to $10.

To motivate participants to reveal their true preferences, part of their compensation was determined by a random incentive mechanism, as is common in the literature: participants received a fixed participation fee plus a bonus based on their response to one randomly selected decision. The average compensation was $6.14.

The majority of subjects (87.6%) were located in the United States and 10.6% in India. Sixty percent of the subjects had some form of higher education, with most of the remainder educated to high school level. Subjects were 59% male.

Each model is estimated by maximizing its log-likelihood function and then tested on held-out choices. The 150 decision choices are randomly split into roughly 80% for in-sample estimation and 20% for out-of-sample testing. Each model is first trained (fitted) by maximizing its log-likelihood on the randomly selected in-sample set of 118 choices; the remaining 32 choices form the out-of-sample set, on which the model's predictions are evaluated using the optimized parameters. The models are then compared by their out-of-sample log-likelihood, with the highest value indicating the best predictive performance.
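A minimal Python sketch of the random 118/32 split of the 150 choices, assuming the same partition of choice indices is applied to every subject (the text does not say whether the split is drawn once or per subject). The function name and seed are hypothetical and serve only to make the example reproducible.

```python
import random

N_CHOICES = 150    # decision choices per subject (from the study design)
N_IN_SAMPLE = 118  # in-sample (training) choices; the other 32 are held out

def split_choices(n_choices=N_CHOICES, n_in=N_IN_SAMPLE, seed=2020):
    """Randomly partition choice indices 0..n_choices-1 into in-sample and out-of-sample sets.

    The seed is a hypothetical value used only to make this sketch reproducible.
    """
    rng = random.Random(seed)
    indices = list(range(n_choices))
    rng.shuffle(indices)
    in_sample = sorted(indices[:n_in])
    out_of_sample = sorted(indices[n_in:])
    return in_sample, out_of_sample

in_sample, out_of_sample = split_choices()
print(len(in_sample), len(out_of_sample))  # 118 32
```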
Because the models are evaluated out of sample, additional parameters confer no automatic advantage: a model that overfits the in-sample set will tend to predict the out-of-sample set worse. Maximum likelihood estimation (MLE) is performed in Stata/IC 16 using the ml command with the linear-form (lf) method, with custom user-written likelihood and prediction functions for every model tested. The dataset contains all Stata data and command files needed to replicate the out-of-sample model prediction comparison.
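The estimate-then-score procedure can be illustrated with a short Python sketch. It is not the study's Stata code and does not implement CPT, CEU, or SEU: the two toy value functions (expected value and a power-utility variant), the logit choice rule with a noise parameter, the encoding of each option as (weight, payoff) pairs, and the synthetic choices are all hypothetical assumptions. A coarse grid search stands in for Stata's numerical optimizer. The log-likelihood is written as a sum of per-choice contributions, which is the structure a linear-form (lf) evaluator supplies to ml.

```python
import math

def log_sigmoid(z):
    """Numerically stable log(1 / (1 + exp(-z)))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def expected_value(option, params):
    """Hypothetical 0-parameter value function: weighted sum of payoffs."""
    return sum(w * x for w, x in option)

def power_utility(option, params):
    """Hypothetical 1-parameter value function: weighted sum of payoff ** r."""
    (r,) = params
    return sum(w * x ** r for w, x in option)

def log_likelihood(value_fn, params, noise, choices):
    """Sum of per-choice log-likelihood contributions under an assumed logit choice rule."""
    total = 0.0
    for option_a, option_b, chose_a in choices:
        diff = noise * (value_fn(option_a, params) - value_fn(option_b, params))
        total += log_sigmoid(diff) if chose_a else log_sigmoid(-diff)
    return total

def fit(value_fn, param_grid, noise_grid, choices):
    """Grid-search MLE: return (log-likelihood, params, noise) with the best in-sample fit."""
    return max(
        ((log_likelihood(value_fn, p, s, choices), p, s)
         for p in param_grid for s in noise_grid),
        key=lambda t: t[0],
    )

# Synthetic, made-up choices for illustration only; option = [(weight, payoff in $)].
SURE_4, SURE_6, SURE_3 = [(1.0, 4.0)], [(1.0, 6.0)], [(1.0, 3.0)]
COIN = [(0.5, 10.0), (0.5, 0.0)]
SKEW = [(0.25, 10.0), (0.75, 2.0)]
train = [(SURE_4, COIN, True), (SURE_4, COIN, True), (SURE_6, COIN, True),
         (SURE_6, COIN, True), (SURE_3, SKEW, True), (SURE_3, SKEW, False)]
test = [(SURE_4, COIN, True), (SURE_3, SKEW, False)]

NOISE_GRID = [0.1 * k for k in range(1, 51)]  # logit sensitivity from 0.1 to 5.0
MODELS = {
    "expected value": (expected_value, [()]),
    "power utility": (power_utility, [(0.1 * k,) for k in range(1, 21)]),  # r from 0.1 to 2.0
}

out_of_sample_ll = {}
for name, (value_fn, param_grid) in MODELS.items():
    _, params, noise = fit(value_fn, param_grid, NOISE_GRID, train)  # estimate in-sample
    out_of_sample_ll[name] = log_likelihood(value_fn, params, noise, test)  # score held-out choices

best_model = max(out_of_sample_ll, key=out_of_sample_ll.get)
print(out_of_sample_ll, "->", best_model)
```

Because each model is scored only on the held-out choices, the extra parameter in the power-utility variant helps only if it improves prediction on those choices, which is the rationale for the out-of-sample comparison.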

Instructions:

Each folder of the dataset's ZIP archive includes detailed README files and codebooks in plain-text (.txt) format that describe the folder's contents and the data.
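For readers who want to locate the documentation programmatically, the following Python sketch uses the standard-library zipfile module to list the .txt files in each top-level folder of an archive. The folder and file names in the demo are hypothetical; the actual archive layout is described in its own README files.

```python
import io
import zipfile
from collections import defaultdict

def readme_files_by_folder(archive):
    """Group .txt documentation files in a ZIP archive by top-level folder.

    `archive` may be a filesystem path or a binary file-like object.
    Files at the archive root are grouped under the empty string.
    """
    groups = defaultdict(list)
    with zipfile.ZipFile(archive) as zf:
        for name in zf.namelist():
            if name.lower().endswith(".txt"):
                folder = name.split("/", 1)[0] if "/" in name else ""
                groups[folder].append(name)
    return dict(groups)

# Demo on a tiny in-memory archive with hypothetical folder and file names.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("ModelA/ReadMe.txt", "description of ModelA files")
    zf.writestr("ModelA/estimate.do", "* Stata command file")
    zf.writestr("ModelB/Codebook.txt", "variable definitions")
buf.seek(0)

demo = readme_files_by_folder(buf)
print(demo)
```

To inspect the real archive, pass its path instead of the in-memory buffer, for example readme_files_by_folder("dataset.zip"), where the file name is a placeholder.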