LLOT

- Citation Author(s):

- Pengzhi Zhong, Xiaoyu Guo, Defeng Huang, Xiaojun Peng, Yian Li, Qijun Zhao, Shuiwang Li

- Submitted by:

- Shuiwang Li

- Last updated:

- DOI:

- 10.21227/pd6f-am71

41 views


- Categories:

- Keywords:

Abstract

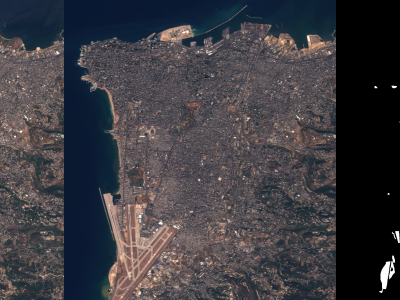

In recent years, visual tracking has made significant progress thanks to large-scale training datasets. These datasets have supported the development of sophisticated algorithms, improving the accuracy and stability of visual object tracking. However, most research has focused on well-lit conditions, neglecting the challenges of tracking in low-light environments. In low-light scenes, lighting may change dramatically, targets may lack distinct texture features, and in some scenarios targets may not be directly observable. These factors can lead to a severe decline in tracking performance. To address this issue, we introduce LLOT, a benchmark specifically designed for Low-Light Object Tracking. LLOT comprises 269 challenging sequences with a total of over 132K frames, each carefully annotated with bounding boxes. The dataset aims to promote innovation and advancement in object tracking techniques for low-light conditions, addressing challenges not adequately covered by existing benchmarks. To assess the performance of existing methods on LLOT, we conducted extensive tests on 39 state-of-the-art tracking algorithms. The results highlight a considerable gap in low-light tracking performance. In response, we propose H-DCPT, a novel tracker that incorporates historical and darkness clue prompts to set a stronger baseline. H-DCPT outperformed all 39 evaluated methods in our experiments, demonstrating significant improvements.

We hope that our benchmark and H-DCPT will stimulate the development of novel and accurate methods for tracking objects in low-light conditions.

Instructions:

LLOT is a benchmark specifically designed for Low-Light Object Tracking. LLOT comprises 269 challenging sequences with a total of over 132K frames, each carefully annotated with bounding boxes.
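
Since every frame carries a bounding-box annotation, a tracker's output can be compared against it frame by frame. The sketch below is illustrative only: this page does not specify the annotation file layout or the evaluation protocol, so it assumes boxes are given as `(x, y, width, height)` in pixels and uses intersection-over-union (IoU), a common overlap measure in tracking. The names `iou_xywh` and `success_rate` are hypothetical and are not part of any LLOT toolkit.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # assumed layout: (x, y, width, height)


def iou_xywh(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes in (x, y, w, h) form."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    # Width and height of the overlapping region, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def success_rate(preds: Sequence[Box], gts: Sequence[Box], threshold: float = 0.5) -> float:
    """Fraction of frames whose predicted box overlaps the annotation above `threshold`."""
    if len(preds) != len(gts):
        raise ValueError("predictions and annotations must cover the same frames")
    if not gts:
        return 0.0
    hits = sum(1 for p, g in zip(preds, gts) if iou_xywh(p, g) > threshold)
    return hits / len(gts)
```

The 0.5 threshold is an arbitrary choice for illustration; benchmark protocols often sweep the threshold across a range rather than fixing a single value.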