Code of Paper: Enhancing Information Freshness and Energy Efficiency in D2D Networks Through DRL-Based Scheduling and Resource Management

- Citation Author(s):

- Parisa Parhizgar (Department of Electrical and Computer Engineering, Isfahan University of Technology, Isfahan)

- Mehdi Mahdavi (Department of Electrical and Computer Engineering, Isfahan University of Technology, Isfahan)

- Submitted by:

- Parisa Parhizgar

- Last updated:

- DOI:

- 10.21227/xrxr-6b13

- Data Format:

472 views

- Categories:

- Keywords:

Abstract

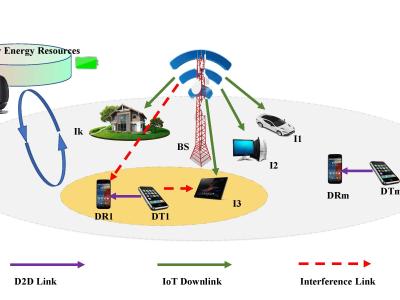

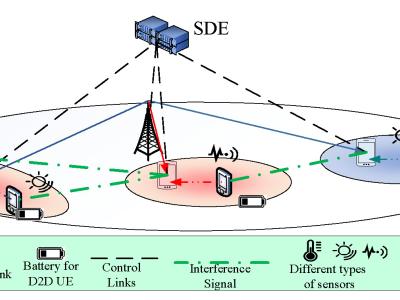

This paper investigates resource management in device-to-device (D2D) networks that coexist with mobile cellular user equipment (CUEs). We introduce a novel model for joint scheduling and resource management in D2D networks that takes environmental constraints into account. To preserve information freshness, quantified by the average age of information (AoI), and to use energy harvesting (EH) technology effectively to meet the network's energy needs, we formulate an online optimization problem that minimizes the average AoI. The formulation accounts for the quality of service (QoS) of both CUEs and D2Ds, available power, information freshness, and environmental sensing requirements. Because the problem is mixed-integer nonlinear and must be solved online, we propose a deep reinforcement learning (DRL) approach. Numerical results show that the proposed joint scheduling and resource management strategy, based on the soft actor-critic (SAC) algorithm, reduces the average AoI by 20% compared with baseline methods.

Instructions:

The code uses the soft actor-critic (SAC) and deep deterministic policy gradient (DDPG) methods.
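For readers unfamiliar with the freshness metric named in the abstract, the following is a minimal sketch of how an average AoI could be computed for a given schedule. It is not taken from the paper's code. It assumes a common discrete-time AoI model in which each link's age grows by one per slot and resets to one when an update is delivered; the function name `simulate_average_aoi` and its parameters are hypothetical.

```python
def simulate_average_aoi(schedule, num_links, initial_age=1):
    """Return the time- and link-averaged AoI for a fixed schedule.

    Assumed model (illustrative, not the paper's exact formulation):
    each slot, a link's age resets to 1 if its update is delivered,
    otherwise it grows by 1.

    schedule: list of sets; schedule[t] holds the indices of links whose
              update is successfully delivered in slot t.
    """
    if not schedule or num_links <= 0:
        raise ValueError("schedule and num_links must be non-empty/positive")

    ages = [initial_age] * num_links
    total_age = 0
    for delivered in schedule:
        # Update every link's age according to the assumed AoI rule.
        ages = [1 if k in delivered else ages[k] + 1 for k in range(num_links)]
        total_age += sum(ages)

    # Average over both time slots and links.
    return total_age / (len(schedule) * num_links)


if __name__ == "__main__":
    # Two links served alternately over four slots.
    alternating = [{0}, {1}, {0}, {1}]
    print(simulate_average_aoi(alternating, num_links=2))
```

In a DRL setting, a quantity like this would typically enter the reward with a negative sign, so that an agent such as SAC or DDPG is rewarded for schedules that keep ages low; how the paper's reward is actually shaped is not stated here.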