American Sign Language Dataset

- Citation Author(s):

-

Bhavy Kharbanda

- Submitted by:

- Bhavy Kharbanda

- Last updated:

- DOI:

- 10.21227/cbg0-7552

- Data Format:

4858 views

4858 views

- Categories:

- Keywords:

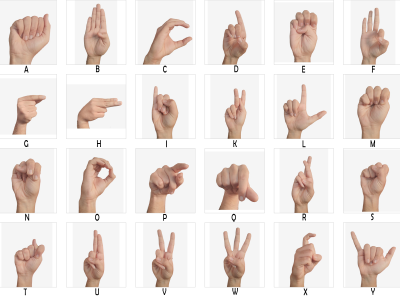

Abstract

Speech impairment constitutes a challenge to an individual's ability to communicate effectively through speech and hearing. To overcome this, affected individuals’ resort to alternative modes of communication, such as sign language. Despite the increasing prevalence of sign language, there still exists a hindrance for non-sign language speakers to effectively communicate with individuals who primarily use sign language for communication purposes. Sign languages are a class of languages that employ a specific set of hand gestures, movements, and postures to convey messages. Deaf and Hard-of-Hearing (DHH) people require some type of technology to convey their feelings or if I rephrase it, we are the people required to learn sign language to be able to understand what a DHH person is trying to convey, and with a motive to convey our concern towards the same, we are here with our project.

The main motive behind this project is to provide such users with a user-friendly interface to convey their messages to a person who doesn’t understand sign language using deep learning technology. The objective of this project is to conduct an analysis and recognition of various alphabets present in a database of sign images in real-time using the camera of the device to form sentences. This project will include the formation of a dataset on which such a model can be trained, that is we will be creating our dataset and won’t be using any existing one. Further, using Convolutional Neural nets will be used to train a classifier to predict the alphabet gestured by the user. As a further extension to the project, our interface will also include a feature to show exactly the opposite of the same. It will be able to generate a sign sequence on the basis of an input sentence in order to educate people about using sign language. This function will help the user to communicate with the DHH person using their own method i.e., Sign language.

Instructions:

Our dataset contains a series of different folders containing different format of images, like the root images, augmented images, preprocessed images (grayscale, histogram equalization, binarized images), and skeleton mediapipe landmark images for various classes of the alphabets.

Each folder is divided into two types of folders, each containing a different set of images which were segregated by the color complexion of the hands.

Root Images

Root signs that are used for further processing.

Augmented Data

Class Distribution Folder - Each class folder containing one image of the sign.

Root Image Clear BG - Root images with clear background.

Train Data - Artificially augmented data using various pipelines of parameters.

Pre-Processed Data

Edge Detection - Pre processed images on which Edge detection techniques are applied.

GrayScale Data - Grayscale images for the root images.

Histgram Data - Histogram equalization techniques were applied on the root images.

Pre processing Folder

It contais various pipelines for the pre processed data and two different sets of images for different skin complexions of signs.

Skeleton Data

It contains the mediapipe converted image for each sign and for each class resepctively.

Thank you

In reply to Thank you by Ron Deniele Paragoso

Glad to help!