Photoacoustic Source Detection and Reflection Artifact Deep Learning Dataset

- Citation Author(s): Derek Allman (Johns Hopkins University)
- Submitted by: Derek Allman
- DOI: 10.21227/H2ZD39

Abstract

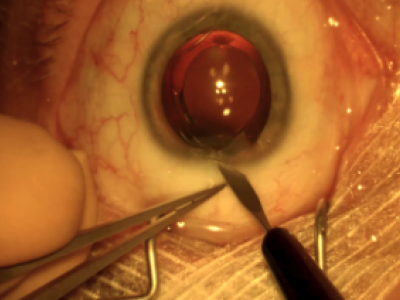

Interventional applications of photoacoustic imaging typically require visualization of point-like targets, such as the small, circular, cross-sectional tips of needles, catheters, or brachytherapy seeds. When these point-like targets are imaged in the presence of highly echogenic structures, the resulting photoacoustic wave creates a reflection artifact that may appear as a true signal. We propose to use deep learning techniques to identify these types of noise artifacts for removal in experimental photoacoustic data. To achieve this goal, a convolutional neural network (CNN) was first trained to locate and classify sources and artifacts in pre-beamformed data simulated with k-Wave. Simulations initially contained one source and one artifact with various medium sound speeds and 2D target locations. Based on 3,468 test images, we achieved a 100% success rate in classifying both sources and artifacts. After adding noise to assess potential performance in more realistic imaging environments, we achieved at least 98% success rates for channel signal-to-noise ratios (SNRs) of -9 dB or greater, with a severe decrease in performance below -21 dB channel SNR. We then explored training with multiple sources and two types of acoustic receivers and achieved similar success with detecting point sources. Networks trained with simulated data were then transferred to experimental waterbath and phantom data with 100% and 96.67% source classification accuracy, respectively (particularly when networks were tested at depths that were included during training). The corresponding mean ± one standard deviation of the point source location error was 0.40 ± 0.22 mm and 0.38 ± 0.25 mm for waterbath and phantom experimental data, respectively, which provides some indication of the resolution limits of our new CNN-based imaging system. We finally show that the CNN-based information can be displayed in a novel artifact-free image format, enabling us to effectively remove reflection artifacts from photoacoustic images, which is not possible with traditional geometry-based beamforming.

Instructions:

Our paper demonstrates the benefits of using deep convolutional neural networks as an alternative to traditional model-based photoacoustic beamforming and data reconstruction techniques [1]. To foster reproducibility and future comparisons, our code, a trained network, a few of our experimental datasets, and instructions for use are freely available on IEEE DataPort [2]. Our code contains three main components: (1) the simulation component, (2) the neural network component, and (3) the analysis component. All of the code for these three components can be found in the GitHub repository associated with our paper [3].

The simulation component relies on the MATLAB toolbox k-Wave to simulate sources for specified medium sound speeds, 2D locations, and transducer parameters. Once the photoacoustic sources are simulated, the sources are shifted and superimposed to generate a database of images representing photoacoustic channel data containing sources and artifacts. Along with the channel data images, annotation files are generated which indicate the location and class (i.e., source or artifact) of the objects in the image. This database can then be used to train a network.
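The shift-and-superimpose step can be illustrated with a minimal Python sketch. This is not the repository's MATLAB code: the function names, the dictionary-based annotation format, and the choice to model the artifact as a copy of the source wavefront delayed in time are all assumptions made for illustration. Channel data is treated as a 2D array with rows as time samples and columns as transducer channels.

```python
import numpy as np

def shift_in_depth(channel_data, n_samples):
    """Delay every channel by n_samples rows (time samples), zero-padding the top.

    Hypothetical helper: rows are time samples, columns are transducer channels.
    """
    if n_samples < 0:
        raise ValueError("n_samples must be non-negative")
    shifted = np.zeros_like(channel_data)
    n_rows = channel_data.shape[0]
    if n_samples < n_rows:
        shifted[n_samples:, :] = channel_data[:n_rows - n_samples, :]
    return shifted

def make_training_example(source_data, artifact_shift, source_box):
    """Superimpose a source and a delayed copy of it, and build annotations.

    Assumption: the artifact is modeled as the source wavefront shifted
    deeper by artifact_shift samples. Boxes are (x_min, y_min, x_max, y_max)
    with x as channel index and y as time-sample index.
    """
    artifact_data = shift_in_depth(source_data, artifact_shift)
    image = source_data + artifact_data
    x_min, y_min, x_max, y_max = source_box
    annotations = [
        {"label": "source", "box": (x_min, y_min, x_max, y_max)},
        {"label": "artifact",
         "box": (x_min, y_min + artifact_shift, x_max, y_max + artifact_shift)},
    ]
    return image, annotations

# Toy example: a single nonzero sample stands in for a simulated wavefront.
toy_source = np.zeros((8, 4))
toy_source[2, 1] = 1.0
toy_image, toy_annotations = make_training_example(toy_source, 3, (0, 1, 3, 3))
```

In practice the input array would come from a k-Wave simulation, and the annotations would be written in whatever file format the training code expects.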

The neural network component is written in the Caffe framework. The repository contains all files necessary to run the neural network processing. For instructions on how to set up your machine to run this program, please see the original Faster R-CNN code repository [4]. To train a network, a dataset of simulated channel data images, along with the annotation files containing the locations of all objects in the images, must be input to the network. The network then processes the training data and outputs the trained network. When testing, channel data is input to the network, which then processes the images and outputs a list of detections with confidence scores and bounding boxes. The IEEE DataPort repository [2] for this project contains a pre-trained network along with the dataset used to generate it, as well as the waterbath and phantom experimental channel data used in our paper.
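As a rough illustration of how such a detection list might be post-processed, the sketch below filters detections by confidence score and takes the center of each bounding box as a location estimate. The `Detection` tuple, the threshold value, and the use of the box center are assumptions for illustration, not the output format or method of the repository code.

```python
from typing import List, NamedTuple, Tuple

class Detection(NamedTuple):
    """Hypothetical container for one network detection."""
    label: str                               # "source" or "artifact"
    score: float                             # confidence score in [0, 1]
    box: Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max), pixels

def keep_confident(detections: List[Detection], threshold: float) -> List[Detection]:
    """Keep only detections whose confidence score meets the threshold."""
    return [d for d in detections if d.score >= threshold]

def box_center(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Return the (x, y) center of a bounding box in pixel coordinates."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0)

# Made-up detections for a single channel data image.
example_detections = [
    Detection("source", 0.98, (10.0, 20.0, 30.0, 40.0)),
    Detection("artifact", 0.91, (10.0, 60.0, 30.0, 80.0)),
    Detection("source", 0.12, (50.0, 5.0, 70.0, 25.0)),
]
confident = keep_confident(example_detections, threshold=0.7)
source_centers = [box_center(d.box) for d in confident if d.label == "source"]
```

Converting a pixel-space center to millimeters would additionally require the image's sample spacing, which depends on the transducer and sampling parameters used.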

Analysis code is also available in the GitHub repository [3]. It is intended for testing performance on simulated data: it evaluates the detections made by the network and outputs the classification, misclassification, and missed detection rates, as well as the location error. All experimental data were analyzed by hand.
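One way such rates could be computed is sketched below. The matching rule is an assumption for illustration, not necessarily the criterion used in the repository: a ground-truth object counts as correctly classified if a detection with the same label overlaps it with an intersection over union (IoU) of at least `min_iou`, as misclassified if only detections with a different label overlap it, and as missed otherwise.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)

def score_image(truths, detections, min_iou=0.5):
    """Count correct, misclassified, and missed ground-truth objects.

    truths and detections are lists of (label, box) pairs. The IoU-based
    matching rule and the 0.5 default are illustrative assumptions.
    """
    counts = {"correct": 0, "misclassified": 0, "missed": 0}
    for truth_label, truth_box in truths:
        overlapping_labels = [
            det_label for det_label, det_box in detections
            if iou(truth_box, det_box) >= min_iou
        ]
        if truth_label in overlapping_labels:
            counts["correct"] += 1
        elif overlapping_labels:
            counts["misclassified"] += 1
        else:
            counts["missed"] += 1
    return counts

def to_rates(counts):
    """Convert counts to fractions of all ground-truth objects."""
    total = sum(counts.values())
    return {key: (value / total if total else 0.0) for key, value in counts.items()}

# Made-up ground truth and detections for one image.
example_truths = [
    ("source", (0, 0, 10, 10)),
    ("artifact", (0, 20, 10, 30)),
    ("source", (50, 50, 60, 60)),
]
example_dets = [
    ("source", (1, 1, 11, 11)),    # overlaps the first source
    ("source", (0, 21, 10, 31)),   # overlaps the artifact with the wrong label
]
example_counts = score_image(example_truths, example_dets)
example_rates = to_rates(example_counts)
```

Summing the counts over all test images before calling `to_rates` would give dataset-level rates.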

More detailed instructions for each component can be found in the respective repositories.

[1] Allman D, Reiter A, Bell MAL, Photoacoustic source detection and reflection artifact removal enabled by deep learning, IEEE Transactions on Medical Imaging, 37(6):1464-1477, 2018

[2] Allman D, Photoacoustic Source Detection and Reflection Artifact Deep Learning Dataset, IEEE DataPort, DOI: 10.21227/H2ZD39

[3] https://github.com/derekallman/Photoacoustic-FasterRCNN

[4] https://github.com/rbgirshick/py-faster-rcnn

If you use the data/code for research, please cite [1]. For commercial use, please contact mledijubell@jhu.edu to discuss the related IP.