Fine-Grained Balanced Cyberbullying Dataset

- Citation Author(s):

- Jason Wang, Kaiqun Fu, Chang-Tien Lu

- Submitted by:

- Jason Wang


- DOI:

- 10.21227/kn1c-zx22


5069 views



Abstract

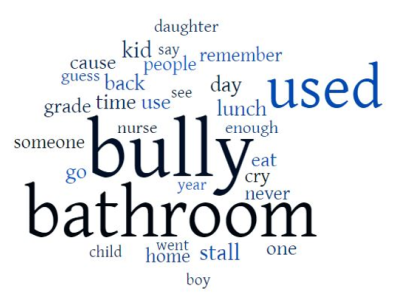

Amidst the COVID-19 pandemic, cyberbullying has become an even more serious threat. Our work investigates the viability of an automatic multiclass cyberbullying detection model that classifies whether a cyberbully is targeting a victim’s age, ethnicity, gender, religion, or other quality. Previous literature has not yet explored fine-grained cyberbullying classification at this scale, and existing cyberbullying datasets suffer from severe class imbalances. To address these challenges, we establish a framework for the automatic generation of balanced data, using a semi-supervised online Dynamic Query Expansion (DQE) process to extract more natural data points of a specific class from Twitter. We also propose a Graph Convolutional Network (GCN) classifier that operates on a graph constructed from the thresholded cosine similarities between tweet embeddings. With our DQE-augmented dataset, which we have made publicly available, we compare our GCN model using eight different tweet embedding methods against six other classification models over two sizes of datasets. Our results show that our proposed GCN model matches or exceeds the performance of the baseline models, as indicated by McNemar's statistical tests.
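The graph construction mentioned in the abstract (edges from thresholded cosine similarities between tweet embeddings) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the threshold value, the use of binary (unweighted) edges, and the added self-loops are all assumptions made for illustration, and the paper may differ on each of these points.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_adjacency(embeddings, threshold=0.5):
    """Build a symmetric 0/1 adjacency matrix over tweets.

    Two tweets are connected when the cosine similarity of their
    embeddings is at least `threshold`. The default threshold and the
    self-loops on the diagonal are illustrative assumptions.
    """
    n = len(embeddings)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        adjacency[i][i] = 1  # self-loop (assumed; common in GCN formulations)
        for j in range(i + 1, n):
            if cosine_similarity(embeddings[i], embeddings[j]) >= threshold:
                adjacency[i][j] = 1
                adjacency[j][i] = 1
    return adjacency


# Toy 2-dimensional "embeddings": the first two point in nearly the same direction.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
toy_adjacency = build_adjacency(toy_embeddings, threshold=0.8)
print(toy_adjacency)  # [[1, 1, 0], [1, 1, 0], [0, 0, 1]]
```

In this toy example only the first two vectors are similar enough to be linked. A real pipeline would use one of the tweet embedding methods compared in the paper and would likely need a vectorized similarity computation for tens of thousands of tweets.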

Instructions:

Please cite the following paper when using this open access dataset:

J. Wang, K. Fu, and C.-T. Lu, “SOSNet: A Graph Convolutional Network Approach to Fine-Grained Cyberbullying Detection,” Proceedings of the 2020 IEEE International Conference on Big Data (IEEE BigData 2020), pp. 1699-1708, December 10-13, 2020. DOI: 10.1109/BigData50022.2020.9378065

This dataset was balanced using Dynamic Query Expansion (DQE). It consists of .txt files containing 8000 tweets for each of six fine-grained classes: age, ethnicity, gender, religion, other, and not cyberbullying.
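As a starting point for working with the files, the sketch below loads the tweets into parallel lists of texts and labels. The file names in `CLASS_FILES` are hypothetical, and the one-tweet-per-line layout is an assumption; check both against the downloaded archive before relying on this.

```python
from pathlib import Path

# Hypothetical file names: the actual names in the download may differ.
CLASS_FILES = {
    "age": "age.txt",
    "ethnicity": "ethnicity.txt",
    "gender": "gender.txt",
    "religion": "religion.txt",
    "other_cyberbullying": "other_cyberbullying.txt",
    "not_cyberbullying": "not_cyberbullying.txt",
}


def load_tweets(data_dir, class_files=CLASS_FILES):
    """Return (texts, labels), assuming one tweet per line in each class file."""
    texts, labels = [], []
    for label, filename in class_files.items():
        path = Path(data_dir) / filename
        with open(path, encoding="utf-8") as handle:
            for line in handle:
                tweet = line.strip()
                if tweet:  # skip blank lines
                    texts.append(tweet)
                    labels.append(label)
    return texts, labels
```

Calling `load_tweets("path/to/dataset")` would then return two lists of equal length. If the layout assumption holds, counting the labels should show the same number of tweets for every class.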

Total Size: 6.33 MB

Includes some data from:

S. Agrawal and A. Awekar, “Deep learning for detecting cyberbullying across multiple social media platforms,” in European Conference on Information Retrieval. Springer, 2018, pp. 141–153.

U. Bretschneider, T. Wohner, and R. Peters, “Detecting online harassment in social networks,” in ICIS, 2014.

D. Chatzakou, I. Leontiadis, J. Blackburn, E. De Cristofaro, G. Stringhini, A. Vakali, and N. Kourtellis, “Detecting cyberbullying and cyberaggression in social media,” ACM Transactions on the Web (TWEB), vol. 13, no. 3, pp. 1–51, 2019.

T. Davidson, D. Warmsley, M. Macy, and I. Weber, “Automated hate speech detection and the problem of offensive language,” arXiv preprint arXiv:1703.04009, 2017.

Z. Waseem and D. Hovy, “Hateful symbols or hateful people? Predictive features for hate speech detection on Twitter,” in Proceedings of the NAACL Student Research Workshop, 2016, pp. 88–93.

Z. Waseem, “Are you a racist or am I seeing things? Annotator influence on hate speech detection on Twitter,” in Proceedings of the First Workshop on NLP and Computational Social Science, 2016, pp. 138–142.

J.-M. Xu, K.-S. Jun, X. Zhu, and A. Bellmore, “Learning from bullying traces in social media,” in Proceedings of the 2012 conference of the North American chapter of the association for computational linguistics: Human language technologies, 2012, pp. 656–666.