RGBT234

- Citation Author(s): Chenglong Li

- Submitted by: Weidai Xia

- DOI: 10.21227/hqj7-rd94

Abstract

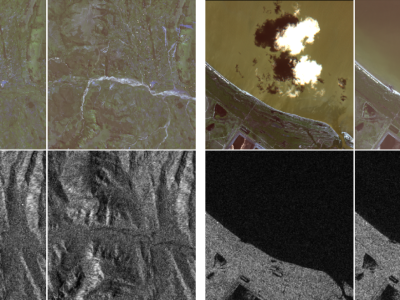

This dataset is a large-scale video benchmark for RGB-Thermal (RGB-T) object tracking. Its key characteristics are:

1. **Scale & Diversity**

- Contains about 234,000 frames in total, with individual sequences of up to 8,000 frames

- Covers diverse scenarios and complex environmental conditions

- At the time of its release, the largest publicly available RGB-T tracking dataset

2. **Precise Multimodal Alignment**

- Strict spatiotemporal synchronization between RGB and thermal sequences

- Can be used directly, with no pre- or post-processing required

- Ensures cross-modal data consistency for reliable comparisons

3. **Granular Annotation System**

- Bounding-box annotations for every frame

- Occlusion-level labels for the tracked objects

- Enables occlusion-sensitive analysis and robustness evaluation

4. **Core Innovations**

- Overcomes the scale limitations of earlier datasets, enabling more comprehensive evaluation

- The first benchmark with pixel-level alignment between modalities

- Introduces quantitative occlusion analysis as a new evaluation dimension

- Provides standardized validation for multimodal fusion algorithms
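Because the RGB and thermal streams are synchronized frame by frame, and each frame carries a bounding box and an occlusion label, a sequence can be represented as one list of paired records. The following is a minimal sketch under stated assumptions. The field names, the `(x, y, width, height)` box convention, the occlusion label strings, and the example paths are all hypothetical. They are not taken from the dataset's actual file format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

# Assumed box convention: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


@dataclass(frozen=True)
class FramePair:
    """One time step: an RGB frame, the thermal frame captured at the same
    instant, and the annotation for that step (hypothetical field names)."""
    index: int
    rgb_path: str
    thermal_path: str
    box: Box
    occlusion: str  # hypothetical labels such as "none", "partial", "heavy"


def build_sequence(
    rgb_paths: Sequence[str],
    thermal_paths: Sequence[str],
    boxes: Sequence[Box],
    occlusion: Sequence[str],
) -> List[FramePair]:
    """Pair both modalities by frame index. Because the streams are assumed
    to be synchronized already, no resampling or registration is applied."""
    n = len(rgb_paths)
    if not (len(thermal_paths) == len(boxes) == len(occlusion) == n):
        raise ValueError("all per-frame lists must have the same length")
    return [
        FramePair(i, rgb, thermal, box, occ)
        for i, (rgb, thermal, box, occ) in enumerate(
            zip(rgb_paths, thermal_paths, boxes, occlusion)
        )
    ]


def frames_by_occlusion(seq: Sequence[FramePair]) -> Dict[str, List[int]]:
    """Group frame indices by occlusion label for occlusion-sensitive analysis."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for frame in seq:
        groups[frame.occlusion].append(frame.index)
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical paths and labels, for illustration only.
    seq = build_sequence(
        ["visible/0001.jpg", "visible/0002.jpg"],
        ["infrared/0001.jpg", "infrared/0002.jpg"],
        [(10, 20, 30, 40), (12, 21, 30, 40)],
        ["none", "partial"],
    )
    print(frames_by_occlusion(seq))  # {'none': [0], 'partial': [1]}
```

The length check is the only consistency test in the sketch. If the two streams are truly aligned, pairing by index is all that is needed, and a mismatch signals a loading error rather than a timing offset.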

The dataset addresses longstanding evaluation challenges in RGB-T tracking by offering:

- Precisely aligned cross-modal data streams

- Fine-grained occlusion annotations

- Large-scale sample capacity

It enables in-depth research on:

- Verifying the effectiveness of multimodal feature fusion

- Assessing sustained performance in long-term tracking

- Testing algorithm robustness under varying occlusion levels

- Benchmarking cross-modal representation learning methods
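The occlusion-robustness testing listed above needs a per-frame overlap metric that can be aggregated by occlusion level. The sketch below uses intersection-over-union (IoU) together with an area-under-the-success-plot score, a combination common in single-object tracking benchmarks, and reports that score for each occlusion label separately. Several choices here are assumptions for illustration and not the dataset's official evaluation protocol or toolkit: the metric itself, the 21-point threshold grid, the `(x, y, width, height)` box format, and the label strings.

```python
from typing import Dict, List, Sequence, Tuple

# Assumed box convention: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def success_score(overlaps: Sequence[float], steps: int = 21) -> float:
    """Area under the success plot: for each threshold in an evenly spaced
    grid over [0, 1], the fraction of frames whose IoU exceeds it, averaged."""
    if not overlaps:
        return 0.0
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


def success_by_occlusion(
    predicted: Sequence[Box],
    ground_truth: Sequence[Box],
    occlusion: Sequence[str],
) -> Dict[str, float]:
    """Compute the success score separately for each occlusion label."""
    if not (len(predicted) == len(ground_truth) == len(occlusion)):
        raise ValueError("predictions, ground truth and labels must align frame by frame")
    overlaps: Dict[str, List[float]] = {}
    for pred, gt, label in zip(predicted, ground_truth, occlusion):
        overlaps.setdefault(label, []).append(iou(pred, gt))
    return {label: success_score(values) for label, values in overlaps.items()}


if __name__ == "__main__":
    # Toy data: one perfect prediction, one half-shifted, one complete miss.
    gt = [(0, 0, 10, 10), (0, 0, 10, 10), (0, 0, 10, 10)]
    pred = [(0, 0, 10, 10), (5, 0, 10, 10), (50, 50, 10, 10)]
    labels = ["none", "partial", "heavy"]
    print(success_by_occlusion(pred, gt, labels))
```

Scoring each occlusion label separately, rather than averaging over the whole sequence, keeps a tracker's drop under heavy occlusion visible. A sequence-level average would hide it behind the many unoccluded frames that usually dominate long sequences.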

As the first comprehensive RGB-T tracking benchmark, it provides a standardized basis for evaluating and comparing visible-thermal fusion trackers and supports further progress in the field.