MIMOGR: MIMO millimeter-wave radar multi-feature dataset for gesture recognition

- Submitted by: qin chen
- Last updated: Thu, 11/24/2022 - 22:39
- DOI: 10.21227/szqd-6772

805 Views

Abstract

Radar-based dynamic gesture recognition has broad prospects for touchless Human-Computer Interaction (HCI), thanks to advantages such as privacy protection and all-day operation. However, existing radar gesture datasets and methods lack complete motion direction information, which makes them difficult to apply to motion-direction-sensitive gesture recognition and to cross-domain recognition tasks (across different users, locations, environments, etc.). Therefore, this paper constructs a dataset of sensitive feature maps (RT, DT, ART, ERT, and RDT) based on the range, velocity, altitude, and azimuth information of a MIMO millimeter-wave radar. The dataset covers seven gesture types: waving up, waving down, waving left, waving right, waving forward, waving backward, and double-tap. To make the dataset realistic, we use two data collection strategies: collecting gesture samples from a variety of volunteers, and collecting gesture data in a variety of scenarios.

The dataset contains two parts: a volunteer-based dataset and a scene-based dataset (abbreviated as dataV and dataS). dataV consists of the seven gestures performed by each of 10 volunteers in an ideal indoor setting. We labeled these volunteers P1 to P10. Volunteers P1-P5 were familiar with our defined gestures before the experiment, including the distance from the gesture to the radar and the amplitude and speed of the gesture. During data collection, volunteers P1-P5 were asked to perform the gestures in as standard a manner as possible. In contrast, volunteers P6-P10 had no prior exposure to the system. They were only shown the collection process through a brief demonstration by the experimenter, and they performed the gestures as naturally as possible. Although the number of volunteers was relatively small, we obtained a total of 6847 samples over a long collection period. dataS, which is mainly used to validate and improve the generalization performance of our method, consists of 2800 samples collected from a variety of complex environments and random users, and is therefore closer to real applications.
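The volunteer grouping described above lends itself to a cross-user evaluation in which one group is used for training and the other is held out. The following is a minimal sketch of such a split, not the authors' protocol: the record layout (a dict with `volunteer` and `gesture` keys) and the function name `split_by_volunteer` are assumptions made for illustration, and the records are synthetic placeholders rather than real samples.

```python
# Sample counts as stated in the dataset description.
DATAV_SAMPLES = 6847  # volunteer-based part (dataV)
DATAS_SAMPLES = 2800  # scene-based part (dataS)
TOTAL_SAMPLES = DATAV_SAMPLES + DATAS_SAMPLES

# Volunteer groups as described: P1-P5 knew the gestures beforehand,
# P6-P10 only saw a brief demonstration.
FAMILIAR = {f"P{i}" for i in range(1, 6)}
UNFAMILIAR = {f"P{i}" for i in range(6, 11)}


def split_by_volunteer(records, train_ids):
    """Split records so that no volunteer appears on both sides.

    `records` is a hypothetical list of dicts with a "volunteer" key;
    the real on-disk layout of the dataset is not specified here.
    """
    train, held_out = [], []
    for rec in records:
        (train if rec["volunteer"] in train_ids else held_out).append(rec)
    return train, held_out


# Synthetic placeholder records: one per (volunteer, gesture) pair.
records = [
    {"volunteer": f"P{v}", "gesture": g}
    for v in range(1, 11)
    for g in range(7)
]
train, held_out = split_by_volunteer(records, FAMILIAR)
```

Splitting by volunteer ID rather than by random sample keeps every held-out sample from an unseen user, which is the situation the cross-domain tasks in the description are concerned with.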

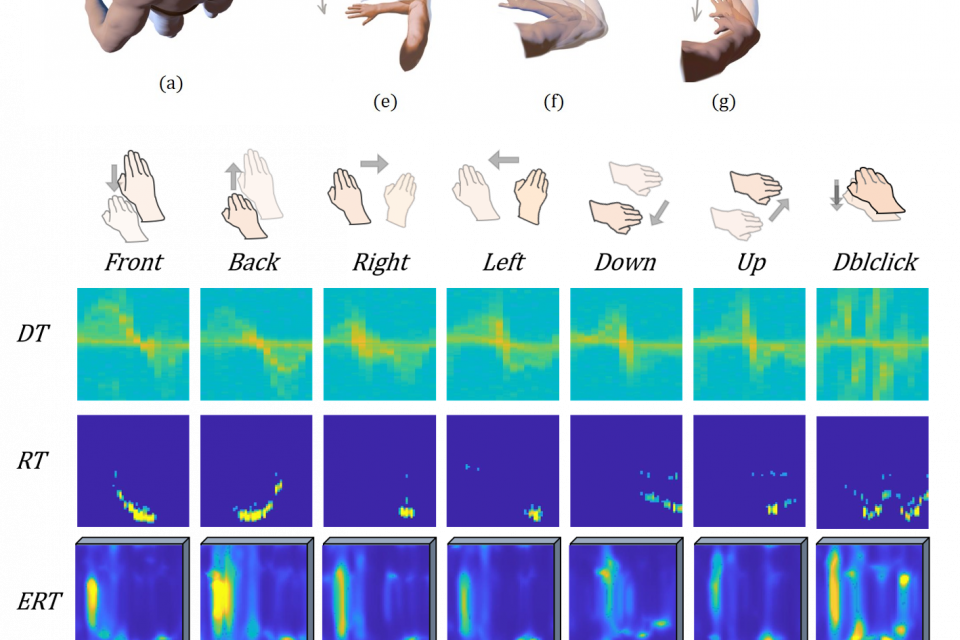

Each sample contains five features: DT, RT, RDT, ERT, and ART. The DT and RT features are 64×64 2D matrices, the RDT feature is a 64×64×12 3D matrix, and the ERT and ART features are 91×64×12 3D matrices. The data visualization is shown in Figure 10, where ERT, ART, and RDT are the results of multi-frame superposition. For intuitive human-computer interaction, seven general gestures are designed: waving up, waving down, waving left, waving right, waving forward, waving backward, and double-tap. Combinations of these gestures can realize most human-computer interaction actions.
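The feature shapes listed above can be written down as a small lookup table, which is handy for sanity-checking a loaded sample and for estimating its size. This is a sketch under assumptions: the dictionary keys, the gesture label strings, and the `check_shapes` helper are illustrative names, and the storage format and element type of the released files are not stated in this description.

```python
import math

# Feature shapes as stated in the description; key names are illustrative.
EXPECTED_SHAPES = {
    "DT": (64, 64),
    "RT": (64, 64),
    "RDT": (64, 64, 12),
    "ERT": (91, 64, 12),
    "ART": (91, 64, 12),
}

# The seven gesture classes; the label strings are an assumption.
GESTURES = [
    "waving up", "waving down", "waving left", "waving right",
    "waving forward", "waving backward", "double-tap",
]


def check_shapes(shapes):
    """Raise ValueError if a feature is missing or has an unexpected shape.

    `shapes` maps feature name to a shape tuple.
    """
    for name, expected in EXPECTED_SHAPES.items():
        if name not in shapes:
            raise ValueError(f"missing feature: {name}")
        if tuple(shapes[name]) != expected:
            raise ValueError(
                f"{name}: expected {expected}, got {tuple(shapes[name])}"
            )
    return True


# Number of scalar values in one sample across all five features.
values_per_sample = sum(math.prod(s) for s in EXPECTED_SHAPES.values())
```

If a sample is held as a dict of NumPy arrays, the check could be called as `check_shapes({k: v.shape for k, v in sample.items()})`.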
