AMagPoseNet

- Citation Author(s):

- Shijian Su (CUHK), Sishen Yuan (CUHK), Mengya Xu (NUS), Huxin Gao (NUS), Hongliang Ren (CUHK)

- Submitted by:

- shijian su

- Last updated:

- DOI:

- 10.21227/2d00-td57

- Data Format:

344 views


- Categories:

- Keywords:

Abstract

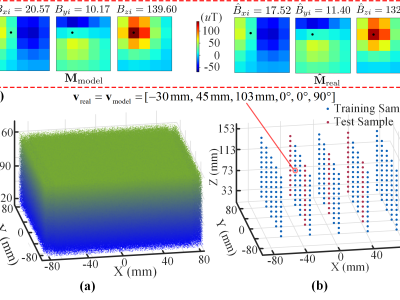

Traditional magnetic tracking approaches based on mathematical models and optimization algorithms are computationally intensive, depend on initial guesses, and do not guarantee convergence to a global optimum. Although fully-supervised data-driven deep learning can solve the above issues, the demand for a comprehensive dataset hampers its applicability in magnetic tracking. Thus, we propose an annular magnet pose estimation network (called AMagPoseNet) based on dual-domain few-shot learning from a prior mathematical model, which consists of two sub-networks: PoseNet and CaliNet. PoseNet learns to estimate the magnet pose from the prior mathematical model, and CaliNet is designed to narrow the gap between the mathematical model domain (MMD) and the real-world domain (RWD). Experimental results reveal that AMagPoseNet outperforms the optimization-based method regarding localization accuracy (1.87±1.14 mm, 1.89±0.81°), robustness (non-dependence on initial guesses), and computational latency (2.08±0.02 ms). In addition, the six degrees of freedom (6-DoF) pose of the magnet could be estimated when discriminative magnetic field features are provided. With the assistance of the mathematical model, AMagPoseNet requires only a few real-world samples and has excellent performance, showing great potential for practical biomedical and industrial applications.

Instructions:

### Dataset for AMagPoseNet

Folder structure

.

├─generated dataset # please use the NumPy package to load the *.npy files

└─real-world dataset

File format

- Each row represents one sample

Sample format

- sensor1_x, sensor1_y, sensor1_z, sensor2_x, sensor2_y, sensor2_z, ..., sensor25_x, sensor25_y, sensor25_z, pos_x, pos_y, pos_z, alpha, beta, gamma, (Bt)
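The sample layout above can be split into its parts with a few NumPy slices. The following is a minimal sketch, not the authors' loading code: it assumes each file holds a 2-D array with one sample per row, 75 sensor columns (25 sensors × 3 axes), 6 pose columns, and possibly one trailing column for the optional `(Bt)` field. The helper name `split_samples` and the synthetic demo array are illustrative only.

```python
import numpy as np

N_SENSORS = 25             # sensor1 ... sensor25, per the sample format
N_FIELD = N_SENSORS * 3    # x, y, z reading for each sensor
N_POSE = 6                 # pos_x, pos_y, pos_z, alpha, beta, gamma


def split_samples(data):
    """Split a (n_samples, n_columns) array into its documented parts.

    Hypothetical helper: returns sensor readings, position, angles, and
    any trailing columns (e.g. the optional Bt value) as separate arrays.
    """
    data = np.asarray(data, dtype=float)
    if data.ndim != 2 or data.shape[1] < N_FIELD + N_POSE:
        raise ValueError("expected a 2-D array with at least 81 columns")
    field = data[:, :N_FIELD].reshape(-1, N_SENSORS, 3)  # (n, 25, 3)
    position = data[:, N_FIELD:N_FIELD + 3]              # pos_x, pos_y, pos_z
    angles = data[:, N_FIELD + 3:N_FIELD + N_POSE]       # alpha, beta, gamma
    extra = data[:, N_FIELD + N_POSE:]                   # optional columns
    return field, position, angles, extra


# Synthetic stand-in for a real file; with the dataset one would call
# np.load on a *.npy file from the "generated dataset" folder instead.
demo = np.arange(2 * 82, dtype=float).reshape(2, 82)
field, position, angles, extra = split_samples(demo)
print(field.shape, position.shape, angles.shape, extra.shape)
```

Reshaping the sensor block to `(n, 25, 3)` keeps each sensor's three axes together, which is convenient for per-sensor normalization or plotting. If a file has exactly 81 columns, `extra` is simply an empty `(n, 0)` array.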
