SOTIF PCOD

- Citation Author(s):

- Submitted by: Milin Patel

- Last updated:

- DOI: 10.21227/j43q-z578

- Data Format:

122 views


- Categories:

- Keywords:

Abstract

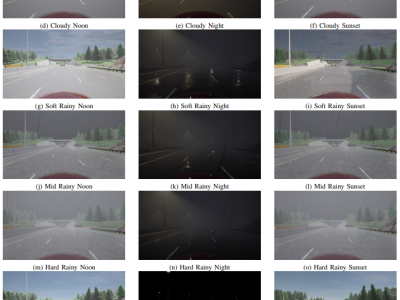

Safety of the Intended Functionality (SOTIF) addresses sensor performance limitations and deep learning-based object detection insufficiencies to ensure the intended functionality of Automated Driving Systems (ADS). This paper presents a methodology for evaluating the adaptability and performance of 3D object detection methods on a LiDAR point cloud dataset generated by simulating a SOTIF-related Use Case. The major contributions of this paper are defining and modeling a SOTIF-related Use Case with 21 diverse weather conditions and generating a LiDAR point cloud dataset suitable for the application of 3D object detection methods. The dataset consists of 547 frames covering clear, cloudy, and rainy weather conditions at different times of the day: noon, sunset, and night. Using the MMDetection3D and OpenPCDet toolkits, the performance of State-of-the-Art (SOTA) 3D object detection methods is evaluated and compared by testing pre-trained Deep Learning (DL) models on the generated dataset with the Average Precision (AP) and Recall metrics.
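As a rough illustration of the two metrics named in the abstract, the sketch below computes Recall and an all-point interpolated Average Precision from a list of scored detections. It is a minimal sketch under stated assumptions, not the evaluation code of MMDetection3D or OpenPCDet: the function name `average_precision_and_recall` and its inputs are hypothetical, and the matching of detections to ground-truth boxes (for example by an IoU threshold) is assumed to have been done beforehand. Benchmark-style evaluations often sample precision at fixed recall positions instead, so the numbers would differ.

```python
def average_precision_and_recall(scores, is_true_positive, num_ground_truth):
    """Return (AP, recall) for one object class.

    scores            -- confidence of each detection (hypothetical input)
    is_true_positive  -- True if the detection was matched to a ground-truth box
                         (matching is assumed to be done upstream)
    num_ground_truth  -- number of ground-truth boxes for the class
    """
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")

    # Rank detections from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    tp = fp = 0
    precisions, recalls = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)

    # Make precision monotonically non-increasing (precision envelope).
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])

    # Area under the interpolated precision-recall curve.
    ap = 0.0
    previous_recall = 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - previous_recall) * p
        previous_recall = r

    final_recall = recalls[-1] if recalls else 0.0
    return ap, final_recall


if __name__ == "__main__":
    # Toy example: four detections, four ground-truth vehicles.
    ap, recall = average_precision_and_recall(
        scores=[0.9, 0.8, 0.7, 0.6],
        is_true_positive=[True, False, True, True],
        num_ground_truth=4,
    )
    print(f"AP={ap:.3f} recall={recall:.2f}")
```

Computing these values separately for each weather condition would make it possible to compare how a pre-trained model degrades between, for example, clear and heavy-rain frames.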

Instructions:

In the SOTIF-related use case, a diverse range of weather conditions is considered to test the functionality of the LiDAR sensor mounted on the Ego-Vehicle, which operates on a multi-lane highway. The conditions are grouped by time of day (Noon, Night, and Sunset) to simulate different driving scenarios. For each time of day, seven specific weather conditions are defined, giving a comprehensive dataset for testing.

At Noon, the weather conditions include ClearNoon, representing ideal visibility; CloudyNoon, introducing diffused lighting; WetNoon, featuring reflective lane surfaces due to moisture; WetCloudyNoon, a combination of moisture and diffused lighting; MidRainyNoon, representing moderate rainfall; HardRainNoon, simulating heavy rainfall; and SoftRainNoon, for light rainfall scenarios.

During the Night, the conditions are similar but adjusted for the absence of natural light: ClearNight, CloudyNight, WetNight, WetCloudyNight, MidRainyNight, HardRainNight, and SoftRainNight. These conditions test the LiDAR sensor's performance in darkness, with varying degrees of precipitation and surface wetness.

At Sunset, the dataset includes ClearSunset, CloudySunset, WetSunset, WetCloudySunset, MidRainSunset, HardRainSunset, and SoftRainSunset. These conditions are designed to challenge the LiDAR sensor under the low-angle lighting of sunset, combined with different weather phenomena.

This detailed categorization of weather conditions across different times of day is intended to verify that the Ego-Vehicle can accurately detect surrounding vehicles with its LiDAR sensor and respond to them under a wide range of environmental conditions, thereby maintaining safety and reliability without requiring driver intervention.
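The 21 conditions listed above can be collected into a simple lookup table, which may be convenient when iterating over the dataset or grouping results by time of day. The condition names are copied from the description; the dictionary `WEATHER_BY_TIME` and the helper `all_conditions` are hypothetical names introduced only for illustration, and no particular folder layout or file naming of the dataset is assumed.

```python
# Condition names are taken from the description above.
# The structure and helper below are illustrative, not part of the dataset tooling.
WEATHER_BY_TIME = {
    "Noon": [
        "ClearNoon", "CloudyNoon", "WetNoon", "WetCloudyNoon",
        "MidRainyNoon", "HardRainNoon", "SoftRainNoon",
    ],
    "Night": [
        "ClearNight", "CloudyNight", "WetNight", "WetCloudyNight",
        "MidRainyNight", "HardRainNight", "SoftRainNight",
    ],
    "Sunset": [
        "ClearSunset", "CloudySunset", "WetSunset", "WetCloudySunset",
        "MidRainSunset", "HardRainSunset", "SoftRainSunset",
    ],
}


def all_conditions():
    """Return a flat list of (time_of_day, condition_name) pairs."""
    return [
        (time_of_day, name)
        for time_of_day, names in WEATHER_BY_TIME.items()
        for name in names
    ]


if __name__ == "__main__":
    pairs = all_conditions()
    print(f"{len(pairs)} conditions in total")
    for time_of_day, name in pairs:
        print(f"{time_of_day:>6}: {name}")
```

Keeping the time of day as an explicit key avoids parsing it back out of the condition name, which matters here because the mid-rain presets are not named uniformly (MidRainyNoon and MidRainyNight, but MidRainSunset).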
