Radar-based Hand Gestures Sensed through Multiple Materials

- Submitted by: Arthur Sluyters

- Last updated: Mon, 09/30/2024 - 12:16

- DOI: 10.21227/ay3p-sh70

Abstract

Radar signals can penetrate non-conducting materials, such as glass, wood, and plastic, which could enable the recognition of user gestures in environments with poor visibility, occlusion, limited accessibility, or privacy sensitivity. This dataset contains nine gestures from 32 participants, recorded with a radar through three different materials (wood, glass, and PVC) to explore the feasibility of sensing gestures through materials.

Introduction

The dataset is composed of raw and preprocessed gesture recordings. It consists of nine frequent gestures selected based on our experience with radar-based gestures:

- Open hand

- Close hand

- Swipe right

- Swipe left

- Draw infinity

- Push fist

- Push palm

- Pull palm

- Knock three times

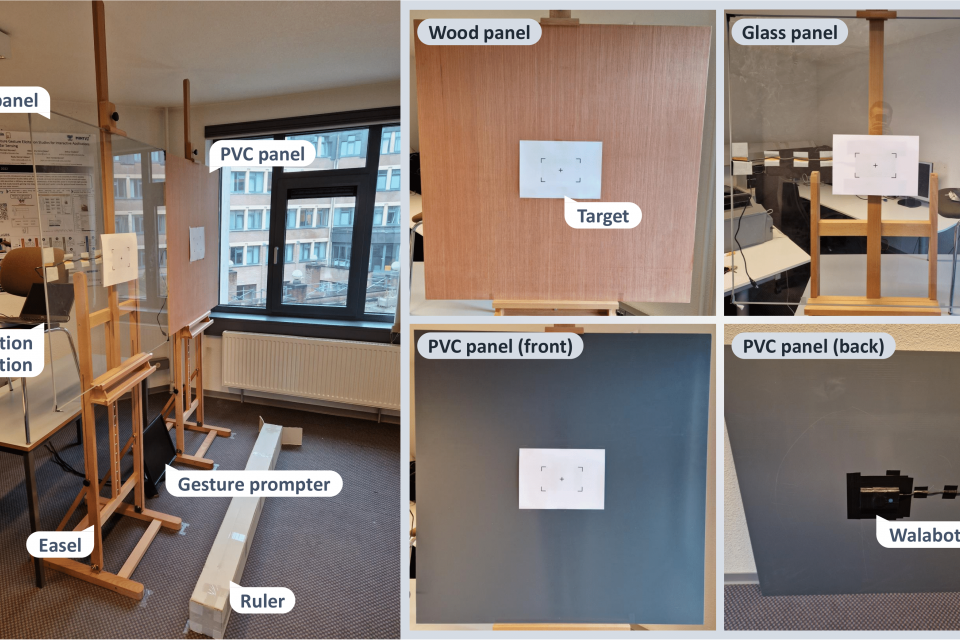

All gestures were recorded with three Walabot Developer devices, each attached to the back of a 1 m x 1 m plate of a different material:

- Wood (1.7 cm thick)

- PVC (0.9 cm thick)

- Glass (0.5 cm thick)

Each material was placed on an easel with its height adjusted so that the participant's right hand faced the Walabot with their arm extended. The distance between the participant's hand and the material was 20 cm.

The Walabots were configured with the PROF_SENSOR_NARROW profile: a 6.3-8 GHz frequency range, 12 antenna pairs, 4096 fast-time samples per frame, and a recording rate of about 41 fps. Each frame was then truncated to keep only the first 1024 of its 4096 fast-time samples.

Participants performed gestures while standing in front of the material. The recording procedure was as follows: the gesture was first shown to the participant; then, for each sample,

- The participant starts with their arms alongside their body

- The recording is started

- The participant produces the gesture

- The participant places their arms back alongside their body

- The recording is stopped and the data saved to a text file.

This action sequence was repeated for each repetition of each gesture and each material.

Each gesture was performed five times by 32 participants in a controlled environment, resulting in a total of 9 (gestures) x 32 (participants) x 5 (repetitions) = 1440 samples per type of material.
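The count above can be reproduced with a short sketch, which may also help when checking that a downloaded copy is complete. The participant and repetition numbering (starting at 1) and the constant names are assumptions made for illustration only; the archives may use different identifiers.

```python
from itertools import product

GESTURES = [
    "Open hand", "Close hand", "Swipe right", "Swipe left", "Draw infinity",
    "Push fist", "Push palm", "Pull palm", "Knock three times",
]
MATERIALS = ["wood", "PVC", "glass"]
N_PARTICIPANTS = 32
N_REPETITIONS = 5


def expected_samples_per_material():
    """Enumerate every (gesture, participant, repetition) combination.

    Numbering from 1 is an assumption, not taken from the dataset.
    """
    return list(product(
        GESTURES,
        range(1, N_PARTICIPANTS + 1),
        range(1, N_REPETITIONS + 1),
    ))


per_material = len(expected_samples_per_material())
total = per_material * len(MATERIALS)
print(per_material, total)  # 1440 4320
```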

"Raw radar data.zip" archive

Directory structure

- USER_ID_1
  - antennapairs.out: list of antenna pairs used for the recording
  - WalabotSignal_User_USER_ID_1_Gesture_GESTURE_ID_1-SAMPLE_ID_1.out
  - ...
  - WalabotSignal_User_USER_ID_1_Gesture_GESTURE_ID_9-SAMPLE_ID_5.out
- ...
- USER_ID_N
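As a minimal sketch, the recording file names listed above could be split into their user, gesture, and sample identifiers with a regular expression. The pattern is inferred only from the naming scheme shown here, and the concrete form of the identifiers (numeric or otherwise) is an assumption; `parse_recording_name` and `RecordingId` are hypothetical helper names.

```python
import re
from typing import NamedTuple, Optional

# Assumed pattern, inferred from the file names in the directory listing.
FILENAME_RE = re.compile(
    r"^WalabotSignal_User_(?P<user>.+)_Gesture_(?P<gesture>.+)-(?P<sample>[^-]+)\.out$"
)


class RecordingId(NamedTuple):
    user: str
    gesture: str
    sample: str


def parse_recording_name(filename: str) -> Optional[RecordingId]:
    """Return the identifiers encoded in a recording file name.

    Returns None for files that do not follow the pattern,
    such as antennapairs.out.
    """
    match = FILENAME_RE.match(filename)
    if match is None:
        return None
    return RecordingId(match["user"], match["gesture"], match["sample"])


# Hypothetical file name, used only to illustrate the call.
example = parse_recording_name("WalabotSignal_User_3_Gesture_7-2.out")
```

Identifiers are kept as strings so that the sketch does not depend on how the archive actually encodes them.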

Out files structure

Each .out file contains time-domain data: 1024 fast-time samples per frame, 12 pairs of antennas, and a variable number of frames per gesture. Gestures are recorded at about 40 frames per second.

Each file consists of a set of lines, where each line has 2 columns. The first column is the fast time and the second column is the amplitude of the signal measured at that time.

Lines are ordered as follows:

- FRAME_1_ANTENNA_PAIR_1_FT_1

- ...

- FRAME_1_ANTENNA_PAIR_1_FT_1024

- ...

- ...

- FRAME_1_ANTENNA_PAIR_12_FT_1

- ...

- FRAME_1_ANTENNA_PAIR_12_FT_1024

- ...

- ...

- FRAME_N_ANTENNA_PAIR_1_FT_1

- ...

- FRAME_N_ANTENNA_PAIR_1_FT_1024

- ...

- ...

- FRAME_N_ANTENNA_PAIR_12_FT_1

- ...

- FRAME_N_ANTENNA_PAIR_12_FT_1024
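Given the line ordering above (frames first, then antenna pairs, then fast-time samples), a file can be reshaped into a three-dimensional array. The sketch below uses NumPy and assumes the two columns are separated by whitespace, which the description does not state; `load_out_file` is a hypothetical helper, and the small demo at the end uses synthetic values rather than real recordings.

```python
import io

import numpy as np


def load_out_file(source, n_pairs=12, n_fast_time=1024):
    """Load a two-column .out file into (fast_time, amplitude) arrays.

    amplitude has shape (n_frames, n_pairs, n_fast_time).
    Assumption: columns are whitespace-separated.
    """
    data = np.loadtxt(source, ndmin=2)
    if data.shape[1] != 2:
        raise ValueError("expected exactly 2 columns per line")
    rows_per_frame = n_pairs * n_fast_time
    if data.shape[0] % rows_per_frame != 0:
        raise ValueError("line count is not a whole number of frames")
    # Rows are ordered frame -> antenna pair -> fast time, so a
    # row-major reshape recovers the three axes directly.
    amplitude = data[:, 1].reshape(-1, n_pairs, n_fast_time)
    # Fast-time axis taken from the first antenna pair of the first frame
    # (assumed to be the same for every pair and frame).
    fast_time = data[:n_fast_time, 0]
    return fast_time, amplitude


# Synthetic demo: 2 frames, 3 antenna pairs, 4 fast-time samples.
demo_lines = [
    f"{k * 0.5} {f * 100 + p * 10 + k}"
    for f in range(2) for p in range(3) for k in range(4)
]
demo_ft, demo_amp = load_out_file(
    io.StringIO("\n".join(demo_lines)), n_pairs=3, n_fast_time=4
)
```

For a real recording, the defaults (12 pairs, 1024 samples) match the figures given above, and `source` can be a file path.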

"Quantumleap data.zip" archive

Background Subtraction

Directory structure

- GESTURE_ID_1
  - USER_ID_1
    - GESTURE_ID_1-SAMPLE_ID_1.json
    - ...
    - GESTURE_ID_1-SAMPLE_ID_10.json
  - ...
  - USER_ID_N
- ...
- GESTURE_ID_9

JSON files structure

- "name": "GESTURE_ID",
- "subject": "USER_ID",
- "paths": a list of path elements, one per pair of antennas
  - "label": "ANTENNA_PAIR_NAME"
  - "strokes": a list containing one stroke element
    - "id": STROKE_ID
    - "points": a list of point elements, one per frame
      - "coordinates": frequency-domain signal, as a vector. The first half of the vector represents the real part of the complex signal. The second half represents the imaginary part of the complex signal.
      - "t": "FRAME_ID"
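Because "coordinates" stores the real part in the first half of the vector and the imaginary part in the second half, the complex signal has to be reassembled before use. The following is a minimal sketch with NumPy; `decode_coordinates` and `path_to_frames` are hypothetical helper names, and the sketch assumes that each path element is a dictionary with the keys described above and that points are stored in frame order.

```python
import numpy as np


def decode_coordinates(coordinates):
    """Rebuild the complex frequency-domain vector of one frame.

    First half of the input: real parts. Second half: imaginary parts.
    """
    values = np.asarray(coordinates, dtype=float)
    if values.ndim != 1 or values.size % 2 != 0:
        raise ValueError("expected a flat vector of even length")
    half = values.size // 2
    return values[:half] + 1j * values[half:]


def path_to_frames(path):
    """Stack the frames of one antenna pair into a (n_frames, n_bins) array.

    Assumes the single stroke holds one point per frame, in stored order.
    """
    points = path["strokes"][0]["points"]
    return np.stack([decode_coordinates(p["coordinates"]) for p in points])


# Synthetic path element, used only to illustrate the layout.
demo_path = {
    "label": "PAIR_A",
    "strokes": [{"id": 0, "points": [
        {"coordinates": [1.0, 2.0, 3.0, 4.0], "t": "0"},
        {"coordinates": [5.0, 6.0, 7.0, 8.0], "t": "1"},
    ]}],
}
demo_frames = path_to_frames(demo_path)
```

With a real file, the same functions could be applied to each element of "paths" after reading the file with `json.load`.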

Filtering

Directory structure

- GESTURE_ID_1
  - USER_ID_1
    - GESTURE_ID_1-SAMPLE_ID_1.json
    - ...
    - GESTURE_ID_1-SAMPLE_ID_10.json
  - ...
  - USER_ID_N
- ...
- GESTURE_ID_9

JSON files structure

- "name": "GESTURE_ID",
- "subject": "USER_ID",
- "paths": a list of path elements, one per pair of antennas
  - "label": "ANTENNA_PAIR_NAME"
  - "strokes": a list containing one stroke element
    - "id": STROKE_ID
    - "points": a list of point elements, one per frame
      - "x": distance [m]
      - "y": relative permittivity
      - "t": "FRAME_ID"
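To illustrate how the filtered files might be consumed, the sketch below collects the per-frame "x" (distance) and "y" (relative permittivity) values of each antenna pair into NumPy arrays. It assumes that "paths" is a list of dictionaries as described above and that "x" and "y" are single numbers per point; `sample_to_series` is a hypothetical helper, and the JSON text is synthetic.

```python
import json

import numpy as np


def sample_to_series(sample):
    """Map each antenna-pair label to its (x, y) series over frames.

    Assumes one stroke per path and scalar "x" and "y" values per point.
    """
    series = {}
    for path in sample["paths"]:
        points = path["strokes"][0]["points"]
        x = np.array([p["x"] for p in points], dtype=float)
        y = np.array([p["y"] for p in points], dtype=float)
        series[path["label"]] = (x, y)
    return series


# Synthetic sample following the structure described above.
demo_text = """
{
  "name": "GESTURE_ID",
  "subject": "USER_ID",
  "paths": [
    {"label": "PAIR_A",
     "strokes": [{"id": 0, "points": [
        {"x": 0.20, "y": 2.5, "t": "0"},
        {"x": 0.18, "y": 2.7, "t": "1"}
     ]}]}
  ]
}
"""
demo_series = sample_to_series(json.loads(demo_text))
demo_x, demo_y = demo_series["PAIR_A"]
```

A real file would be read with `json.load` on an open file handle instead of `json.loads` on a string.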