Public Perceptions of Artificial Intelligence in Cultural and Media Content: A Field Study of 500 Arab Participants

- Submitted by: Wael Maged Badawy

- Last updated: Thu, 04/10/2025 - 14:38

- DOI: 10.21227/4jx1-a339

Abstract

As artificial intelligence (AI) technologies rapidly integrate into cultural and media content production, questions arise about how the Arab public perceives, trusts, and engages with AI-generated content. This study investigates the perceptions of 500 participants from across the Arab world through a structured survey focusing on awareness, trust, cultural values, and the influence of algorithms on media behavior. Results show a clear discrepancy between the high algorithmic influence on user behavior and the low levels of trust in AI-generated content. Moreover, the findings reveal substantial concerns about cultural identity preservation and the authenticity of AI content. This paper concludes with practical recommendations for ensuring responsible use of AI in Arab media ecosystems.

Keywords: Artificial Intelligence, Cultural Content, Media Trust, Deepfake, Algorithmic Bias, Arab Audience, Digital Awareness

1. Introduction

The integration of artificial intelligence into content production has reshaped journalism, cultural programming, digital storytelling, and media recommendation systems. Generative tools such as ChatGPT, Midjourney, and Sora now produce full articles, images, and video that simulate human creativity with striking realism, while recommendation algorithms suggest content based on user behavior.

However, these advances raise pressing questions. How does the public perceive this shift? Do people trust AI-generated content, and how do they evaluate its cultural authenticity? This study addresses these questions with survey evidence gathered from Arab users, offering insight into AI's social and ethical footprint in regional media.

2. Methodology

- Approach: Descriptive-analytical, using quantitative and qualitative survey techniques.

- Sample: 500 participants from 7 Arab countries.

- Age groups: Under 18 to over 45 years.

- Domains: Media, Education, IT, and Other fields.

- Instrument: An 8-question online survey covering demographics, media trust, and AI awareness.

The survey responses were analyzed in Python to calculate answer frequencies, generate visualizations, and support interpretation of attitudes toward AI in cultural content.
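The analysis script itself is not part of this page, so the following is only a minimal sketch of how such a frequency calculation might look. It assumes the responses are available as a list of dictionaries; the field names `age_group` and `trusts_ai` are hypothetical, and the sample rows are illustrative placeholders, not the study's data.

```python
from collections import Counter


def frequency_table(responses, field):
    """Return {answer: (count, percent)} for one survey field."""
    counts = Counter(row[field] for row in responses)
    total = sum(counts.values())
    return {
        answer: (n, round(100 * n / total, 1))
        for answer, n in counts.most_common()
    }


# Illustrative placeholder rows (NOT the study's data);
# the field names are hypothetical.
sample = [
    {"age_group": "26-35", "trusts_ai": "no"},
    {"age_group": "26-35", "trusts_ai": "yes"},
    {"age_group": "18-25", "trusts_ai": "no"},
    {"age_group": "36-45", "trusts_ai": "no"},
]

table = frequency_table(sample, "trusts_ai")
for answer, (n, pct) in table.items():
    print(f"{answer}: {n} ({pct}%)")
```

The same function could be applied to each of the eight survey questions in turn to obtain percentages of the kind reported in the results.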

3. Results & Analysis

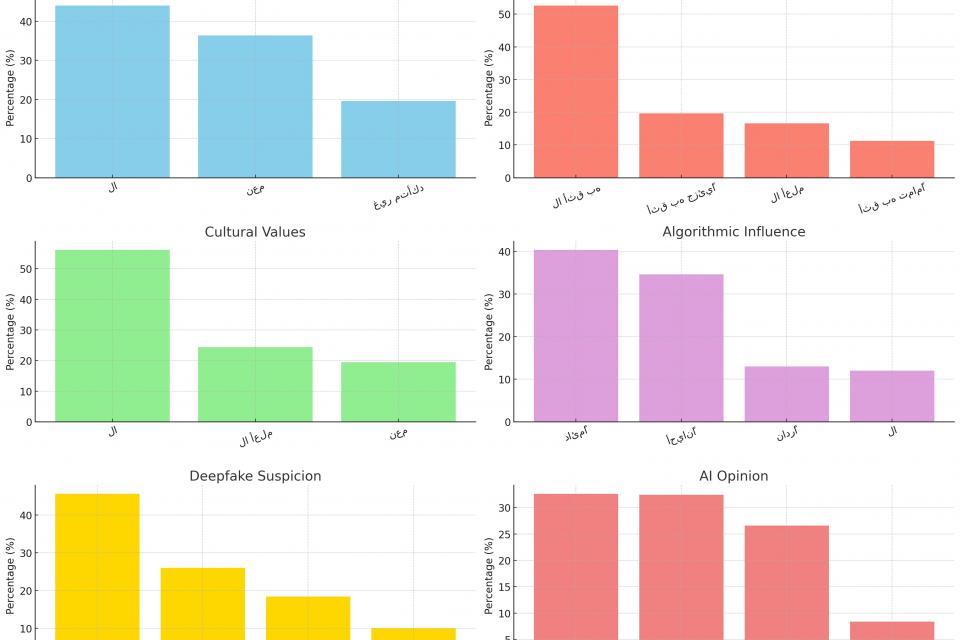

3.1. Participant Demographics

- Most common age group: 26–35 years (approx. 32%)

- Most common field: Media & Journalism (approx. 38%)

3.2. Trust and Awareness of AI Content

- 47% could not distinguish between human- and AI-generated content.

- 51% do not trust AI-generated media content.

- 56% believe AI does not preserve cultural values.

- 73% are influenced by algorithmic content suggestions on social media.
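Because the sample size is reported as 500, each percentage above can be converted into an approximate head count and a rough margin of error. The sketch below assumes that every percentage is computed over all 500 participants and that the sample behaves like a simple random sample. The source states neither, and online surveys often do not meet the second condition, so the intervals are illustrative only.

```python
import math

N = 500  # sample size reported in the Methodology section

# Percentages as reported in Section 3.2.
reported = {
    "cannot distinguish human from AI content": 47,
    "do not trust AI-generated media": 51,
    "believe AI does not preserve cultural values": 56,
    "influenced by algorithmic suggestions": 73,
}


def approx_count(pct, n=N):
    """Approximate number of respondents behind a percentage."""
    return round(pct * n / 100)


def margin_of_error(pct, n=N, z=1.96):
    """95% margin of error in percentage points (normal approximation)."""
    p = pct / 100
    return 100 * z * math.sqrt(p * (1 - p) / n)


summary = {
    label: (approx_count(pct), margin_of_error(pct))
    for label, pct in reported.items()
}
for label, (count, moe) in summary.items():
    print(f"{label}: ~{count} of {N}, {reported[label]}% +/- {moe:.1f} points")
```

Under these assumptions each figure carries an uncertainty of roughly four percentage points, which is worth keeping in mind for results that sit close to 50%.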

3.3. Deepfakes and Perceived Authenticity

- 70% of participants suspected AI involvement in media content at some point.

- 35% viewed AI as creative and helpful, while 25% saw it as a threat to cultural identity.

4. Discussion

The data reveal a cognitive dissonance: users are highly influenced by algorithmically suggested content (73%), yet a majority distrust AI-generated media (51%) and doubt that AI preserves cultural values (56%). That 47% could not distinguish AI from human output suggests a growing opacity about where content comes from, which makes it harder for the public to hold content producers accountable.
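The survey reports only aggregate percentages, so it does not say how many individual participants are both influenced by algorithmic suggestions and distrustful of AI-generated media. A simple bound can still be derived from the two reported figures (73% and 51% in Section 3.2). The sketch below assumes both percentages refer to the same 500 participants, which the source does not state explicitly.

```python
def min_overlap(pct_a, pct_b):
    """Smallest possible share (in %) of people who belong to both groups."""
    return max(0, pct_a + pct_b - 100)


def max_overlap(pct_a, pct_b):
    """Largest possible share (in %) of people who belong to both groups."""
    return min(pct_a, pct_b)


influenced = 73   # influenced by algorithmic suggestions (Section 3.2)
distrustful = 51  # do not trust AI-generated media (Section 3.2)

low = min_overlap(influenced, distrustful)
high = max_overlap(influenced, distrustful)
print(f"Both influenced and distrustful: between {low}% and {high}%")
```

Under that assumption, at least 24% of participants (about 120 people) must hold both positions at once, so the tension exists within individuals and not only between groups.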

Furthermore, concerns over cultural dilution and algorithmic bias highlight the urgency for localization and ethical development of AI systems in the Arab world.

5. Recommendations

To bridge the gap between innovation and public responsibility, this study proposes:

- Algorithmic Transparency: Platforms should reveal why content is suggested and give users control over recommendations.

- Cultural Data Sets & Ethical AI Training: Support the creation of Arab-language corpora for training culturally-aware AI.

- Legislative Reform: Develop copyright and data protection laws that define AI-generated content ownership and protect user privacy.

- Digital Media Literacy: Introduce AI-awareness in education, train journalists on AI tools, and raise public understanding of deepfakes.

- Independent Verification Platforms: Establish fact-checking systems that use AI to counter AI-generated misinformation.

6. Conclusion

AI has become a powerful tool in reshaping cultural and media content production. Yet, this power must be governed responsibly. The Arab public's concerns—reflected in this study—point to a critical need for transparent, ethical, and culturally respectful use of AI in media environments.

By aligning innovation with accountability, we can move toward a media future that balances creativity with identity, and automation with human values.
