AI-Based Secure NOMA and Cognitive Radio enabled Green Communications: Channel State Information and Battery Value Uncertainties

- Citation Author(s): Saeed Sheikhzadeh, Mohammad Reza Javan, Nader Mokari, Eduard A. Jorswieck

- Submitted by: Mohsen Pourghasemian


- DOI: 10.21227/d95p-qz45




Abstract


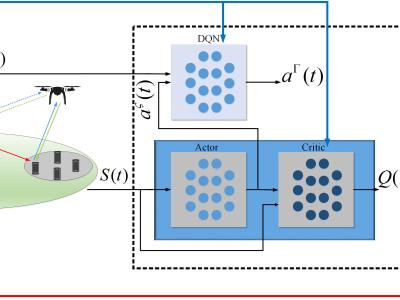

This repository includes implementations of the DDPG, MADDPG, MASRDDPG, and RDPG deep reinforcement learning algorithms from the paper "AI-Based Secure NOMA and Cognitive Radio enabled Green Communications: Channel State Information and Battery Value Uncertainties".

Instructions:

## Special thanks to Dr. Phil Winder ##

## Dependencies

- Python 3.6+ (tested with 3.6 and 3.7)

- tensorflow 1.15+

- gym

- numpy

- networkx

- matplotlib

# Minimum system requirements

- CPU: Intel Core i3 at 2.0 GHz.

- RAM: 4 GB.

# Preparing

All environments are built on top of the gym environment interface. There are 12 different environments in total, covering MASRDDPG, MADDPG, RDPG, and DDPG under three uncertainty approaches. The environment files in the repository are:

- "AI_GreenComm_TS_NOMA_W.py" (worst case)

- "AI_GreenComm_TS_NOMA_S.py" (stochastic)

- "AI_GreenComm_TS_NOMA_B.py" (Bernstein)

You need to add these environments to gym and register them. For a guide to creating and registering a custom gym environment, see:

https://towardsdatascience.com/creating-a-custom-openai-gym-environment-for-stock-trading-be532be3910e

After adding and registering these environments, the code is ready to run.
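As a rough sketch of the registration step, assuming the classic gym API (gym.envs.registration.register) and that the three environment files have been copied into an importable package, the snippet below registers one ID per uncertainty approach. The package name green_comm_envs, the class name GreenCommEnv, and the environment IDs are hypothetical placeholders; replace them with the actual module path and the gym.Env subclass defined in each file.

```python
from gym.envs.registration import register

# HYPOTHETICAL IDs and entry points: "green_comm_envs" and "GreenCommEnv" are
# placeholders. Point each entry_point at the module where you placed the file
# and at the gym.Env subclass that file actually defines.
ENVIRONMENTS = {
    "GreenCommNOMAWorstCase-v0": "green_comm_envs.AI_GreenComm_TS_NOMA_W:GreenCommEnv",
    "GreenCommNOMAStochastic-v0": "green_comm_envs.AI_GreenComm_TS_NOMA_S:GreenCommEnv",
    "GreenCommNOMABernstein-v0": "green_comm_envs.AI_GreenComm_TS_NOMA_B:GreenCommEnv",
}

for env_id, entry_point in ENVIRONMENTS.items():
    # The "module:Class" string is resolved lazily, only when gym.make(env_id) runs.
    register(id=env_id, entry_point=entry_point)
```

With these registrations, 'env_name' in gym.make('env_name') in main.py would become, for example, 'GreenCommNOMAWorstCase-v0'. Because the entry point is a "module:Class" string, gym imports the environment file only when gym.make is called. If each method directory ships its own copies of these files, give each copy a distinct ID.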

# Example Usage

To run a method, go to its directory (e.g., MASRDDPG or RDPG) and, in main.py, change 'env_name' in gym.make('env_name') to the name you registered in gym. Then run the following command:

$ python main.py

Each method writes text files containing the instantaneous and cumulative secrecy rate, energy consumption, reward, and other values to the "instant" and "cumulative" directories, respectively. These directories are not created by the code, so create them before running main.py.
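A minimal sketch for creating these directories from Python, assuming main.py writes to "instant" and "cumulative" relative to the directory it is run from. The helper name prepare_output_dirs is hypothetical and not part of the repository.

```python
import os

# Directory names taken from this README; the relative location is an assumption.
OUTPUT_DIRS = ("instant", "cumulative")


def prepare_output_dirs(base_dir="."):
    """Create the 'instant' and 'cumulative' directories under base_dir.

    Returns the list of directory paths. exist_ok=True means calling this
    again on directories that already exist is not an error.
    """
    created = []
    for name in OUTPUT_DIRS:
        path = os.path.join(base_dir, name)
        os.makedirs(path, exist_ok=True)
        created.append(path)
    return created
```

Calling prepare_output_dirs() from a method's directory before running python main.py has the same effect as creating the two folders by hand.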

Finally, you can plot the results by importing the text files into Excel or MATLAB.
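As an alternative to Excel or MATLAB, the results can also be plotted with numpy and matplotlib, which are already listed as dependencies. This sketch assumes each text file holds a single row or column of numbers. The function names, the example file name, and the moving-average smoothing are illustrative choices, not part of the repository.

```python
import numpy as np


def load_series(path):
    """Load one result file as a 1-D float array.

    Assumes a single column (or a single row) of numbers; the actual
    layout written by main.py may differ.
    """
    data = np.loadtxt(path, dtype=float, ndmin=1)
    if data.ndim != 1:
        raise ValueError("expected a single row or column of numbers in " + path)
    return data


def moving_average(values, window=10):
    """Smooth a noisy per-step curve with a simple moving average."""
    values = np.asarray(values, dtype=float)
    if not 1 <= window <= values.size:
        raise ValueError("window must be between 1 and len(values)")
    kernel = np.ones(window) / window
    # 'valid' keeps only fully overlapping positions: len(values) - window + 1 points.
    return np.convolve(values, kernel, mode="valid")


def plot_series(path, label, window=10, out_file="plot.png"):
    """Plot the raw series and its moving average, then save to out_file."""
    # Agg canvas renders straight to a file, so no display is required.
    from matplotlib.figure import Figure
    from matplotlib.backends.backend_agg import FigureCanvasAgg

    raw = load_series(path)
    smooth = moving_average(raw, window)
    fig = Figure()
    FigureCanvasAgg(fig)
    ax = fig.add_subplot(111)
    ax.plot(raw, alpha=0.3, label=label)
    # The 'valid' convolution drops window - 1 leading points, so shift the x axis.
    ax.plot(np.arange(window - 1, raw.size), smooth,
            label="{} ({}-point average)".format(label, window))
    ax.set_xlabel("step")
    ax.set_ylabel(label)
    ax.legend()
    fig.savefig(out_file)
```

For example, plot_series("instant/reward.txt", "reward", out_file="reward.png") would save a plot, where "instant/reward.txt" is a hypothetical path to be replaced with an actual file from the "instant" directory.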