Dataset Entries from this Author

The CAD-EdgeTune dataset was acquired with a Husarion ROSbot 2.0 and a ROSbot 2.0 Pro in a suburban university environment, at a collection rate of 5 frames per second. The data can be divided into noon, dusk, and dawn subsets that capture the surroundings under different lighting conditions. It comprises 17 sequences totaling 8080 frames, of which 1619 have been manually annotated with an open-source pixel annotation program. Because neighboring frames are highly similar to one another, only every fifth image is annotated.
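The every-fifth-frame selection described above can be sketched as follows. This is only an illustration: the function name, the zero-based indexing, and the choice to start at the first frame of a sequence are assumptions, since the description does not state the exact selection rule.

```python
def select_frames_to_annotate(num_frames, step=5):
    """Return zero-based indices of the frames picked for annotation.

    Assumption: selection starts at the first frame of a sequence and
    takes every `step`-th frame after it.
    """
    return list(range(0, num_frames, step))


# Hypothetical sequence length, for illustration only.
picked = select_frames_to_annotate(23)
print(picked)  # [0, 5, 10, 15, 20]
```

Under this assumption, 8080 frames treated as one run would give 1616 annotated frames. Applying the rule to each of the 17 sequences separately can add a few frames at sequence boundaries, which is consistent with the 1619 reported.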

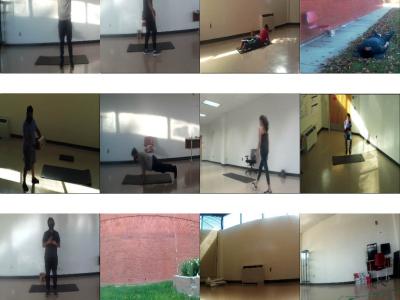

Deep video representation learning has recently attained state-of-the-art performance in video action recognition (VAR). However, the performance of these models degrades significantly when they are applied to video clips captured from varied viewpoints. The representations learned by existing VAR models often entangle view information with action attributes, which makes it difficult to learn a view-invariant representation.
