Standard Dataset

Dataset of BPNN-based Image Restoration Algorithm Optimized using Hybrid Genetic Algorithm

- Submitted by: Qiqi Gao

- Last updated: Tue, 07/18/2023 - 08:16

- DOI: 10.21227/1te5-d856


Abstract

This dataset comprises two sets of test results: one for 10 sample images and one for an artificial image. Backpropagation neural networks (BPNNs) can be used to restore images; however, the error surface of the BPNN algorithm contains several extrema, so training easily becomes trapped in a locally optimal solution. A genetic algorithm (GA) with strong global search capability can optimize the initial weights and thresholds of BPNNs. However, traditional GAs are prone to local convergence and stagnation; hence, we propose a hybrid GA. First, the hybrid GA introduces an elite opposition-based learning strategy to increase population diversity and avoid premature convergence. Second, the firefly algorithm updates mutated individuals twice, which strengthens the search in the vicinity of the optimal solution. The results show that the BPNN-based image restoration algorithm optimized using the improved genetic algorithm (IGABPR) outperforms both the BPNN-based image restoration (BPR) algorithm and the BPNN-based image restoration algorithm optimized using the genetic algorithm (GABPR) in terms of the PSNR and MSE metrics.
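For readers unfamiliar with the two metrics named in the abstract, the conventional definitions are sketched below. This page does not state which exact form the paper uses, so the symbols here are assumptions: $f$ is the reference image, $\hat{f}$ is the restored image, both of size $M \times N$, and $\mathrm{MAX}$ is the peak pixel value (255 for 8-bit images).

```latex
\mathrm{MSE} = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \bigl( f(i,j) - \hat{f}(i,j) \bigr)^{2},
\qquad
\mathrm{PSNR} = 10 \log_{10} \frac{\mathrm{MAX}^{2}}{\mathrm{MSE}} \ \text{dB}
```

Under these definitions a lower MSE and a higher PSNR both indicate a restoration closer to the reference image.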

Instructions for accessing the dataset in MATLAB are given below:

- %% ---------Description of paper data supporting documents---------

- %% 5.1 Comparison of the optimization results of GA and IGA for BPNNs

- %% Program1: Paper_2GA_PK_all_image_multi_run_static.m

- % Table 2 Comparison of GABP and IGABP fitness values

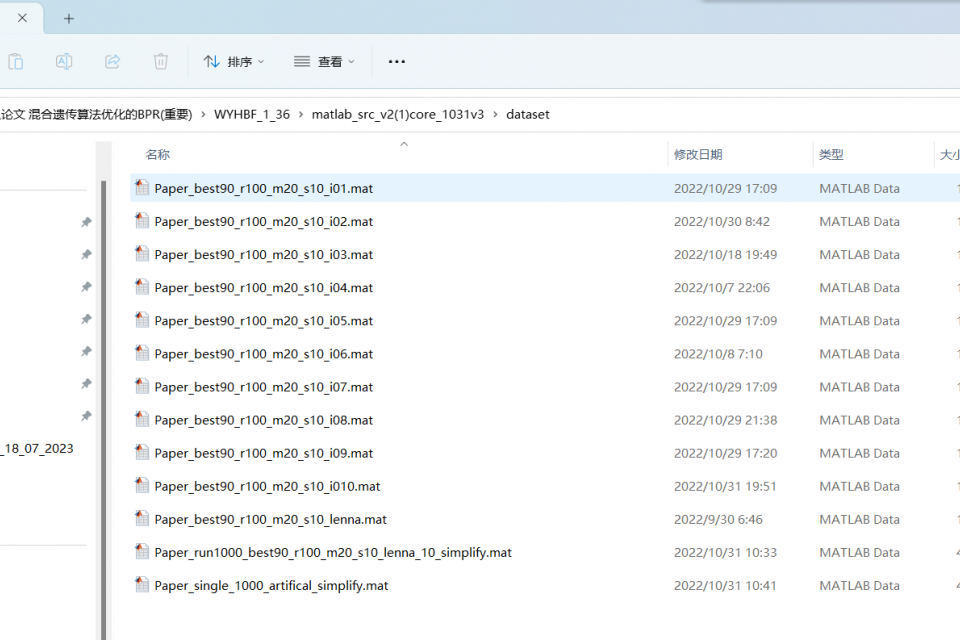

- % Table 2 is based on the dataset files Paper_best90_r100_m20_s10_i01.mat through Paper_best90_r100_m20_s10_i10.mat

- % These .mat files are stored in the dataset folder

- % The program generates the data for Table 2 row by row and then assembles the full table

- % After running, see result/Table 2.xls for the data

- %% Program2: Paper_2GA_PK_one_image_multi_run_dynamic.m

- % Each row of Table 2 is generated by this file. Each run produces one row, so the 10 rows require 10 runs, and the sample image must be specified before each run.

- % The plotting data for Figure 6 and Figure 7 are generated by this file

- % All results of this program are stored in .mat files, for example Paper_best90_r100_m20_s10_i01.mat

- %% 5.2 Comparison of the effects of three image restoration algorithms

- %% Program1: Paper_3BPR_PK_90_c100_m20_s10_lenna_static.m

- % The data for Table 3, Table 4, Figure 6, Figure 7, Figure 8, and Figure 9 are generated by this file

- % The program uses the result dataset file Paper_run1000_best90_100_m20_s10_lenna_10.mat

- % The generated results are stored in the result folder

- %% Program2: Paper_3BPR_PK_90_c100_m20_s10_lenna_dynamic.m

- % This file generates both the data and the images for Table 3, Table 4, Figure 6, Figure 7, Figure 8, and Figure 9

- %% Program3: Paper_single_1000_artifical_static.m

- % Table 5, Figure 10, and Figure 11 are generated directly from the datasets, without a training process

- % Table 5. Artificial image restoration effects

- % Figure 10. PSNR Comparison

- % Figure 11. MSE Comparison

- %% Program4: Paper_single_1000_artifical_dynamic.m

- % Table 5, Figure 10, and Figure 11 are generated from 1000 training runs
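Before running the programs above, it can help to check that the Table 2 result files are present and to see what they contain. The following is a minimal MATLAB sketch, not part of the published scripts. It assumes the archives have been extracted so that the .mat files sit in a folder named dataset relative to the current directory, and it makes no assumption about the variable names stored in each file: it only lists them.

```matlab
% Minimal sketch: list the variables stored in each Table 2 result file.
% Assumption: the .mat files were extracted into ./dataset (adjust dataDir if not).
dataDir = 'dataset';

for k = 1:10
    % Build Paper_best90_r100_m20_s10_i01.mat ... Paper_best90_r100_m20_s10_i10.mat
    fname = fullfile(dataDir, sprintf('Paper_best90_r100_m20_s10_i%02d.mat', k));

    if ~isfile(fname)   % isfile requires MATLAB R2017b or later
        fprintf('Missing: %s\n', fname);
        continue;
    end

    % Inspect the file contents without loading the data into the workspace
    info = whos('-file', fname);
    fprintf('%s contains %d variable(s):\n', fname, numel(info));
    for v = 1:numel(info)
        fprintf('  %-24s %-10s [%s]\n', ...
            info(v).name, info(v).class, num2str(info(v).size));
    end
end
```

Once the variable names are known, a single file can be loaded into a struct with `S = load(fname);` so that its contents do not overwrite variables already in the workspace.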

Dataset Files

- dataset1.zip (13.53 MB)

- dataset2.zip (7.84 MB)

- script.zip (11.36 MB)