A Wearable Vision-based System for Fall Detection

- Citation Author(s):

- Hao-Chun Wang, Edward T.-H Chu

- Submitted by:

- Hao-Chun Wang

- Last updated:

- DOI:

- 10.21227/8rj8-8x87

49 views


- Categories:

- Keywords:

Abstract

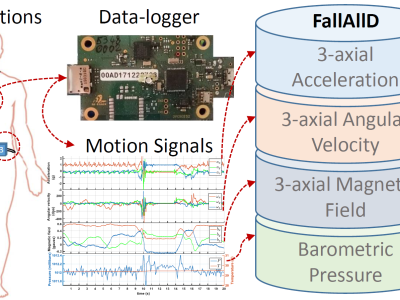

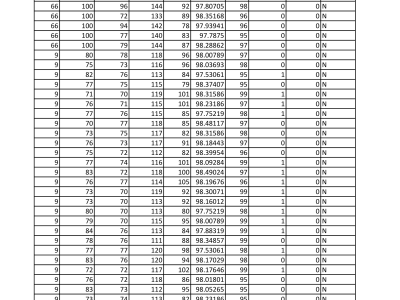

The CSV files in the ZIP archive are analytical datasets extracted and processed from the RUG-EGO-FALL dataset. They are intended to support fall detection research using wearable first-person perspective devices. For each video frame, the data records visual motion information computed using ORB (Oriented FAST and Rotated BRIEF) feature points in combination with the Lucas-Kanade optical flow method.

Each CSV file contains the following columns:

- Frame: the frame number of the image.
- Horizontal_Displacement: the horizontal motion of key feature points in that frame.
- Vertical_Displacement: the vertical motion of key feature points in that frame.
- Label: a fall indicator, where 1 denotes a fall event and 0 denotes a non-fall event.

To standardize the training data, each fall video segment has been preprocessed to a uniform duration of 10 seconds (or at least 5 seconds for shorter clips), so that the full fall event is captured and extraneous motion is removed. This makes the segments more consistent and more useful for training. The dataset is suitable for training machine learning models for fall detection, direction analysis, and behavior recognition, and provides a basis for modeling visual motion patterns in fall events.

Instructions:

This dataset is derived from the RUG-EGO-FALL dataset and has been processed for feature extraction to support fall detection research. The extracted features are stored in CSV format for easy access and analysis.
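As a starting point, one of the CSV files could be read with Python's standard library as sketched below. The column names come from the dataset description. The `FrameRecord` class, the helper names, and the sample rows are made up for illustration, and the sketch assumes a header row and one row per frame.

```python
import csv
import io
import math
from dataclasses import dataclass


@dataclass
class FrameRecord:
    frame: int
    dx: float   # Horizontal_Displacement
    dy: float   # Vertical_Displacement
    label: int  # 1 = fall, 0 = non-fall


def read_records(handle):
    """Parse an open CSV file (or any text stream) into FrameRecord objects."""
    reader = csv.DictReader(handle)
    return [
        FrameRecord(
            frame=int(float(row["Frame"])),
            dx=float(row["Horizontal_Displacement"]),
            dy=float(row["Vertical_Displacement"]),
            label=int(float(row["Label"])),
        )
        for row in reader
    ]


def summarize(records):
    """Return simple per-file statistics for a quick sanity check."""
    magnitudes = [math.hypot(r.dx, r.dy) for r in records]
    return {
        "frames": len(records),
        "fall_frames": sum(r.label for r in records),
        "peak_magnitude": max(magnitudes, default=0.0),
    }


# Made-up sample rows, only to show the expected layout.
SAMPLE = """Frame,Horizontal_Displacement,Vertical_Displacement,Label
0,0.0,0.0,0
1,3.0,-4.0,1
"""

if __name__ == "__main__":
    print(summarize(read_records(io.StringIO(SAMPLE))))
```

For a real file, `read_records(open(path, newline=""))` would replace the in-memory sample, where `path` points to a CSV extracted from the ZIP archive.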