NJU Auditory Attention Decoding Dataset

- Submitted by: Yuanming Zhang

- Last updated: Sat, 08/12/2023 - 09:39

- DOI: 10.21227/31nb-0j75

Abstract

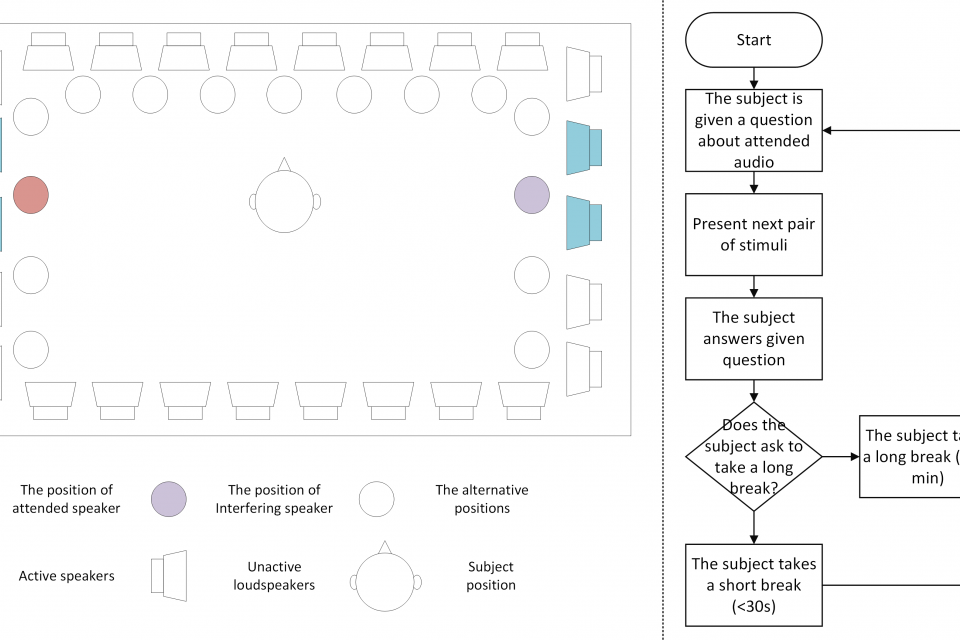

This auditory attention decoding dataset contains EEG recordings from 21 subjects who were instructed to attend to one of two competing speakers located at two different positions.

Unlike previous datasets (such as the KUL dataset), this dataset draws the locations of the two speakers randomly from fifteen candidate directions.

All subjects gave formal written consent, approved by the Nanjing University ethics committee, before the experiment and received financial compensation upon completion.

The EEG data were recorded with the 32-channel EMOTIV Epoc Flex Saline system at a sampling rate of 128 Hz (downsampled from 1024 Hz) in a low-reverberation listening room.

Each subject completed 32 trials. In each trial, the subject was presented with a pair of randomly selected stimuli whose directions were randomly drawn from the 15 possible competing-speaker directions: ±135°, ±120°, ±90°, ±60°, ±45°, ±30°, ±15°, and 0°.
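As a quick check of the direction set listed above, the sketch below builds the 15 azimuths from the "±" notation and draws one pair of distinct directions for a trial. It assumes uniform sampling without replacement, which the description does not state explicitly; `draw_speaker_pair` is a hypothetical helper, not part of the dataset's scripts.

```python
import random

# Build the 15 candidate directions (degrees) from the "±" list plus 0°.
MAGNITUDES = (135, 120, 90, 60, 45, 30, 15)
AZIMUTHS = sorted({sign * m for m in MAGNITUDES for sign in (1, -1)} | {0})


def draw_speaker_pair(rng: random.Random) -> tuple[int, int]:
    """Draw two distinct directions for one trial.

    Hypothetical illustration: assumes each trial samples two different
    azimuths uniformly, without replacement.
    """
    first, second = rng.sample(AZIMUTHS, 2)
    return first, second


if __name__ == "__main__":
    print(len(AZIMUTHS), AZIMUTHS)
    print(draw_speaker_pair(random.Random(0)))
```

Running the script prints 15 followed by the sorted list of azimuths, which confirms that the seven "±" pairs plus 0° give fifteen alternatives.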

This dataset provides the preprocessed EEG recordings and the aligned audio stimuli, as well as the attention information. All data are provided as zipped files.

Please contact the author at yuanming.zhang@smail.nju.edu.cn for additional information.

Note that 28 subjects participated in our experiments; the data of seven subjects were excluded from further analysis due to device failure.

The dataset includes EEG signals and audio stimuli.

The EEG signals were preprocessed in EEGLAB and sliced into trials.

Each subject's data are stored in a separate .mat file, which includes the EEG signals, the attention information, and the competing speakers' information for each trial.

Note that because we manually removed some abnormal segments from the original EEG recordings, in addition to applying ICA and filtering, the EEG duration varies slightly from trial to trial.
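Because trial lengths differ, code that batches trials usually either measures each trial or cuts it into fixed-length windows. The sketch below assumes each EEG trial is a NumPy array shaped (samples, channels) and sampled at 128 Hz; the orientation is an assumption, so transpose first if your arrays are (channels, samples). `trial_durations` and `segment_trial` are hypothetical helpers.

```python
import numpy as np

FS_EEG = 128  # EEG sampling rate in Hz, as stated in the description


def trial_durations(eeg_trials, fs=FS_EEG):
    """Return each trial's duration in seconds.

    Assumes every trial is shaped (samples, channels).
    """
    return [trial.shape[0] / fs for trial in eeg_trials]


def segment_trial(trial, win_s=1.0, fs=FS_EEG):
    """Cut one trial into non-overlapping windows of win_s seconds.

    Returns an array shaped (n_windows, window_samples, channels);
    samples left over at the end of the trial are dropped.
    """
    win = int(round(win_s * fs))
    n_windows = trial.shape[0] // win
    usable = trial[: n_windows * win]
    return usable.reshape(n_windows, win, *trial.shape[1:])


if __name__ == "__main__":
    trials = [np.zeros((300, 32)), np.zeros((512, 32))]
    print(trial_durations(trials))
    print(segment_trial(trials[0]).shape)
```

For the two synthetic trials above, the durations are 2.34375 s and 4.0 s, and the first trial yields two 1-second windows of shape (128, 32). Fixed-length windows sidestep the unequal trial lengths entirely, at the cost of discarding a short tail from each trial.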

Once you have loaded the .mat file into MATLAB or Python, you can access the data and experiment information through the "data" and "expinfo" fields.
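For Python, one option is `scipy.io.loadmat`. The sketch below is a minimal example under assumptions that the description does not state: the files are MATLAB v5/v7 files rather than v7.3, and "eeg", "leftWav", and "rightWav" are cell arrays inside the "data" struct with one entry per trial, as listed under "Data storage structure". `loadmat` raises `NotImplementedError` for v7.3 (HDF5-based) files, which would need an HDF5 reader such as h5py instead. Because "expinfo" is described as a table and SciPy does not decode MATLAB table objects, exporting it from MATLAB first (for example with `writetable`) may be simpler. `subject_files`, `load_subject`, and `_cells_to_list` are hypothetical helper names.

```python
from pathlib import Path

import numpy as np
from scipy.io import loadmat


def subject_files(root):
    """List the per-subject Sxx.mat files in a directory, sorted by file name."""
    return sorted(Path(root).glob("S*.mat"))


def _cells_to_list(cell):
    """Convert a MATLAB cell array loaded by SciPy into a list of arrays."""
    if isinstance(cell, np.ndarray) and cell.dtype == object:
        return [np.asarray(item) for item in cell.ravel()]
    # With squeeze_me=True, a 1x1 cell collapses to its only element.
    return [np.asarray(cell)]


def load_subject(path):
    """Return (eeg, left_wav, right_wav, channels) for one subject file.

    Assumes the "data" struct holds per-trial cell arrays and that
    data.dim.chan is a cell or char array of channel names.
    "expinfo" (a MATLAB table) is not decoded by SciPy, so it is not returned.
    """
    mat = loadmat(path, squeeze_me=True, struct_as_record=False)
    data = mat["data"]
    eeg = _cells_to_list(data.eeg)
    left_wav = _cells_to_list(data.leftWav)
    right_wav = _cells_to_list(data.rightWav)
    channels = [str(c) for c in np.atleast_1d(data.dim.chan)]
    return eeg, left_wav, right_wav, channels
```

`struct_as_record=False` makes MATLAB structs load as objects with attribute access (`data.eeg`), and `squeeze_me=True` removes MATLAB's singleton dimensions so each cell becomes a flat list of trials.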

Data storage structure:

Sxx.mat: one file per subject, where xx is the subject number.

-"expinfo" is a table containing attention information.

--"attended_lr" indicates the relative direction of the attended speaker: "left" means the subject was instructed to attend to the speaker on the left side.

--"l_audio" and "r_audio" indicate the file names of the left and right competing speakers' stimuli, respectively. The corresponding audio waveforms can be extracted directly from the "data" field.

--"azimuth" indicates the directions of the two competing speakers. As described in the abstract, these directions are drawn randomly from fifteen alternatives.

-"data" is the field containing audio and EEG information.

--"eeg" stores the EEG signals, one trial per cell.

--"leftWav" and "rightWav" contain the aligned audio stimuli of the left and right competing speakers, respectively. (Note: these are unprocessed audio signals; to play them back through earphones, apply an HRTF filter first.)

--"dim"

---"chan" contains the names of the EEG channels.
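The audio fields combine with "attended_lr" to give the attended and unattended stimulus of each trial. The sketch below assumes "attended_lr" holds the string "left" or "right" (only "left" is described above) and that each waveform is a 1-D NumPy array. The second helper shows one common way to apply an HRTF for earphone playback: convolving the mono signal with a left-ear and a right-ear head-related impulse response (HRIR). The HRIRs are hypothetical inputs that you would take, for the speaker's azimuth, from an HRTF set of your choice; the description does not say which HRTF set, if any, was used in the experiment. `split_attended` and `render_binaural` are hypothetical helpers.

```python
import numpy as np


def split_attended(left_wav, right_wav, attended_lr):
    """Return (attended, unattended) stimuli for one trial.

    Assumes attended_lr is the string "left" or "right" (case-insensitive).
    """
    side = str(attended_lr).strip().lower()
    if side == "left":
        return left_wav, right_wav
    if side == "right":
        return right_wav, left_wav
    raise ValueError(f"unexpected attended_lr value: {attended_lr!r}")


def render_binaural(mono, hrir_left, hrir_right):
    """Convolve a mono signal with a pair of HRIRs for earphone playback.

    hrir_left / hrir_right are hypothetical inputs for the speaker's azimuth,
    sampled at the same rate as the audio. Returns an array shaped
    (len(mono) + len(hrir) - 1, 2) with columns (left ear, right ear).
    """
    mono = np.asarray(mono, dtype=float)
    if len(hrir_left) != len(hrir_right):
        raise ValueError("HRIRs for both ears must have the same length")
    left = np.convolve(mono, hrir_left)    # full convolution, left ear
    right = np.convolve(mono, hrir_right)  # full convolution, right ear
    return np.stack([left, right], axis=1)
```

To recreate a two-speaker scene, one could render each speaker at its own azimuth and sum the two binaural signals after padding them to a common length.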

Dataset Files

- NJUNCA_preprocessed_arte_removed.7z (10.05 GB)

- script.7z (4.36 kB)