Standard Dataset

Knowledge Representation Learning and Graph Attention Networks Dataset

- Citation Author(s):

- Submitted by: QING HE

- Last updated: Thu, 12/15/2022 - 02:42

- DOI: 10.21227/bg42-qk25

- License:

142 Views

Abstract

We propose an improved knowledge representation learning model, Cluster TransD, and a recommendation model, Cluster TransD-GAT, based on knowledge graphs and graph attention networks. Cluster TransD reduces the number of entity projections, associates entity representations with one another, and lowers the computational cost, which makes it better suited to large knowledge graphs. Cluster TransD-GAT captures the attention that different users pay to different relations of items. Extensive comparison and ablation experiments on three real-world datasets show that the proposed model significantly outperforms other state-of-the-art models.
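This page does not give the model equations, so the following is only a minimal sketch of what "reducing the number of entity projections" could look like. It uses the standard TransD projection for equal entity and relation dimensions, e⊥ = e + (e_p · e) r_p, and assumes (this is an assumption, not the authors' stated method) that each entity looks up its projection vector by a cluster id instead of owning one. The names `project`, `score`, `cluster_of`, and `cluster_proj` are hypothetical.

```python
import math


def project(entity_vec, proj_vec, rel_proj_vec):
    """TransD-style projection for equal dimensions:
    e_perp = e + (proj_vec . e) * rel_proj_vec."""
    dot = sum(p * e for p, e in zip(proj_vec, entity_vec))
    return [e + dot * rp for e, rp in zip(entity_vec, rel_proj_vec)]


def score(h_id, t_id, rel_vec, rel_proj_vec, entity_vecs, cluster_of, cluster_proj):
    """L2 distance ||h_perp + r - t_perp||; lower means more plausible.

    ASSUMPTION: projection vectors are stored per cluster, so all entities
    in one cluster share a single projection vector.
    """
    h_perp = project(entity_vecs[h_id], cluster_proj[cluster_of[h_id]], rel_proj_vec)
    t_perp = project(entity_vecs[t_id], cluster_proj[cluster_of[t_id]], rel_proj_vec)
    return math.sqrt(
        sum((h + r - t) ** 2 for h, r, t in zip(h_perp, rel_vec, t_perp))
    )


# Toy data (made up for illustration): two entities sharing one cluster.
entity_vecs = {"course_a": [1.0, 0.0], "course_b": [0.0, 1.0]}
cluster_of = {"course_a": 0, "course_b": 0}
cluster_proj = {0: [0.0, 0.0]}  # zero vector makes the projection an identity

toy_score = score(
    "course_a", "course_b",
    rel_vec=[-1.0, 1.0], rel_proj_vec=[0.5, 0.5],
    entity_vecs=entity_vecs, cluster_of=cluster_of, cluster_proj=cluster_proj,
)
```

Under this assumption the number of stored projection vectors grows with the number of clusters and not with the number of entities, which is one plausible reading of the reduced computational cost described above.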

Instructions:

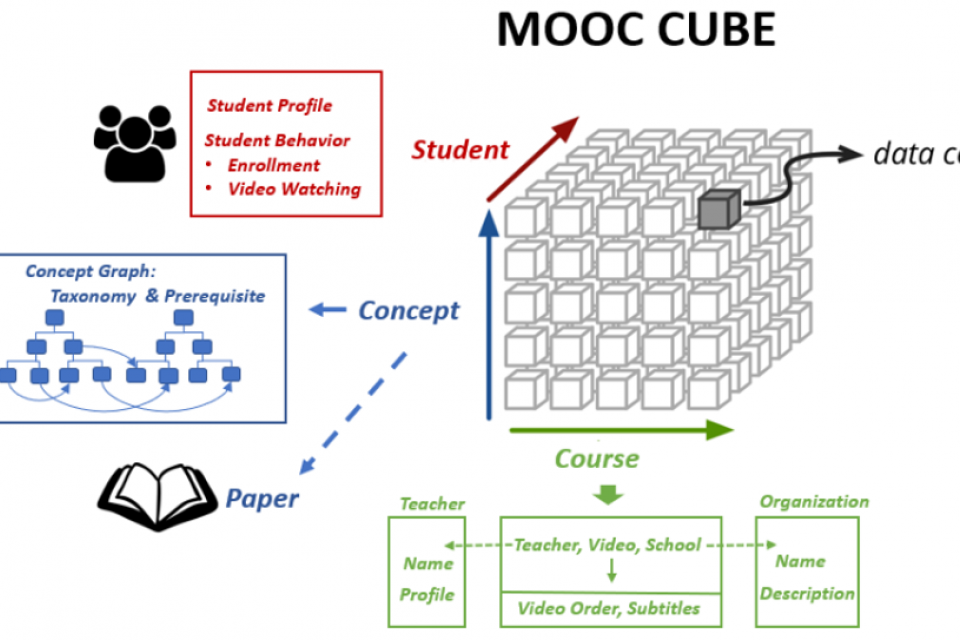

MoocCube

Documentation

| Attachment | Size |
|---|---|
| | 18.51 KB |
