EMG-EEG dataset for Upper-Limb Gesture Classification

- Citation Author(s):

- Submitted by: Boreom Lee

- Last updated:

- DOI: 10.21227/5ztn-4k41

- Data Format:

2876 views

- Categories:

- Keywords:

Abstract

Electromyography (EMG) has limitations in human-machine interfaces because of disturbances such as electrode shift, fatigue, and subject variability. A potential way to prevent model degradation is to combine multimodal data such as EMG and electroencephalography (EEG). This study presents an EMG-EEG dataset intended to support the development of upper-limb assistive rehabilitation devices. The dataset was acquired from thirty-three volunteers without neuromuscular dysfunction or disease using commercial biosensors, so the acquisition setup is easily replicable and deployable. It covers seven distinct gestures, chosen to maximize performance on the Toronto Rehabilitation Institute hand function test and the Jebsen-Taylor hand function test. The authors hope this dataset will benefit the research community in creating intelligent, neuro-inspired upper-limb assistive rehabilitation devices.

Instructions:

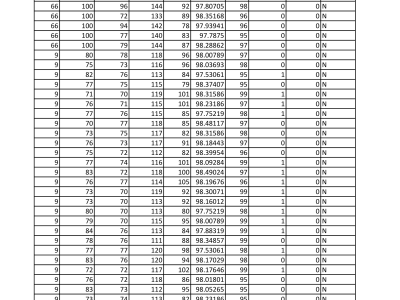

The electromyography (EMG) data were acquired with the now-discontinued Myo armband, which provides 8 channels at a 200 Hz sampling frequency. The EMG data, recorded from the upper limb at maximum voluntary contraction, are provided raw and unfiltered. The electroencephalography (EEG) data were collected with the OpenBCI Ultracortex Mark IV, which provides 8 channels at a 250 Hz sampling frequency.

The dataset is available in both .CSV and .MAT formats, with each subject's data in a single directory. The file-naming convention supports a supervised machine learning approach: each file name designates the subject as S{}, the repetition as R{}, and the gesture as G{}. The dataset contains seven gestures with six repetitions per gesture. The gestures are numbered as follows:

- G1: large diameter grasp
- G2: medium diameter grasp
- G3: three-finger sphere grasp
- G4: prismatic pinch grasp
- G5: power grasp
- G6: cut grasp
- G7: open hand

A detailed description of the dataset, including starter code, can be found here: https://github.com/HumanMachineInterface/Gest-Infer
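The file-naming convention described above can be turned into supervised labels with a few lines of Python. The following is a minimal sketch, not the authors' starter code: the function name `parse_recording_name` and the sample file name `S3_R2_G5.csv` are hypothetical, and the exact separators and ordering of the S{}, R{}, and G{} tokens in the real files are an assumption, so the parser only looks for each letter followed by digits.

```python
import re
from pathlib import Path

# Gesture numbering as given in the dataset description.
GESTURES = {
    1: "large diameter grasp",
    2: "medium diameter grasp",
    3: "three-finger sphere grasp",
    4: "prismatic pinch grasp",
    5: "power grasp",
    6: "cut grasp",
    7: "open hand",
}

# Assumption: each token is S, R, or G followed by digits and is not
# preceded by another letter (so the "G" in "EMG1" is not a match).
_TOKEN = re.compile(r"(?<![A-Za-z])([SRG])(\d+)", re.IGNORECASE)


def parse_recording_name(path):
    """Extract subject, repetition, and gesture labels from a file name."""
    stem = Path(path).stem
    found = {key.upper(): int(num) for key, num in _TOKEN.findall(stem)}
    missing = [key for key in "SRG" if key not in found]
    if missing:
        raise ValueError(f"{path!r} lacks token(s): {', '.join(missing)}")
    if found["G"] not in GESTURES:
        raise ValueError(f"{path!r} has unknown gesture G{found['G']}")
    return {
        "subject": found["S"],
        "repetition": found["R"],
        "gesture": found["G"],
        "gesture_name": GESTURES[found["G"]],
    }


if __name__ == "__main__":
    # Hypothetical file name, used only to illustrate the convention.
    print(parse_recording_name("S3_R2_G5.csv"))
```

The `gesture` value can serve directly as the class label, while `subject` and `repetition` are useful for building subject-wise or repetition-wise train/test splits. If the actual file names follow a different pattern, only the regular expression should need adjusting.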

Dear Sir,

I would like to access the above dataset for an undergraduate project at the University of Peradeniya.

Thank you very much in advance.

Best regards

Ruwan

Subject: Cue Timing Inquiry - EMG-EEG Gesture Dataset

Dear Dr. Lee,

I'm Salsabil Jaballah, a student intern at TU Chemnitz. I plan to use your EMG-EEG dataset for my project on predicting hand gesture intentions (before execution).

My project focuses on intention prediction. Specifically, I am investigating the feasibility of predicting hand gestures milliseconds before they are physically executed by analyzing neural patterns and subtle muscle activations within the EEG and EMG signals.

My question is regarding the timing of the "cue" presented to the subjects during data acquisition. Was the precise timestamp or timepoint of the cue presentation recorded alongside the EEG and EMG data? Knowing the exact cue time is important for accurately isolating the pre-movement neural activity and training a predictive model for gesture intention.

Thank you!

Best regards,

Salsabil Jaballah