Accuracy, latency and overhead test in Federated Deep Reinforcement Learning, L2FPPO in Focus

- Citation Author(s):

- Submitted by: Kofi Kwarteng Abrokwa

- Last updated:

- DOI: 10.21227/5g3r-3n31

- Data Format:

11 views

- Categories:

- Keywords:

Abstract

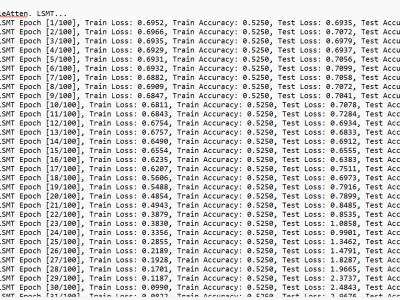

With the advent of the 6G Open-RAN architecture, multiple operational services can run simultaneously in the RAN, leveraging the near-Real-Time RAN Intelligent Controller (near-RT-RIC) and real-time (RT) nodes. The architecture provides an ideal platform for Federated Learning (FL): the xApp is hosted in the near-RT-RIC to perform global aggregation, whereas the Open Radio Unit (ORU) allocates power to users in real time so that they can participate in FL. This paper identifies power and latency optimization as critical factors for enhancing FL in a stochastic environment. Because the multi-objective constraints make the problem complex, we formulate it as a mixed-integer nonlinear programming problem and then decompose it into modular subproblems that are solved individually. We employ dual Lagrange decomposition to derive optimal power allocation solutions, thereby improving user association with the ORU during FL. We introduce the Lyapunov Drift Plus Penalty framework to address latency issues during FL. Lastly, Proximal Policy Optimization (PPO) is used to determine the users' weights to upload to the ORU. Empirical results demonstrate that our approach achieves a 6% improvement in accuracy, a 0.5% reduction in overhead, a 0.6% reduction in energy consumption, and a 0.4% reduction in latency compared to state-of-the-art methods.

Instructions:

This dataset was generated by applying Dual Lagrange Decomposition (DLD) to improve user-to-ORU connectivity during federation rounds. DLD calculates the required power for each user, while the Lyapunov Drift Plus Penalty (LDPP) simultaneously adjusts the computational and transmission resources of the ORU. These algorithms perform effectively regardless of hyperparameter choices such as the step size of the Lagrangian dual update or the tuning step of the Lyapunov penalty.
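To make the two building blocks above concrete, here is a minimal sketch of each on a toy problem. It is not the dataset's actual code: the objective `sum(log(1 + g * p))`, the energy model `workload * f**2`, and all function and parameter names (`allocate_power`, `drift_plus_penalty`, `gains`, `freqs`, `budget`, `v`) are assumptions chosen for illustration. The first function uses dual decomposition with a projected subgradient update on the multiplier; the second uses a virtual queue to trade energy against a latency budget in the drift-plus-penalty style.

```python
def allocate_power(gains, p_total, step=0.05, iters=2000, lam=1.0):
    """Toy dual decomposition (assumed problem, not the paper's):
    maximize sum(log(1 + g_i * p_i)) subject to sum(p_i) <= p_total, p_i >= 0.
    """
    powers = [0.0] * len(gains)
    for _ in range(iters):
        # For a fixed multiplier lam, each user's subproblem decouples and
        # has a closed-form (water-filling) solution.
        powers = [max(0.0, 1.0 / lam - 1.0 / g) for g in gains]
        # Projected subgradient step on the dual variable: raise the "price"
        # of power when the budget is exceeded, lower it otherwise.
        lam = max(1e-9, lam + step * (sum(powers) - p_total))
    return powers, lam


def drift_plus_penalty(freqs, workload, budget, v, slots):
    """Toy Lyapunov drift-plus-penalty loop (assumed models):
    latency = workload / f, energy = workload * f**2.
    Returns a list of (frequency, latency, queue) tuples, one per slot.
    """
    q = 0.0  # virtual queue tracking accumulated latency-budget violation
    history = []
    for _ in range(slots):
        # Pick the frequency minimizing V * penalty + Q * constraint term.
        f = min(freqs, key=lambda x: v * workload * x ** 2 + q * workload / x)
        latency = workload / f
        # Queue grows when the slot's latency exceeds the budget.
        q = max(q + latency - budget, 0.0)
        history.append((f, latency, q))
    return history
```

In this sketch, `step` plays the role of the Lagrangian dual step size and `v` the Lyapunov penalty weight. A larger `v` favors low energy at the cost of a longer virtual queue, while a smaller `v` tracks the latency budget more tightly.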

Notably, this approach is integrated into the PPO code to facilitate communication between the server and users during federation rounds. Depending on the environment, the PPO learning rate and the Adam optimizer settings can be tuned to achieve better performance. In our simulations, we use a learning rate of 0.01.
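For reference, the sketch below shows the standard PPO clipped surrogate for a single sample and a scalar Adam update using the learning rate of 0.01 mentioned above. Only the learning rate comes from this description. The clip range of 0.2 and the Adam coefficients (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are commonly used defaults assumed here, and the function names are hypothetical rather than taken from the dataset's code.

```python
import math


def ppo_clip_objective(ratio, advantage, clip_eps=0.2):
    """Per-sample PPO clipped surrogate: min(r * A, clip(r, 1-eps, 1+eps) * A)."""
    clipped = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    return min(ratio * advantage, clipped * advantage)


def new_adam_state():
    """Fresh optimizer state for one scalar parameter."""
    return {"t": 0, "m": 0.0, "v": 0.0}


def adam_step(param, grad, state, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam descent step on a scalar parameter; returns the new value."""
    state["t"] += 1
    # Exponential moving averages of the gradient and its square.
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad * grad
    # Bias correction compensates for the zero initialization of m and v.
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    return param - lr * m_hat / (math.sqrt(v_hat) + eps)
```

Because PPO maximizes the surrogate, a training loop would pass the gradient of the negated objective to `adam_step`. With bias correction, the first Adam step moves the parameter by roughly `lr` regardless of gradient scale, which is one reason the learning rate is worth tuning per environment.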