Standard Dataset

AoI Minimization in Energy Harvesting and Spectrum Sharing Enabled 6G Networks

- Citation Author(s):

- Submitted by: Amir Hossein Zarif

- Last updated: Thu, 05/20/2021 - 02:10

- DOI: 10.21227/aynt-my59

- License:

529 Views

Abstract

Simulation code for the paper: "AoI Minimization in Energy Harvesting and Spectrum Sharing Enabled 6G Networks"

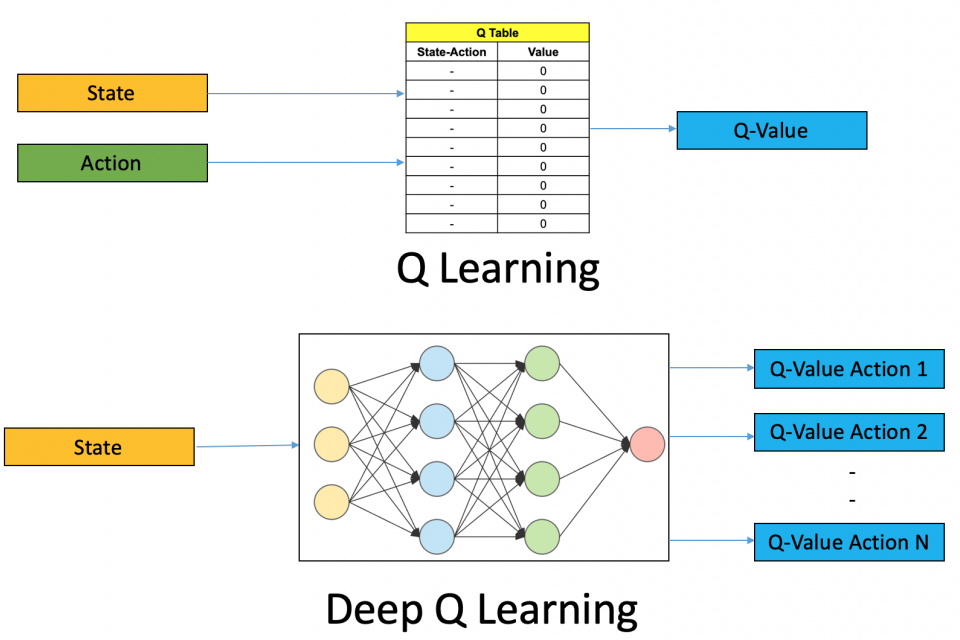

We use a neural network to approximate the Q-value function. The state is given as the input, and the network outputs the Q-values of all possible actions.
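A minimal sketch of such a Q-network in PyTorch, assuming a small fully connected architecture. The class name `QNetwork`, the helper `greedy_action`, the layer widths, and the state and action dimensions are illustrative assumptions, not values taken from the published simulation code.

```python
import torch
import torch.nn as nn


class QNetwork(nn.Module):
    """Maps a state vector to one Q-value per discrete action (illustrative)."""

    def __init__(self, state_dim: int, num_actions: int, hidden_dim: int = 64):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(state_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, hidden_dim),
            nn.ReLU(),
            nn.Linear(hidden_dim, num_actions),  # one output per action
        )

    def forward(self, state: torch.Tensor) -> torch.Tensor:
        # Input shape: (batch, state_dim); output shape: (batch, num_actions).
        return self.layers(state)


def greedy_action(q_net: QNetwork, state: torch.Tensor) -> int:
    """Return the index of the action with the largest Q-value for one state."""
    with torch.no_grad():
        q_values = q_net(state.unsqueeze(0))  # add a batch dimension
    return int(q_values.argmax(dim=1).item())


# Hypothetical sizes, chosen only to make the example runnable.
STATE_DIM = 4
NUM_ACTIONS = 3

q_net = QNetwork(STATE_DIM, NUM_ACTIONS)
example_state = torch.zeros(STATE_DIM)
q_values = q_net(example_state.unsqueeze(0))
action = greedy_action(q_net, example_state)
```

A single forward pass returns the Q-values of every action at once, so picking the greedy action takes one `argmax` rather than one network evaluation per action.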

Prerequisites:

Python 3.7 or higher

PyTorch 1.7 or higher + CUDA

It is recommended that the latest drivers be installed for the GPU.
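The prerequisites above can be checked before running the simulation. The following is a small sketch; the helper names `parse_version` and `check_environment` are hypothetical and are not part of the published code.

```python
import importlib
import sys


def parse_version(version: str) -> tuple:
    """Turn a version string such as '1.7.1+cu110' into (major, minor)."""
    core = version.split("+")[0]
    parts = []
    for piece in core.split(".")[:2]:
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at the first non-digit, e.g. 'rc1' suffixes
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def check_environment() -> dict:
    """Report whether Python, PyTorch, and CUDA meet the stated prerequisites."""
    report = {
        "python_ok": sys.version_info >= (3, 7),
        "torch_ok": False,
        "cuda_available": False,
    }
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return report  # PyTorch is not installed
    report["torch_ok"] = parse_version(torch.__version__) >= (1, 7)
    report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    print(check_environment())
```

If `cuda_available` is reported as `False` on a machine with a GPU, an outdated driver is one possible cause.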

---------------------------------------------------------------------------------------

In order to run the code:

1- Install all the necessary prerequisites.

2- Set the powers, battery capacity, and distance to the values you want to simulate.

3- Once you run the code, the simulation results are saved to your directory.

You can import these data into any tool (MATLAB, Python, etc.) and plot the results.

4- Figs. 3 and 4 can be obtained directly from the reward plot. To plot the other figures, the results should be averaged over the agents.

5- Figs. 5, 6, and 7 are plotted as follows:

Run the DQN network and average the results in AgeInformation.txt for each battery capacity to produce the first plot.

Run the DQN network and average the results in AgeInformation.txt for each primary-user power to produce the second plot.

Run the DQN network and average the results in RateSU.txt for each primary-user power to produce the third plot.
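The averaging in steps 4 and 5 can be sketched as follows. The layout of the output files is not documented here, so this example assumes that each file holds whitespace- or comma-separated numbers with one row per agent, and that each swept setting (for example, one battery capacity) was saved to its own file. The function names and this file layout are assumptions and may need adjusting to the actual output.

```python
from pathlib import Path
from statistics import mean


def load_rows(path):
    """Read a text file of whitespace- or comma-separated numbers into rows of floats."""
    rows = []
    for line in Path(path).read_text().splitlines():
        tokens = line.replace(",", " ").split()
        if tokens:  # skip blank lines
            rows.append([float(tok) for tok in tokens])
    return rows


def average_over_agents(rows):
    """Average column-wise, assuming each row holds one agent's values.

    If rows differ in length, zip() truncates to the shortest row.
    """
    return [mean(column) for column in zip(*rows)]


def sweep_average(files_by_setting):
    """Map each swept setting to one value: the mean of its agent-averaged results.

    files_by_setting: dict such as {battery_capacity: path_to_results_file}.
    """
    summary = {}
    for setting, path in sorted(files_by_setting.items()):
        per_step = average_over_agents(load_rows(path))
        summary[setting] = mean(per_step)
    return summary
```

For example, calling `sweep_average` on a dictionary that maps each battery capacity to the corresponding copy of AgeInformation.txt would yield the points of the first plot under these assumptions. The same call with primary-user powers as keys, applied to AgeInformation.txt or RateSU.txt, would cover the second and third plots.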

Documentation

| Attachment | Size |
|---|---|
| | 1.54 KB |

Comments

where is your dueling DQN module?