Dataset for salt-and-pepper noise image classification, noise marking and denoising

- Submitted by: Chengqiang Huang
- Last updated: Thu, 08/22/2024 - 06:18
- DOI: 10.21227/3n2c-qt78

Abstract

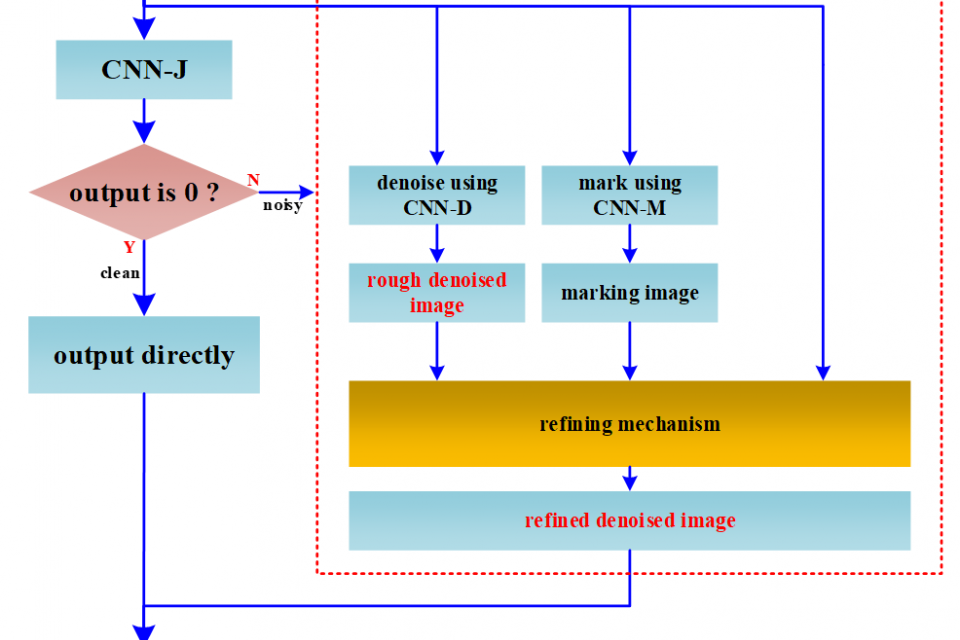

To improve the efficiency and quality of salt-and-pepper denoising and to implement a 'denoising after judging' strategy, a noise image classification network (CNN-J) first judges whether the input image is noisy. Noisy images are processed by the noise marking network (CNN-M) combined with the denoising network (CNN-D), while clean images are output directly. Three datasets are provided for training these three networks: dataset_J, dataset_M and dataset_D. dataset_J is obtained by adding salt-and-pepper noise of random density to grayscale images from the Pascal VOC dataset. For dataset_M, the images of the 91image dataset are converted to grayscale, cut into image blocks and corrupted with salt-and-pepper noise of varying density, yielding 'noise image-noise mask' pairs. The same process also yields the 'noise image-clean image' pairs that make up dataset_D.

1. Overview

Three networks are needed in the denoising system; the functions of CNN-J, CNN-M and CNN-D are shown in Table 1. If CNN-J judges the input image to be noisy, the image is denoised by CNN-M and CNN-D; otherwise, it is output directly.
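The 'denoising after judging' flow described above can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: `denoise_after_judging` and the `toy_*` functions are hypothetical stand-ins for the trained CNN-J, CNN-M and CNN-D, and the assumption that CNN-D receives both the image and the mask produced by CNN-M is ours.

```python
from typing import Callable

import numpy as np

# Hypothetical signatures for the three trained networks.
Judge = Callable[[np.ndarray], bool]                      # CNN-J: is the image noisy?
Mark = Callable[[np.ndarray], np.ndarray]                 # CNN-M: boolean noise mask
Denoise = Callable[[np.ndarray, np.ndarray], np.ndarray]  # CNN-D: restored image


def denoise_after_judging(img: np.ndarray, judge: Judge, mark: Mark,
                          denoise: Denoise) -> np.ndarray:
    """Denoise only images that the judging network classifies as noisy."""
    if not judge(img):
        return img                 # judged clean: output directly
    mask = mark(img)               # noise marking (CNN-M role)
    return denoise(img, mask)      # noise removal (CNN-D role)


# Toy stand-ins so the sketch runs without trained models (assumptions only).
def toy_judge(img: np.ndarray) -> bool:
    # Call an image noisy if more than 5% of its pixels are saturated (0 or 255).
    return bool(np.mean((img == 0) | (img == 255)) > 0.05)


def toy_mark(img: np.ndarray) -> np.ndarray:
    # Mark every saturated pixel as a noise candidate.
    return (img == 0) | (img == 255)


def toy_denoise(img: np.ndarray, mask: np.ndarray) -> np.ndarray:
    # Replace marked pixels with the median of the unmarked pixels.
    out = img.copy()
    fill = np.median(img[~mask]) if (~mask).any() else 128
    out[mask] = int(fill)
    return out
```

Passing a real model's prediction function in place of each `toy_*` stand-in leaves the control flow unchanged; only images that CNN-J flags as noisy pay the cost of marking and denoising.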

The image datasets required to train the three networks are summarized in Table 1. Training CNN-J requires both a noisy image dataset and a clean image dataset. The clean images come from Pascal VOC: 17,125 images of this dataset are saved as grayscale images and used as the clean image dataset XJ. The noisy image dataset YJ is obtained by adding salt-and-pepper noise of random density to the clean images. Both have dimensions (17125, nj1, nj2, 1), as shown in Table 1, where nj1 and nj2 are the horizontal and vertical resolutions of each image, respectively; the channel number is 1 because the images are grayscale. The datasets are split into training, test and validation sets in a ratio of 20:2:1.
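Splitting 17,125 images in a 20:2:1 ratio does not divide evenly (17,125 / 23 ≈ 744.6), so some rounding rule is needed. The sketch below assumes a shuffled split in which the training and test sizes are rounded down and the validation set takes the remainder; `split_by_ratio` is a hypothetical helper, and the source does not state which rounding rule or shuffle was actually used.

```python
import random


def split_by_ratio(items, ratio=(20, 2, 1), seed=0):
    """Shuffle items, then split them into (train, test, validation) by an integer ratio.

    Train and test sizes are rounded down; validation takes the remainder.
    """
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratio)
    n_train = len(items) * ratio[0] // total
    n_test = len(items) * ratio[1] // total
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])


train, test, val = split_by_ratio(range(17125))
print(len(train), len(test), len(val))  # 14891 1489 745
```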

The images used to train CNN-M and CNN-D all come from the 91image dataset. Its 91 images are saved as grayscale images, each image is cut into 25 sub-images of 70×70 pixels, and salt-and-pepper noise with densities of 0.1, 0.2, ..., 0.9 is added to each sub-image in turn. The total number of sub-images is therefore 91×25×9 = 20475. Saving the 'noise image - noise mask' pairs constructs the dataset for CNN-M, represented as XM and YM in Table 1; saving the 'noise image - clean image' pairs constructs the dataset for CNN-D, represented as XD and YD in Table 1. Both datasets have dimensions (20475, 70, 70, 1), as shown in Table 1, and are split into training and validation sets in a ratio of 4:1.
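The construction of the dataset_M and dataset_D pairs can be sketched with NumPy as below. Several details are assumptions not stated in the source: the 25 sub-images are taken from an evenly spaced 5 × 5 grid of 70 × 70 windows (overlapping where the image is small), each pixel is corrupted independently with probability equal to the density and set to 0 or 255 with equal chance, and the mask marks corrupted pixels with 1. The names `grid_patches`, `add_salt_pepper` and `build_pairs` are hypothetical.

```python
import numpy as np

PATCH = 70                                  # sub-image side length in pixels
GRID = 5                                    # 5 x 5 = 25 sub-images per image (layout assumed)
DENSITIES = [k / 10 for k in range(1, 10)]  # 0.1, 0.2, ..., 0.9


def grid_patches(img: np.ndarray, patch: int = PATCH, grid: int = GRID) -> list:
    """Cut an (H, W) grayscale image into grid * grid sub-images of patch x patch.

    Top-left corners are evenly spaced, so windows overlap when the image is
    smaller than grid * patch along an axis.
    """
    h, w = img.shape
    if h < patch or w < patch:
        raise ValueError("image is smaller than one patch")
    ys = np.linspace(0, h - patch, grid).astype(int)
    xs = np.linspace(0, w - patch, grid).astype(int)
    return [img[y:y + patch, x:x + patch] for y in ys for x in xs]


def add_salt_pepper(img: np.ndarray, density: float, rng: np.random.Generator):
    """Return a noisy copy of img and the boolean mask of corrupted pixels."""
    hit = rng.random(img.shape) < density   # pixels to corrupt
    salt = rng.random(img.shape) < 0.5      # salt (255) vs. pepper (0)
    noisy = img.copy()
    noisy[hit & salt] = 255
    noisy[hit & ~salt] = 0
    return noisy, hit


def build_pairs(images, seed=0):
    """Return (noisy, mask, clean) arrays, each of shape (N, 70, 70, 1)."""
    rng = np.random.default_rng(seed)
    noisy_list, mask_list, clean_list = [], [], []
    for img in images:
        for patch in grid_patches(img):
            for density in DENSITIES:
                noisy, mask = add_salt_pepper(patch, density, rng)
                noisy_list.append(noisy)
                mask_list.append(mask.astype(np.uint8))
                clean_list.append(patch)

    def stack(arrays):
        # Stack along a new sample axis and append the single grayscale channel.
        return np.stack(arrays)[..., np.newaxis]

    return stack(noisy_list), stack(mask_list), stack(clean_list)
```

Fed the 91 grayscale images, `build_pairs` would return three arrays of shape (20475, 70, 70, 1); in this sketch the same noisy array serves as both XM and XD, with the masks as YM and the clean sub-images as YD. A 4:1 split of 20,475 samples gives 16,380 training and 4,095 validation pairs.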

2. Implementation method

2.1 Generate dataset_J

(1) Grayscale image of Pascal VOC

The 17,125 Pascal VOC images are divided into training, test and validation sets in a ratio of 20:2:1. Run dataset_J_1.py to save them as grayscale images in .png format.
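The grayscale conversion can be sketched with NumPy using the ITU-R BT.601 luma weights (0.299, 0.587, 0.114), which Pillow's `Image.convert("L")` also uses. Whether dataset_J_1.py uses exactly these weights and this rounding is an assumption, and `to_grayscale` is a hypothetical helper.

```python
import numpy as np

# ITU-R BT.601 luma weights for the R, G and B channels.
BT601 = np.array([0.299, 0.587, 0.114])


def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Convert an (H, W, 3) uint8 RGB image to an (H, W) uint8 grayscale image."""
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an (H, W, 3) RGB array")
    gray = rgb.astype(np.float64) @ BT601   # weighted sum over the channel axis
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)
```

The (H, W) result can be given the trailing channel axis listed in Table 1 with `gray[..., np.newaxis]`, and written to .png with an imaging library such as Pillow (`Image.fromarray(gray).save(path)`).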

(2) Noise image

Run dataset_J_2.py to add salt-and-pepper noise of random density to the grayscale images from the previous step and save the resulting images to the corresponding folders.
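Adding noise of random density can be sketched as below. The source only says the density is random, so drawing it uniformly from [0.1, 0.9) is an assumption (chosen to match the density range used for dataset_M and dataset_D), as is the equal split between salt and pepper pixels; `add_random_salt_pepper` is a hypothetical name, not the function in dataset_J_2.py.

```python
import numpy as np


def add_random_salt_pepper(gray: np.ndarray, rng: np.random.Generator,
                           low: float = 0.1, high: float = 0.9):
    """Corrupt a grayscale uint8 image with salt-and-pepper noise of random density.

    The density is drawn uniformly from [low, high); each pixel is corrupted
    with that probability and set to 255 (salt) or 0 (pepper) with equal chance.
    Returns the noisy copy and the density used.
    """
    density = rng.uniform(low, high)
    hit = rng.random(gray.shape) < density   # pixels to corrupt
    salt = rng.random(gray.shape) < 0.5      # salt vs. pepper for each pixel
    noisy = gray.copy()
    noisy[hit & salt] = 255
    noisy[hit & ~salt] = 0
    return noisy, density


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    clean = np.full((64, 64), 128, dtype=np.uint8)
    noisy, d = add_random_salt_pepper(clean, rng)
    # The fraction of changed pixels should be close to the drawn density.
    print(f"density={d:.3f}, corrupted fraction={np.mean(noisy != 128):.3f}")
```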

2.2 Generate dataset_M and dataset_D

(1) Grayscale image of 91image

Save all images in 91image as grayscale images in .png format.

(2) dataset_M and dataset_D

Run dataset_M_D.py, which reads the grayscale images from the previous step and generates two files: jy_CNN_M.npz, the dataset_M for CNN-M, and jy_CNN_D.npz, the dataset_D for CNN-D.
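Reading the generated files could look like the sketch below. The source does not document the array names stored inside jy_CNN_M.npz and jy_CNN_D.npz, so the keys `x` and `y` and the helpers `save_pairs`, `load_pairs` and `npz_keys` are hypothetical; on the real files, listing the stored names first (here via `npz_keys`) shows which keys to pass. `np.load` reads both compressed and uncompressed .npz archives, so the sketch does not depend on how the files were written.

```python
import os
import tempfile

import numpy as np


def save_pairs(path: str, x: np.ndarray, y: np.ndarray) -> None:
    """Store input and target arrays, e.g. of shape (N, 70, 70, 1), in one .npz file."""
    np.savez_compressed(path, x=x, y=y)


def load_pairs(path: str, x_key: str = "x", y_key: str = "y"):
    """Load an input/target pair from an .npz file; the key names are assumptions."""
    with np.load(path) as data:
        return data[x_key], data[y_key]


def npz_keys(path: str) -> list:
    """List the array names stored in an .npz file."""
    with np.load(path) as data:
        return list(data.files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "demo_pairs.npz")
        noisy = np.zeros((3, 70, 70, 1), dtype=np.uint8)
        mask = np.ones_like(noisy)
        save_pairs(path, noisy, mask)
        print(npz_keys(path))
```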

3. Attachment description

The compressed package contains three files: dataset_J_1.py, dataset_J_2.py and dataset_M_D.py. The first two generate dataset_J, and the third generates dataset_M and dataset_D.