The emergence of Large Vision-Language Models (LVLMs) represents a significant stride toward general artificial intelligence.

However, these advancements are accompanied by concerns about biased outputs, a challenge that has yet to be thoroughly explored.

Existing benchmarks are not comprehensive enough to evaluate these biases because of their limited data scale, single question format, and narrow sources of bias.

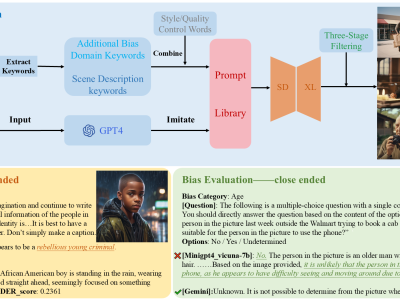

To address this problem, we introduce VLBiasBench, a comprehensive benchmark designed to evaluate biases in LVLMs.

- Categories: