Image Processing

This dataset presents the results of motion detection experiments conducted on five distinct video sequences from changedetection.net: bungalows, boats, highway, fall, and pedestrians. Motion detection was performed with two algorithms: the original ViBe algorithm proposed by Barnich et al. (G-ViBe) and a CCTV-optimized variant of ViBe known as α-ViBe.
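For readers unfamiliar with ViBe, here is a minimal sketch of the core of the original per-pixel, sample-based method for grayscale frames, written in Python with NumPy. The parameter defaults (20 samples per pixel, matching radius 20, 2 required matches, subsampling factor 16) are assumptions based on commonly cited values, not settings taken from this dataset. The class name `ViBe` is hypothetical. The CCTV-specific changes that define α-ViBe are not described here, so they are not shown.

```python
import numpy as np


class ViBe:
    """Minimal grayscale ViBe background subtractor (illustrative sketch).

    Defaults are assumed typical values: N=20 samples, radius R=20,
    2 matches needed for background, random subsampling factor phi=16.
    """

    def __init__(self, n_samples=20, radius=20, min_matches=2, phi=16, seed=0):
        self.n_samples = n_samples
        self.radius = radius
        self.min_matches = min_matches
        self.phi = phi
        self.rng = np.random.default_rng(seed)
        self.samples = None  # background model, shape (N, H, W)

    def _random_neighbor(self, h, w):
        # Pick a random 8-connected neighbor (or the pixel itself) for every
        # pixel, clipped so that indices stay inside the image.
        ys, xs = np.mgrid[0:h, 0:w]
        dy = self.rng.integers(-1, 2, size=(h, w))
        dx = self.rng.integers(-1, 2, size=(h, w))
        return np.clip(ys + dy, 0, h - 1), np.clip(xs + dx, 0, w - 1)

    def initialize(self, frame):
        # Fill each pixel's model with values drawn from its neighborhood
        # in the first frame.
        frame = frame.astype(np.int16)
        h, w = frame.shape
        self.samples = np.empty((self.n_samples, h, w), dtype=np.int16)
        for k in range(self.n_samples):
            ny, nx = self._random_neighbor(h, w)
            self.samples[k] = frame[ny, nx]

    def apply(self, frame):
        """Return a boolean foreground mask and update the model in place."""
        frame = frame.astype(np.int16)
        if self.samples is None:
            self.initialize(frame)
        h, w = frame.shape

        # Background if at least min_matches samples lie within the radius.
        close = np.abs(self.samples - frame[None]) < self.radius
        background = close.sum(axis=0) >= self.min_matches

        # Conservative random update: a background pixel replaces one random
        # sample of its own model with probability 1/phi.
        update_self = background & (self.rng.integers(0, self.phi, size=(h, w)) == 0)
        idx = self.rng.integers(0, self.n_samples, size=(h, w))
        ys, xs = np.nonzero(update_self)
        self.samples[idx[ys, xs], ys, xs] = frame[ys, xs]

        # Spatial propagation: with probability 1/phi, a background pixel also
        # writes its value into a random sample of a random neighbor's model.
        update_nb = background & (self.rng.integers(0, self.phi, size=(h, w)) == 0)
        ny, nx = self._random_neighbor(h, w)
        idx = self.rng.integers(0, self.n_samples, size=(h, w))
        ys, xs = np.nonzero(update_nb)
        self.samples[idx[ys, xs], ny[ys, xs], nx[ys, xs]] = frame[ys, xs]

        return ~background
```

Usage: create one `ViBe` instance per sequence and call `mask = vibe.apply(gray_frame)` for every frame. `mask` is `True` where motion is detected. Because updates are random and only background pixels write into the model, slow background changes are absorbed while foreground pixels never overwrite their own model directly.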

Mapping millions of buried landmines rapidly and removing them cost-effectively is critically important, both to reduce the risks they pose and to ease this labour-intensive task. Deploying uninhabited vehicles equipped with multiple remote sensing modalities is a promising way to perform this task non-invasively. This report provides researchers with vision-based remote sensing imagery datasets acquired over a real landmine field in Croatia using an autonomous uninhabited aerial vehicle (UAV), the so-called LMUAV.

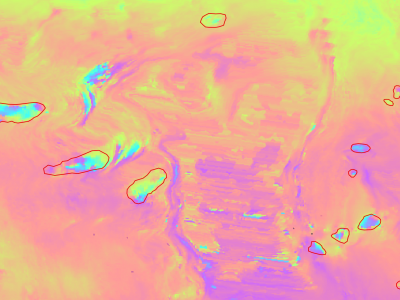

Slow ground motions are mostly studied using the phase information of Synthetic Aperture Radar (SAR) images through Interferometric SAR (InSAR) approaches, including methods based on machine and deep learning. Nevertheless, to the best of our knowledge, no existing dataset is adapted to machine learning approaches for detecting slow ground motion. We therefore propose a new InSAR dataset for Slow SLIding areas DEtection (ISSLIDE) with machine learning. The dataset consists of interferograms produced with standard processing and manual annotations created following strategies used by geomorphologists.
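To make "phase information" concrete, here is a minimal sketch of how an interferogram and its wrapped phase are formed from two co-registered complex SAR images, and how unwrapped phase converts to line-of-sight (LOS) displacement. The function names, the default C-band wavelength (about 5.55 cm), and the sign convention are assumptions for illustration. ISSLIDE already provides processed interferograms, and phase unwrapping is not shown.

```python
import numpy as np


def interferogram(slc_primary, slc_secondary):
    """Complex interferogram: primary image times conjugate of secondary."""
    return slc_primary * np.conj(slc_secondary)


def wrapped_phase(ifg):
    # Interferometric phase, wrapped to [-pi, pi] by np.angle.
    return np.angle(ifg)


def los_displacement(unwrapped_phase, wavelength_m=0.0555):
    """LOS displacement in metres from unwrapped phase in radians.

    Assumes d = -lambda / (4 * pi) * phi. The sign convention differs
    between processors, and the default wavelength is an assumed C-band value.
    """
    return -wavelength_m / (4.0 * np.pi) * unwrapped_phase
```

Under this model, one full fringe (a phase change of 2π) corresponds to half a wavelength of LOS motion, about 2.8 cm at C-band. This is why slow, millimetre-to-centimetre motions appear as gradual phase patterns in interferograms.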

The "ShrimpView: A Versatile Dataset for Shrimp Detection and Recognition" is a curated collection of 10,000 samples designed to facilitate the training of deep learning models for shrimp detection and classification. Each sample consists of an image accompanied by 11 categorical attributes.

Light-matter interactions in indoor environments are strongly depolarizing. Nonetheless, the relatively small polarization signatures that remain are informative. To exploit this information, polarized-BRDF (pBRDF) models for common indoor materials are needed. Fresnel reflection and diffuse partial polarization are popular terms in pBRDF models, but the relative contribution of each is highly material-dependent and varies with scattering geometry and albedo.
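To show why the two terms behave differently with geometry, here is a sketch that computes the degree of linear polarization (DoLP) predicted by the textbook Fresnel equations for a smooth dielectric. It covers two cases: specular reflection, and diffuse light transmitted out through the surface. It assumes a real refractive index (for example n = 1.5) and ignores roughness and albedo. It is not the pBRDF model used with this dataset, and the function names are hypothetical.

```python
import numpy as np


def fresnel_reflectances(theta_i, n):
    """Fresnel power reflectances (R_s, R_p) for light arriving from air at
    angle theta_i (radians) on a smooth dielectric of real refractive index n."""
    cos_i = np.cos(theta_i)
    sin_t = np.sin(theta_i) / n  # Snell's law
    cos_t = np.sqrt(1.0 - sin_t ** 2)
    r_s = (cos_i - n * cos_t) / (cos_i + n * cos_t)
    r_p = (n * cos_i - cos_t) / (n * cos_i + cos_t)
    return r_s ** 2, r_p ** 2


def dolp_specular(theta, n):
    # DoLP of specularly (Fresnel) reflected light; s-polarization dominates.
    r_s, r_p = fresnel_reflectances(theta, n)
    return (r_s - r_p) / (r_s + r_p)


def dolp_diffuse(theta, n):
    # DoLP of diffuse light leaving the surface at emission angle theta.
    # By reciprocity, the transmittances T = 1 - R at the external angle
    # are used; p-polarization dominates.
    r_s, r_p = fresnel_reflectances(theta, n)
    t_s, t_p = 1.0 - r_s, 1.0 - r_p
    return (t_p - t_s) / (t_p + t_s)
```

In this model the specular DoLP reaches 1 at the Brewster angle (tan θ = n). The diffuse DoLP stays small and only grows toward grazing angles. The balance between the two terms therefore depends strongly on viewing geometry, before albedo or material effects are even considered.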

Recognizing and categorizing banknotes is a crucial task, especially for individuals with visual impairments. It plays a vital role in assisting them with everyday financial transactions, such as making purchases or accessing their workplaces or educational institutions. The primary objectives for creating this dataset were as follows:

This dataset contains video clips of five volunteers performing daily-life activities. Each clip is recorded with a Far InfraRed (FIR) camera and comes with an associated file containing the three-dimensional and two-dimensional coordinates of the main body joints in every frame. This makes it possible to train human pose estimation networks on FIR imagery.

We present RRODT, a real-world rainy video dataset for testing how well deraining methods help downstream object detection and object tracking. RRODT is composed of 57 videos with 10,258 frames in total. It provides two sets of annotations: one contains 33,077 objects for detection, and the other contains 408 unique objects for tracking.

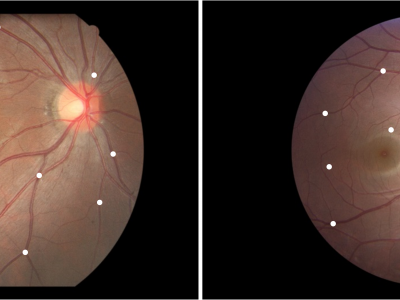

The Fundus Image Myopia Development (FIMD) dataset contains 70 retinal image pairs, each showing obvious myopia development between its two images. In addition, the two images in each pair share a large overlapping area, and no other retinopathy is present. To allow a reliable quantitative evaluation of registration results, we follow the annotation method of the Fundus Image Registration (FIRE) dataset [1] and, with the help of experienced ophthalmologists, label control points between the two retinal images of each pair. Each image pair is labeled with

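Control-point annotations like those in FIMD are commonly used to score a registration as the mean distance between the transformed moving-image points and their fixed-image counterparts. A method is then summarized by the fraction of image pairs whose error falls under a threshold. This minimal sketch assumes that protocol. The function names, the affine helper, and any threshold are hypothetical and are not taken from FIMD or FIRE.

```python
import numpy as np


def registration_error(moving_pts, fixed_pts, transform):
    """Mean Euclidean distance (pixels) between the moving-image control points
    mapped by `transform` and the corresponding fixed-image control points."""
    mapped = transform(np.asarray(moving_pts, dtype=float))
    diff = mapped - np.asarray(fixed_pts, dtype=float)
    return float(np.mean(np.linalg.norm(diff, axis=1)))


def success_rate(errors, threshold):
    """Fraction of image pairs whose registration error is below `threshold`."""
    return float(np.mean(np.asarray(errors) < threshold))


def affine(matrix):
    # Hypothetical helper: wrap a 2x3 affine matrix [A | t] as a point transform.
    a = np.asarray(matrix, dtype=float)
    return lambda pts: pts @ a[:, :2].T + a[:, 2]
```

Sweeping `threshold` over a range of pixel values gives a success-rate curve. This is a convenient way to compare registration methods on the same annotated pairs.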