Machine Learning

Five users aged 23, 25, 31, 42, and 46 participated in the experiment. The users sat comfortably in a chair. A green LED of 1 cm diameter was placed about 1 meter from the user's eyes. EEG signals were recorded using a g.USBAmp with 16 active electrodes. The users were stimulated with flickering LED light at frequencies of 5 Hz, 6 Hz, 7 Hz, and 8 Hz. The stimulation lasted 30 seconds. Each recorded signal was divided into training data (the first 20 seconds) and testing data (the following 10 seconds).
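The 20-second/10-second split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the sampling rate `fs = 256` is an assumption (the description does not state it), the function name `split_recording` is hypothetical, and the recording is random placeholder data with 16 channels.

```python
import numpy as np


def split_recording(signal, fs, train_seconds=20.0, test_seconds=10.0):
    """Split a (n_channels, n_samples) recording into train and test parts.

    The first `train_seconds` go to training and the next `test_seconds`
    go to testing, mirroring the split described in the text.
    """
    n_train = int(round(train_seconds * fs))
    n_test = int(round(test_seconds * fs))
    if signal.shape[-1] < n_train + n_test:
        raise ValueError("recording is shorter than the requested split")
    train = signal[..., :n_train]
    test = signal[..., n_train:n_train + n_test]
    return train, test


fs = 256  # assumed sampling rate in Hz; not given in the dataset description
rng = np.random.default_rng(0)
recording = rng.standard_normal((16, 30 * fs))  # placeholder for one 30 s, 16-channel signal

train, test = split_recording(recording, fs)
```

Splitting along the time axis before any feature extraction keeps the test segment unseen during training for each signal.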

691 Views

For the purpose of experimentation, the historical stock prices of three petroleum companies, Pakistan State Oil (PSO), Hascol, and Attock Petroleum Limited (APL), were extracted from the Pakistan Stock Exchange (PSX) website with a web scraper, covering the last four years. Different attributes related to the stocks of each company were extracted for each day. In addition, Twitter data for sentiment analysis was extracted for each company using Twint.

442 Views

In this study, we present advances on the development of proactive control for online individual user adaptation in a welfare robot guidance scenario, with the integration of three main modules: navigation control, visual human detection, and temporal error correlation-based neural learning. The proposed control approach can drive a mobile robot to autonomously navigate in relevant indoor environments. At the same time, it can predict human walking speed based on visual information without prior knowledge of personality and preferences (i.e., walking speed).

231 Views

A mobile sensor can be described as a kind of smart technology that can capture minor or major changes in an environment and respond by performing a particular task. The dataset is intended for forensic purposes and will help separate day-to-day activities from criminal actions. Smartphones equipped with sensors can be used to monitor and record simple daily activities such as walking, climbing stairs, eating, and more. To generate this dataset, we collected data for 13 classes of daily life activities, all performed by a single individual.

288 Views

Using Python, we crawled a total of 18,793 diabetes-related Q&A records posted between Jun. 1, 2016 and Sept. 1, 2020 on xywy.com, a well-known Chinese online medical community. Each record contains four parts from the question detail page (Title, Problem Description, User ID, and Question Time) and three parts from the doctor's answer page (Doctor ID, Answer Content, and Answer Time). After preprocessing such as cleaning and deduplication, we obtained 18,521 valid records.
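A cleaning and deduplication pass of the kind mentioned above might look like the sketch below. The description does not say how the preprocessing was done, so everything here is an assumption for illustration: the field names (`title`, `problem_description`, `answer_content`), the rule that records without a title or answer are dropped, and the choice of duplicate key are all hypothetical.

```python
import re


def normalize(text):
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def clean_and_deduplicate(records):
    """Return cleaned records with empty and duplicate entries removed.

    Assumed rules (not from the dataset description):
    - a record is invalid if its title or answer is empty after cleaning;
    - two records are duplicates if title, description, and answer match.
    """
    seen = set()
    kept = []
    for rec in records:
        cleaned = {key: normalize(value) for key, value in rec.items()}
        if not cleaned.get("title") or not cleaned.get("answer_content"):
            continue
        dedup_key = (
            cleaned["title"],
            cleaned.get("problem_description", ""),
            cleaned["answer_content"],
        )
        if dedup_key in seen:
            continue
        seen.add(dedup_key)
        kept.append(cleaned)
    return kept


# Made-up sample records, for illustration only.
sample = [
    {"title": "Diet advice ", "problem_description": "Type 2, newly diagnosed", "answer_content": "Limit sugar."},
    {"title": "Diet  advice", "problem_description": "Type 2,  newly diagnosed", "answer_content": "Limit sugar. "},
    {"title": "Insulin timing", "problem_description": "Before meals?", "answer_content": "   "},
    {"title": "Exercise", "problem_description": "How often?", "answer_content": "Most days."},
]

valid = clean_and_deduplicate(sample)
```

Normalizing whitespace before comparing lets records that differ only in spacing count as duplicates; a real pipeline would likely need site-specific rules as well.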

13 Views

This dataset provides Channel Impulse Response (CIR) measurements from standard-compliant IEEE 802.11ay packets to validate Integrated Sensing and Communication (ISAC) methods. The CIR sequences contain reflections of the transmitted packets on people moving in an indoor environment. They were collected with a 60 GHz software-defined radio experimentation platform based on the IEEE 802.11ay Wi-Fi standard, which, by operating in full-duplex mode, is not affected by frequency offsets.

The dataset is divided into two parts:

1738 Views

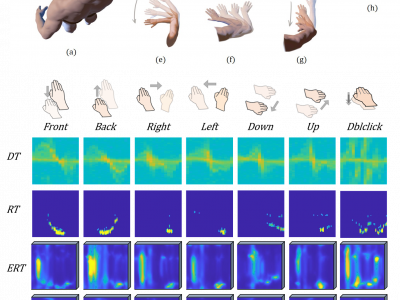

Radar-based dynamic gesture recognition has broad prospects in the field of touchless Human-Computer Interaction (HCI) due to advantages such as privacy protection and all-day operation. Because existing radar gesture datasets and methods lack complete motion direction information, they are difficult to apply to motion-direction-sensitive gesture recognition and to cross-domain (different users, locations, environments, etc.) recognition tasks.

762 Views

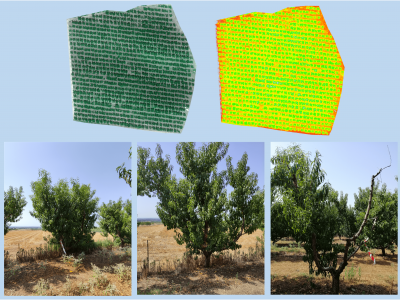

This peach tree disease detection dataset is a multimodal, multi-angle dataset constructed for monitoring the growth of peach trees, including stress analysis and prediction. It covers an orchard in the area of Thessaly, where 889 peach trees were recorded in a full crop season from Jul. 2021 to Sep. 2022. The dataset includes a) aerial / Unmanned Aerial Vehicle (UAV) images, b) ground RGB images/photos, and c) ground multispectral images/photos.

2029 Views
